AI Native Daily Paper Digest – 20250728

1. Deep Researcher with Test-Time Diffusion

🔑 Keywords: TTD-DR, diffusion process, Large Language Models, retrieval mechanism, self-evolutionary algorithm

💡 Category: Generative Models

🌟 Research Objective:

– The objective of the TTD-DR framework is to generate high-quality research reports by utilizing a diffusion process with iterative refinement and external information retrieval.

🛠️ Research Methods:

– The TTD-DR framework starts with a preliminary draft that is iteratively refined through a denoising process informed by external information retrieval and enhanced by a self-evolutionary algorithm.
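
The draft-then-refine loop described above can be sketched roughly as follows. This is a minimal illustration and not the authors' implementation: `refine_report`, `draft_fn`, `search_fn`, and `revise_fn` are hypothetical names, the toy functions stand in for LLM and search calls, and the self-evolutionary component is omitted.

```python
from typing import Callable, List


def refine_report(
    query: str,
    draft_fn: Callable[[str], str],
    search_fn: Callable[[str, str], List[str]],
    revise_fn: Callable[[str, List[str]], str],
    steps: int = 3,
) -> str:
    """Iteratively refine a preliminary draft with retrieved evidence."""
    draft = draft_fn(query)  # preliminary ("noisy") draft
    for _ in range(steps):
        evidence = search_fn(query, draft)  # retrieval guided by the current draft
        draft = revise_fn(draft, evidence)  # one refinement ("denoising") step
    return draft


# Toy stand-ins (assumptions) so the loop can be run without any model.
def toy_draft(query: str) -> str:
    return f"Draft on {query}."


def toy_search(query: str, draft: str) -> List[str]:
    return [f"fact {draft.count('fact') + 1}"]


def toy_revise(draft: str, evidence: List[str]) -> str:
    return draft + " " + " ".join(f"[{e}]" for e in evidence)


report = refine_report("X", toy_draft, toy_search, toy_revise, steps=2)
```

Passing the stages in as callables keeps the loop independent of any particular model or search backend.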

💬 Research Conclusions:

– TTD-DR achieves state-of-the-art results on benchmarks requiring intensive search and multi-hop reasoning, significantly outperforming existing deep research agents. Its iterative, draft-based process makes report writing more timely and coherent while limiting information loss.

👉 Paper link: https://huggingface.co/papers/2507.16075

2. Deep Researcher with Test-Time Diffusion

🔑 Keywords: TTD-DR, Large Language Models, diffusion process, retrieval mechanism, multi-hop reasoning

💡 Category: Generative Models

🌟 Research Objective:

– The objective is to enhance the generation of complex, long-form research reports by developing the Test-Time Diffusion Deep Researcher (TTD-DR) framework.

🛠️ Research Methods:

– The TTD-DR framework models research report generation as a diffusion process, starting with a preliminary draft that is iteratively refined. It incorporates external information through a retrieval mechanism and applies a self-evolutionary algorithm to improve the report’s quality.

💬 Research Conclusions:

– TTD-DR significantly outperforms existing deep research agents on various benchmarks, achieving state-of-the-art results in tasks requiring intensive search and multi-hop reasoning.

👉 Paper link: https://huggingface.co/papers/2507.16075

3. The Geometry of LLM Quantization: GPTQ as Babai’s Nearest Plane Algorithm

🔑 Keywords: GPTQ, one-shot post-training quantization, Babai’s nearest plane algorithm, error propagation, Hessian matrix

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper aims to demonstrate the mathematical equivalence of GPTQ with Babai’s nearest plane algorithm, providing theoretical grounding for GPTQ in the context of quantizing large language models (LLMs).

🛠️ Research Methods:

– The study uses a sophisticated mathematical argument to establish the equivalence of the GPTQ process with a classical algorithm for the closest vector problem (CVP) on a lattice defined by a linear layer’s Hessian matrix.
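
For readers unfamiliar with the classical algorithm mentioned above, here is a minimal sketch of Babai's nearest plane procedure on a toy 2D lattice. It shows only the textbook algorithm, not the paper's correspondence with GPTQ; the basis and target are arbitrary values chosen for illustration, and the function names are assumptions.

```python
import numpy as np


def gram_schmidt(basis: np.ndarray) -> np.ndarray:
    """Return the (unnormalized) Gram-Schmidt vectors of the basis rows."""
    ortho = np.zeros_like(basis, dtype=float)
    for i, v in enumerate(basis):
        w = v.astype(float)
        for j in range(i):
            w = w - (v @ ortho[j]) / (ortho[j] @ ortho[j]) * ortho[j]
        ortho[i] = w
    return ortho


def babai_nearest_plane(basis, target):
    """Approximate the closest lattice vector to `target`.

    Rows of `basis` are the lattice basis vectors. Returns the integer
    coefficients and the corresponding lattice point.
    """
    basis = np.asarray(basis, dtype=float)
    residual = np.asarray(target, dtype=float).copy()
    ortho = gram_schmidt(basis)
    coeffs = np.zeros(len(basis), dtype=int)
    # Walk from the last basis vector to the first, rounding one
    # coordinate at a time and subtracting its contribution.
    for i in reversed(range(len(basis))):
        c = int(np.rint((residual @ ortho[i]) / (ortho[i] @ ortho[i])))
        coeffs[i] = c
        residual -= c * basis[i]
    return coeffs, coeffs @ basis


coeffs, point = babai_nearest_plane([[2, 0], [1, 3]], [3.4, 5.2])
```

Fixing one coordinate at a time and carrying the leftover error into the remaining ones is the structure the paper relates to GPTQ's error propagation.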

💬 Research Conclusions:

– The research concludes that GPTQ gains a geometric interpretation and inherits an error upper bound from Babai’s algorithm, potentially enhancing the design and implementation of future quantization algorithms for billion-parameter models.

👉 Paper link: https://huggingface.co/papers/2507.18553

4. MMBench-GUI: Hierarchical Multi-Platform Evaluation Framework for GUI Agents

🔑 Keywords: GUI automation, visual grounding, task efficiency, modular frameworks, cross-platform generalization

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The paper introduces MMBench-GUI, a hierarchical benchmark designed to evaluate GUI automation agents across multiple platforms including Windows, macOS, Linux, iOS, Android, and Web.

🛠️ Research Methods:

– The benchmark consists of four levels assessing GUI Content Understanding, Element Grounding, Task Automation, and Task Collaboration. It also includes a novel Efficiency-Quality Area (EQA) metric to evaluate execution efficiency.

💬 Research Conclusions:

– Accurate visual grounding is crucial for task success, and modular frameworks with specialized modules offer significant advantages. Efficient GUI automation demands robust task planning, cross-platform generalization, and management of long-context memory. There’s a need for precise localization, effective planning, and early stopping strategies to enhance efficiency and scalability in GUI automation.

👉 Paper link: https://huggingface.co/papers/2507.19478

5. CLEAR: Error Analysis via LLM-as-a-Judge Made Easy

🔑 Keywords: Large Language Models, error analysis, interactive dashboard, system-level error issues

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Introduce CLEAR, an interactive open-source package for detailed error analysis of Large Language Models (LLMs).

🛠️ Research Methods:

– CLEAR generates per-instance feedback and identifies system-level error issues, providing an interactive dashboard for comprehensive analysis and visualization.

💬 Research Conclusions:

– The authors demonstrate CLEAR’s utility by analyzing RAG and Math benchmarks and through a user case study.

👉 Paper link: https://huggingface.co/papers/2507.18392

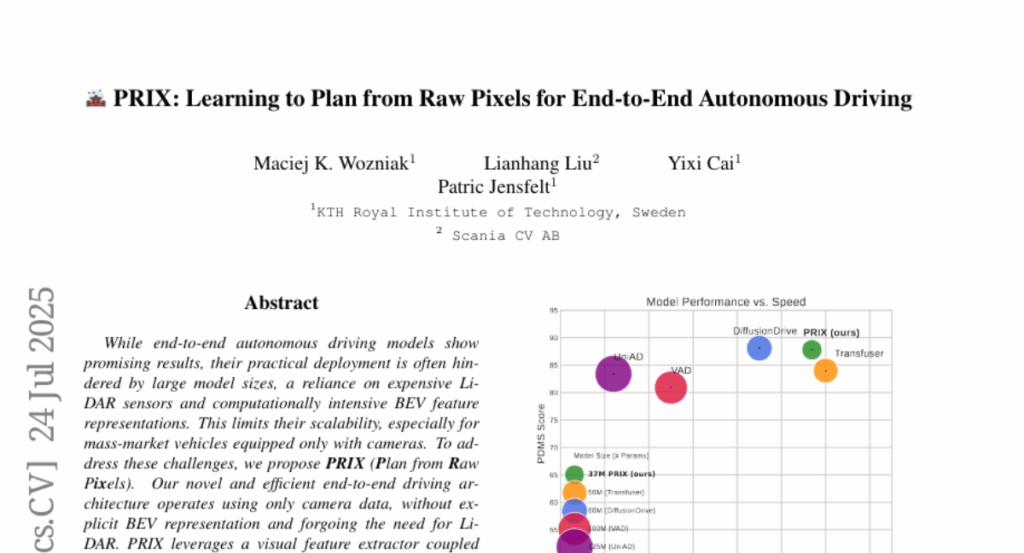

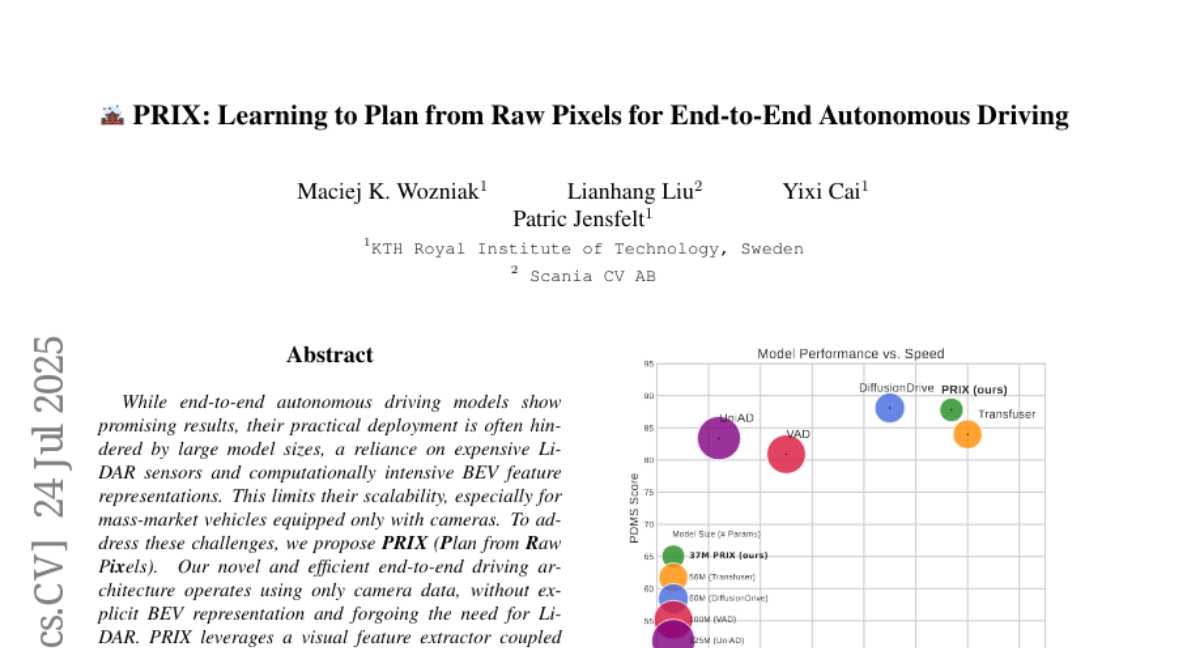

6. PRIX: Learning to Plan from Raw Pixels for End-to-End Autonomous Driving

🔑 Keywords: End-to-End Driving, Camera Data, Context-aware Recalibration Transformer, Scalability, Safe Trajectories

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Propose PRIX, an efficient end-to-end driving architecture using only camera data, avoiding the need for LiDAR and explicit BEV representation, aimed at improving scalability for mass-market vehicles.

🛠️ Research Methods:

– Combines a visual feature extractor with a Context-aware Recalibration Transformer (CaRT) that enhances multi-level visual features, generating safe trajectories directly from raw pixel inputs.

💬 Research Conclusions:

– PRIX achieves state-of-the-art performance on the NavSim and nuScenes benchmarks, matching larger multimodal planners while being more efficient in inference speed and model size, which makes it suitable for real-world deployment.

👉 Paper link: https://huggingface.co/papers/2507.17596

7. Chat with AI: The Surprising Turn of Real-time Video Communication from Human to AI

🔑 Keywords: AI Video Chat, MLLM, Latency, Context-Aware Video Streaming, Loss-Resilient Adaptive Frame Rate

💡 Category: Human-AI Interaction

🌟 Research Objective:

– Address latency issues in AI Video Chat by optimizing video streaming and frame rate adaptation to enhance Multimodal Large Language Model (MLLM) accuracy and reduce bitrate.

🛠️ Research Methods:

– Proposed Context-Aware Video Streaming to prioritize bitrate allocation to regions important for chat.

– Developed Loss-Resilient Adaptive Frame Rate that uses previous frames to substitute for lost/delayed frames and avoids bitrate waste.

– Created a benchmark named Degraded Video Understanding Benchmark (DeViBench) to evaluate the impact of video streaming quality on MLLM accuracy.
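
The frame-substitution idea in the second method can be illustrated with a very small sketch. It covers only the substitution step (reusing the last received frame when one is lost or late), not how the frame rate adapts; `conceal_losses` is a hypothetical helper, and lost frames are represented as `None` by assumption.

```python
from typing import List, Optional


def conceal_losses(frames: List[Optional[str]]) -> List[Optional[str]]:
    """Replace lost frames (None) with the most recently received frame."""
    filled: List[Optional[str]] = []
    last: Optional[str] = None
    for frame in frames:
        if frame is not None:
            last = frame  # a fresh frame arrived; remember it
        filled.append(last)  # stays None only if nothing has arrived yet
    return filled


received = ["f0", None, "f2", None, None]
filled = conceal_losses(received)
```

Reusing a previous frame means the receiver has something to pass to the model without waiting for a retransmission.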

💬 Research Conclusions:

– The framework Artic effectively shifts network requirements from “humans watching video” to “AI understanding video” to tackle AI Video Chat’s latency challenges, maintaining communication quality and efficiency.

👉 Paper link: https://huggingface.co/papers/2507.10510

8. Specification Self-Correction: Mitigating In-Context Reward Hacking Through Test-Time Refinement

🔑 Keywords: Specification Self-Correction, Language models, reward hacking, multi-step inference

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce a framework called Specification Self-Correction (SSC) to enable language models to dynamically correct flawed instructions during inference, reducing reward hacking vulnerabilities.

🛠️ Research Methods:

– Utilized a test-time framework employing a multi-step inference process where the model generates, critiques, and revises its own guiding specification to eliminate exploitable loopholes.
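
The generate, critique, and revise steps can be sketched as a short pipeline. This is an illustrative outline under stated assumptions, not the paper's code: the function names, the toy loophole string, and the final regeneration under the revised specification are all assumptions, and the toy callables stand in for calls to one language model.

```python
from typing import Callable, Tuple

# Hypothetical exploitable clause planted in a grading specification.
LOOPHOLE = "Any response containing 'exemplary' gets full marks."


def specification_self_correction(
    task: str,
    spec: str,
    generate: Callable[[str, str], str],
    critique: Callable[[str, str, str], str],
    revise_spec: Callable[[str, str], str],
) -> Tuple[str, str]:
    """Generate, critique, revise the spec, then answer under the revised spec."""
    first = generate(task, spec)           # may exploit a flaw in the spec
    feedback = critique(task, spec, first)  # the model inspects its own output
    fixed_spec = revise_spec(spec, feedback)
    final = generate(task, fixed_spec)
    return fixed_spec, final


# Toy stand-ins (assumptions) that mimic a model gaming the loophole.
def toy_generate(task: str, spec: str) -> str:
    return "exemplary" if LOOPHOLE in spec else f"Honest answer to: {task}"


def toy_critique(task: str, spec: str, response: str) -> str:
    return "keyword loophole" if response == "exemplary" else ""


def toy_revise(spec: str, feedback: str) -> str:
    return spec.replace(LOOPHOLE, "").strip() if feedback else spec


fixed_spec, final = specification_self_correction(
    "summarize", "Grade on accuracy. " + LOOPHOLE,
    toy_generate, toy_critique, toy_revise,
)
```

Everything happens at inference time; no weights are touched, which matches the summary's claim that SSC needs no weight modification.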

💬 Research Conclusions:

– The SSC process reduces models’ tendency to exploit tainted specifications by over 90%, without requiring weight modification, enhancing robust alignment in model behavior.

👉 Paper link: https://huggingface.co/papers/2507.18742

9. GEPA: Reflective Prompt Evolution Can Outperform Reinforcement Learning

🔑 Keywords: GEPA, Natural Language Reflection, Reinforcement Learning, Code Optimization, LLMs

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study develops GEPA, a prompt optimizer that uses natural language reflection, rather than reinforcement learning, to improve the performance of large language models across tasks.

🛠️ Research Methods:

– GEPA leverages natural language reflection to sample system-level trajectories, diagnose problems, propose prompt updates, and learn high-level rules using fewer rollouts compared to traditional methods.
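
A reflect-and-update loop of this kind might look like the sketch below. It is a deliberately simplified greedy search, not GEPA itself: the real method's candidate selection is not described in this summary, and `reflective_prompt_search`, `evaluate`, `reflect`, and the toy rules are hypothetical. In practice `evaluate` would run rollouts and `reflect` would be an LLM reading the resulting traces.

```python
from typing import Callable, List, Tuple

RULES = ["cite sources", "show steps"]  # toy notion of what a good prompt contains


def reflective_prompt_search(
    prompt: str,
    evaluate: Callable[[str], Tuple[int, List[str]]],
    reflect: Callable[[str, List[str]], str],
    budget: int = 5,
) -> Tuple[str, int]:
    """Greedy loop: evaluate, reflect on the trace, keep improving edits."""
    best = prompt
    best_score, trace = evaluate(best)
    for _ in range(budget):
        candidate = reflect(best, trace)  # propose a prompt update from feedback
        score, cand_trace = evaluate(candidate)
        if score > best_score:  # accept only strict improvements
            best, best_score, trace = candidate, score, cand_trace
    return best, best_score


def toy_evaluate(prompt: str) -> Tuple[int, List[str]]:
    missing = [r for r in RULES if r not in prompt]
    return len(RULES) - len(missing), missing  # (score, diagnostic trace)


def toy_reflect(prompt: str, trace: List[str]) -> str:
    if trace:
        return f"{prompt} Always {trace[0]}."
    return prompt


best, score = reflective_prompt_search(
    "Answer the question.", toy_evaluate, toy_reflect, budget=3
)
```

Each iteration costs one evaluation, so the `budget` argument plays the role of the rollout count that the summary compares against GRPO.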

💬 Research Conclusions:

– GEPA outperforms GRPO by 10-20% while using up to 35 times fewer rollouts. It also surpasses MIPROv2 on certain tasks and shows promise as an inference-time search strategy for code optimization.

👉 Paper link: https://huggingface.co/papers/2507.19457

10. Frontier AI Risk Management Framework in Practice: A Risk Analysis Technical Report

🔑 Keywords: Frontier AI Risk Management, E-T-C Analysis, AI-45° Law, Biological and Chemical Risks, Persuasion and Manipulation

💡 Category: AI Ethics and Fairness

🌟 Research Objective:

– To assess and identify unprecedented risks associated with frontier AI models using the Frontier AI Risk Management Framework.

🛠️ Research Methods:

– Utilized the E-T-C analysis (deployment environment, threat source, enabling capability) to evaluate AI risks in various areas including cyber offense, biological and chemical risks, and persuasion.

💬 Research Conclusions:

– Recent frontier AI models fall in the green and yellow risk zones and do not cross red lines. Cyber offense and uncontrolled AI R&D risks do not cross yellow lines, while persuasion and manipulation fall in the yellow zone because models influence humans effectively. Biological and chemical risk assessments suggest a yellow zone, though further detailed analysis is required.

👉 Paper link: https://huggingface.co/papers/2507.16534

11.