AI Native Daily Paper Digest – 20250807

1. VeriGUI: Verifiable Long-Chain GUI Dataset

🔑 Keywords: VeriGUI, GUI Agents, Long-Chain Complexity, Subtask-Level Verifiability, Human-Computer Interaction

💡 Category: Human-AI Interaction

🌟 Research Objective:

– Introduce VeriGUI, a dataset designed for evaluating GUI agents in long-horizon tasks, focusing on complexity and subtask verifiability.

🛠️ Research Methods:

– Develop and annotate a GUI dataset with task trajectories spanning desktop and web environments, emphasizing long-chain and subtask-level verification.

💬 Research Conclusions:

– Highlight significant performance gaps in handling long-horizon tasks by current GUI agents, indicating a need for improved planning and decision-making capabilities.

👉 Paper link: https://huggingface.co/papers/2508.04026

2. Is Chain-of-Thought Reasoning of LLMs a Mirage? A Data Distribution Lens

🔑 Keywords: Chain-of-Thought, CoT reasoning, Large Language Model, distribution discrepancy, inductive bias

💡 Category: Natural Language Processing

🌟 Research Objective:

– Investigate the limitations of Chain-of-Thought reasoning in Large Language Models through the analysis of distribution discrepancies between training and test data.

🛠️ Research Methods:

– Developed a controlled environment called DataAlchemy to train LLMs from scratch, systematically testing them under various distribution conditions across dimensions of task, length, and format.

💬 Research Conclusions:

– CoT reasoning in LLMs is not robust and tends to fail when tested beyond training distributions, highlighting the challenge of achieving genuine and generalizable reasoning.

👉 Paper link: https://huggingface.co/papers/2508.01191

3. Efficient Agents: Building Effective Agents While Reducing Cost

🔑 Keywords: Large Language Model, Efficiency-Effectiveness Trade-off, Agent Framework, Cost-of-Pass, Efficient Agents

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To systematically examine the efficiency-effectiveness trade-off in LLM-driven agent systems, aiming to achieve cost-effective designs without sacrificing performance.

🛠️ Research Methods:

– The study addresses three critical questions regarding task complexity, diminishing returns from additional modules, and efficiency gains through agent framework design by conducting empirical analysis on the GAIA benchmark, evaluating LLM backbone selection, agent framework designs, and test-time scaling strategies.

💬 Research Conclusions:

– The research introduces Efficient Agents, an agent framework that maintains 96.7% performance of the leading OWL framework while reducing operational costs and improving cost-of-pass by 28.4%.

– Provides actionable insights for designing efficient, high-performing AI-driven solutions, enhancing accessibility and sustainability.

👉 Paper link: https://huggingface.co/papers/2508.02694

4. SEAgent: Self-Evolving Computer Use Agent with Autonomous Learning from Experience

🔑 Keywords: SEAgent, Experiential Learning, Computer Use Agents, World State Model, Curriculum Generator

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The paper aims to address the limitations of large vision-language models in mastering novel and specialized software environments by proposing SEAgent, a self-evolving framework for computer-use agents.

🛠️ Research Methods:

– The SEAgent framework employs experiential learning through a World State Model and a Curriculum Generator to facilitate the autonomous mastery of software by computer-use agents.

– It includes adversarial imitation of failure actions and Group Relative Policy Optimization (GRPO) on successful actions.

– A specialist-to-generalist training strategy is used to develop a stronger generalist agent.

💬 Research Conclusions:

– The SEAgent framework significantly improves the success rate by 23.2% compared to a competitive open-source CUA, achieving a performance from 11.3% to 34.5% in five novel software environments within OS-World.

👉 Paper link: https://huggingface.co/papers/2508.04700

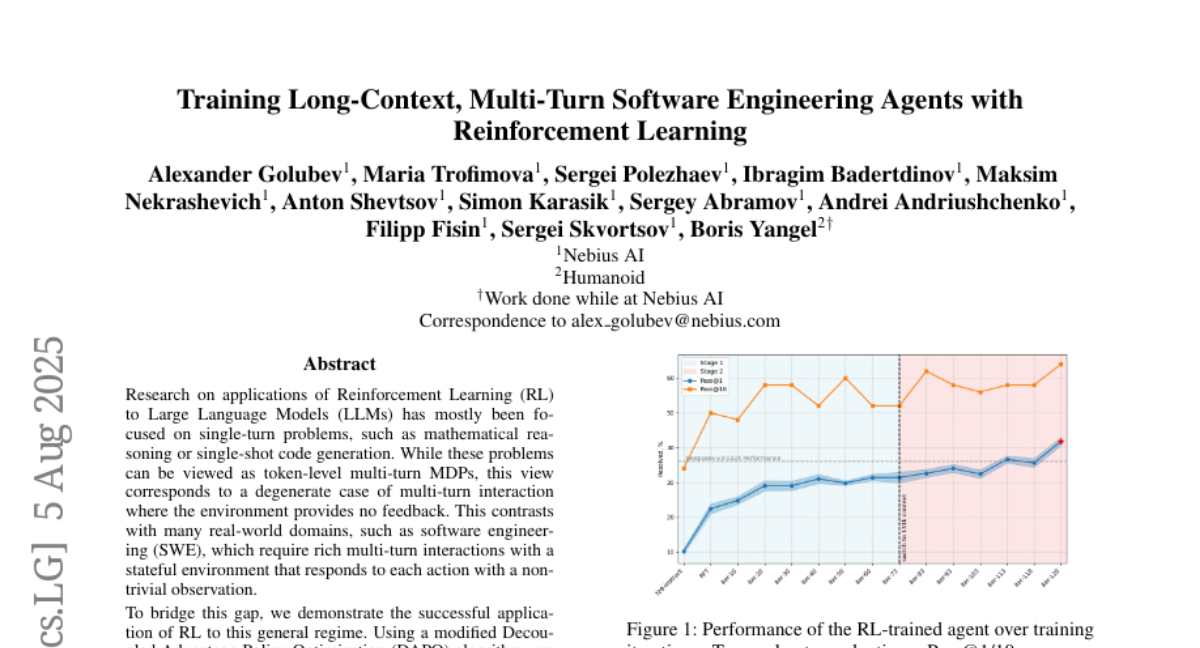

5. Training Long-Context, Multi-Turn Software Engineering Agents with Reinforcement Learning

🔑 Keywords: Reinforcement Learning, Large Language Models, Software Engineering, Decoupled Advantage Policy Optimization

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to expand the application of Reinforcement Learning to multistep interactions in real-world domains such as software engineering.

🛠️ Research Methods:

– Utilizes a modified Decoupled Advantage Policy Optimization algorithm to train an AI agent, specifically based on the Qwen2.5-72B-Instruct model, on software engineering tasks.

💬 Research Conclusions:

– The proposed approach significantly improves the agent’s success rate on the SWE-bench Verified benchmark and is competitive against leading models on SWE-rebench.

👉 Paper link: https://huggingface.co/papers/2508.03501

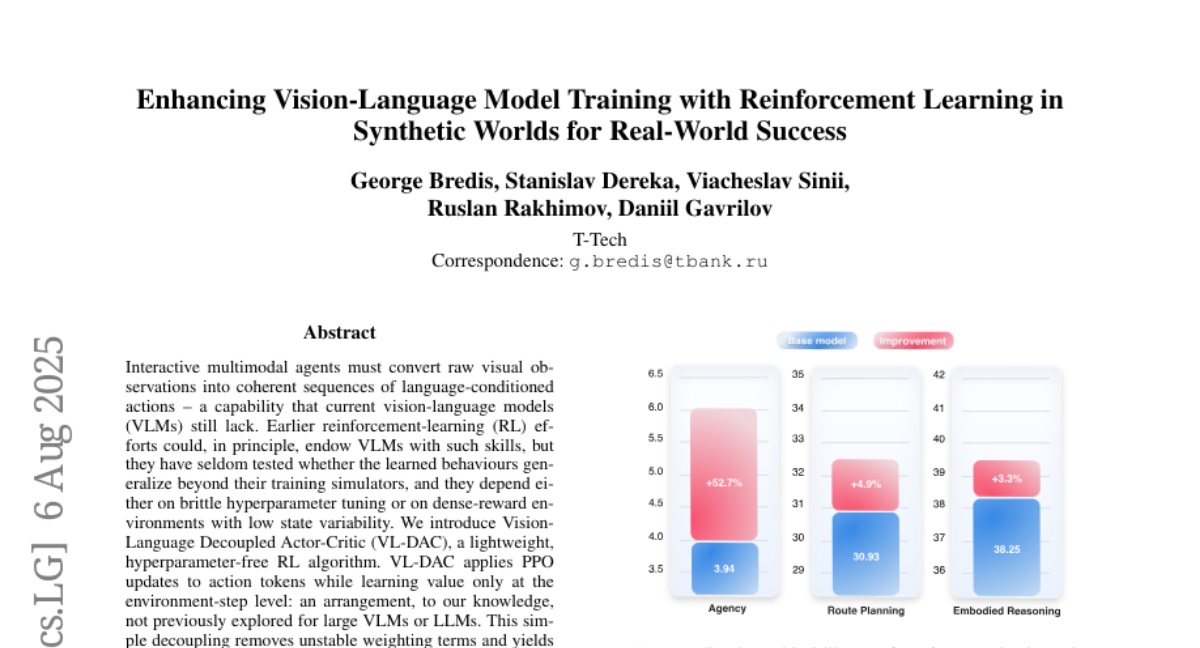

6. Enhancing Vision-Language Model Training with Reinforcement Learning in Synthetic Worlds for Real-World Success

🔑 Keywords: VL-DAC, VLMs, reinforcement learning, hyperparameter-free, simulators

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To develop a hyperparameter-free reinforcement learning algorithm (VL-DAC) that enables vision-language models (VLMs) to learn generalized policies from inexpensive simulators.

🛠️ Research Methods:

– The paper introduces VL-DAC, which utilizes PPO updates and decouples action token learning from value learning at the environment-step level.

💬 Research Conclusions:

– VL-DAC significantly improves generalized policy learning in VLMs across various benchmarks such as BALROG, VSI-Bench, and VisualWebBench without compromising image understanding accuracy.

👉 Paper link: https://huggingface.co/papers/2508.04280

7. Agent Lightning: Train ANY AI Agents with Reinforcement Learning

🔑 Keywords: Agent Lightning, Reinforcement Learning, Large Language Models, Training-Agent Disaggregation, hierarchical RL algorithm

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study introduces Agent Lightning, a framework for RL-based training of LLMs in AI agents, aiming to decouple agent execution from training.

🛠️ Research Methods:

– Utilizes a hierarchical RL algorithm called LightningRL, with a credit assignment module, to transform agent-generated trajectories into training transitions.

– Implements a Training-Agent Disaggregation architecture to integrate agent observability and standardize the finetuning interface.

💬 Research Conclusions:

– Demonstrates stable and continuous improvements across various tasks, highlighting the framework’s potential for real-world agent training and deployment.

👉 Paper link: https://huggingface.co/papers/2508.03680

8. CoTox: Chain-of-Thought-Based Molecular Toxicity Reasoning and Prediction

🔑 Keywords: CoTox, LLM, Chain-of-Thought, Multi-toxicity Prediction, Drug Development

💡 Category: AI in Healthcare

🌟 Research Objective:

– To integrate LLMs with chain-of-thought reasoning to enhance multi-toxicity prediction in drug development by incorporating chemical structures, biological pathways, and gene ontology terms.

🛠️ Research Methods:

– Utilizing CoTox framework that combines chemical structure data, biological pathways, and gene ontology for interpretable toxicity predictions with LLMs, demonstrating effectiveness using various LLMs including GPT-4o.

💬 Research Conclusions:

– CoTox outperforms traditional and deep learning models in toxicity prediction, enhances reasoning ability by using IUPAC names, and aligns predictions with physiological responses, thereby improving interpretability and supporting early-stage drug safety assessment.

👉 Paper link: https://huggingface.co/papers/2508.03159

9. Sotopia-RL: Reward Design for Social Intelligence

🔑 Keywords: Social intelligence, Reinforcement Learning, partial observability, multi-dimensional rewards, Markov decision process

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The objective of Sotopia-RL is to enhance social intelligence in large language models by refining feedback into utterance-level, multi-dimensional rewards, improving performance in social tasks such as negotiation and collaboration.

🛠️ Research Methods:

– Sotopia-RL addresses partial observability and multi-dimensionality in social interactions by implementing utterance-level credit assignment and multi-dimensional rewards within an open-ended social learning environment called Sotopia.

💬 Research Conclusions:

– Experiments demonstrate that Sotopia-RL achieves state-of-the-art social goal completion scores, outperforming existing approaches, with ablation studies confirming the necessity of the proposed methodologies. The model’s implementation is available publicly.

👉 Paper link: https://huggingface.co/papers/2508.03905

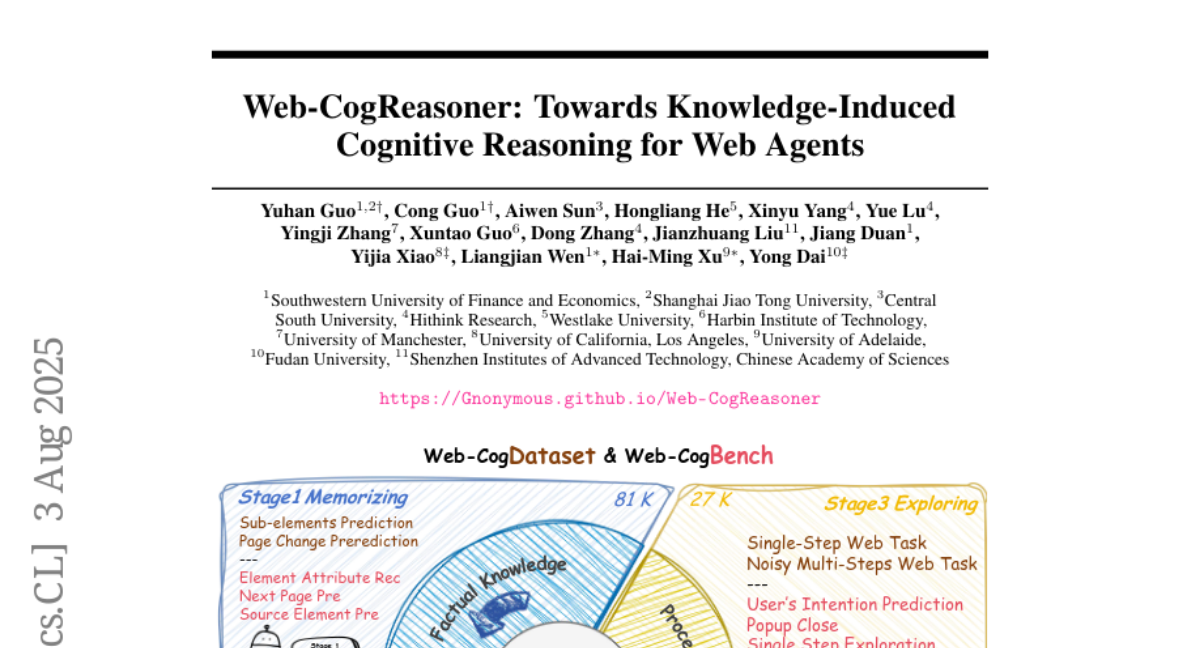

10. Web-CogReasoner: Towards Knowledge-Induced Cognitive Reasoning for Web Agents

🔑 Keywords: Web-CogKnowledge Framework, Multimodal Learning, Knowledge Representation, Cognitive Processes, AI Native

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– Develop a framework that enhances web agents’ abilities through structured knowledge learning and cognitive processes.

🛠️ Research Methods:

– Proposing the Web-CogKnowledge Framework and constructing the Web-CogDataset to categorize knowledge and facilitate cognitive reasoning.

– Implementing a novel knowledge-driven Chain-of-Thought (CoT) reasoning framework with the Web-CogReasoner.

💬 Research Conclusions:

– The proposed framework significantly outperforms existing models in task generalization, with a comprehensive evaluation suite introduced for performance assessment.

👉 Paper link: https://huggingface.co/papers/2508.01858

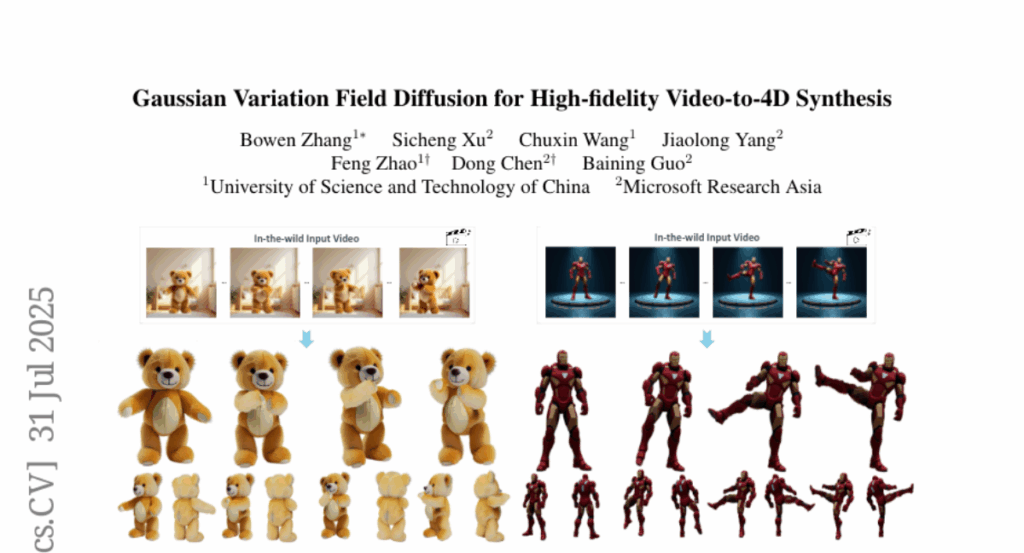

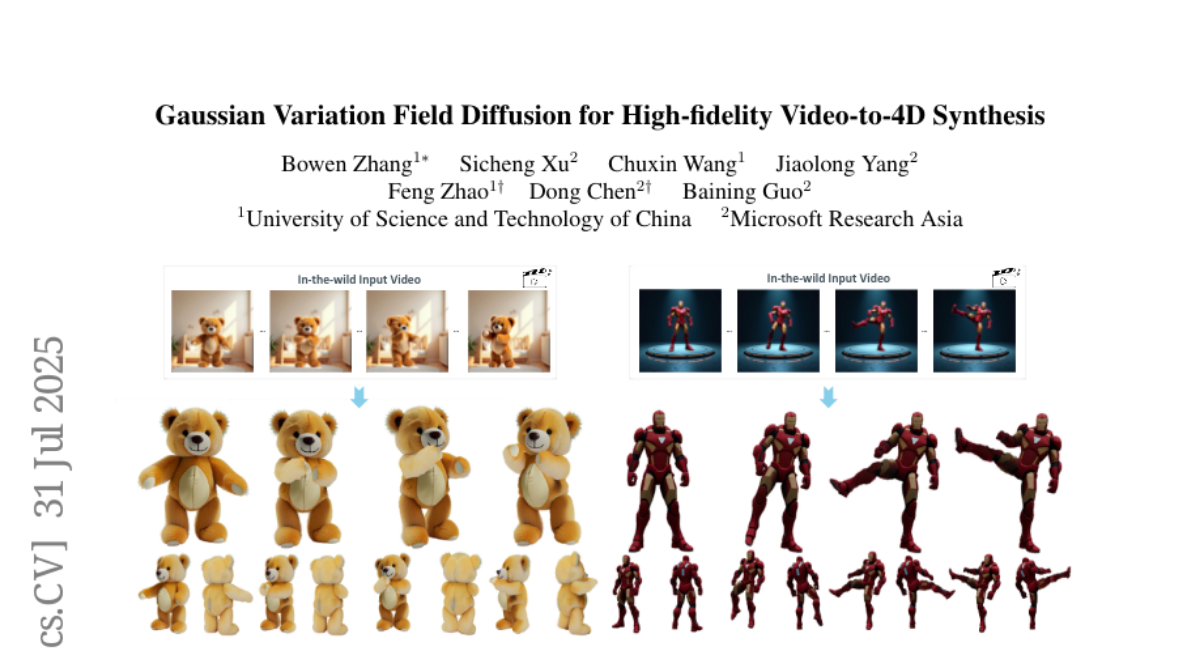

11. Gaussian Variation Field Diffusion for High-fidelity Video-to-4D Synthesis

🔑 Keywords: Video-to-4D generation, 3D content, Gaussian Splats, Variation Field VAE, Diffusion Transformer

💡 Category: Generative Models

🌟 Research Objective:

– Introducing a novel framework for generating high-quality dynamic 3D content from single video inputs.

🛠️ Research Methods:

– Utilizing a Direct 4DMesh-to-GS Variation Field VAE for efficient encoding and a Gaussian Variation Field diffusion model with a temporal-aware Diffusion Transformer.

💬 Research Conclusions:

– Demonstrated superior quality and generalization in generating 3D content compared to existing methods, with effectiveness on in-the-wild video inputs trained on synthetic data.

👉 Paper link: https://huggingface.co/papers/2507.23785

12. LaTCoder: Converting Webpage Design to Code with Layout-as-Thought

🔑 Keywords: Design-to-Code, Multimodal Large Language Models (MLLMs), Chain-of-Thought, Layout Preservation

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to enhance layout preservation in design-to-code tasks by developing LaTCoder, which preserves webpage designs during code generation.

🛠️ Research Methods:

– A novel approach is implemented by dividing webpage designs into image blocks and using Chain-of-Thought reasoning with Multimodal Large Language Models (MLLMs) to generate code.

– Application of assembly strategies like absolute positioning and MLLM-based methods followed by dynamic selection.

💬 Research Conclusions:

– LaTCoder shows significant improvement in automatic metrics with a 66.67% increase in TreeBLEU and a 38% decrease in MAE using DeepSeek-VL2.

– Human preference evaluations reveal that annotators prefer LaTCoder-generated webpages in over 60% of cases, demonstrating effectiveness.

👉 Paper link: https://huggingface.co/papers/2508.03560