AI Native Daily Paper Digest – 20250904

1. Open Data Synthesis For Deep Research

🔑 Keywords: AI-generated summary, Deep Research, Hierarchical Constraint Satisfaction Problems, dual-agent system, reasoning trajectories

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The objective is to enhance Deep Research tasks by using a scalable framework called InfoSeek, which synthesizes hierarchical constraint satisfaction problems.

🛠️ Research Methods:

– Employed a dual-agent system to build a Research Tree from large-scale webpages, transforming these trees into natural language questions for complex task synthesis.

– Utilized reject sampling to generate reasoning trajectories and enable effective training.

💬 Research Conclusions:

– Models trained with InfoSeek outperform larger baseline models on challenging benchmarks, demonstrating significant improvement in performance and optimization strategies.

👉 Paper link: https://huggingface.co/papers/2509.00375

2. Robix: A Unified Model for Robot Interaction, Reasoning and Planning

🔑 Keywords: Robix, Vision-Language Model, Robot Reasoning, Task Planning, Human-Robot Interaction

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The research aims to introduce Robix, a unified model combining robot reasoning, task planning, and natural language interaction to enhance interactive task execution.

🛠️ Research Methods:

– Robix employs a vision-language architecture, integrating chain-of-thought reasoning and a three-stage training strategy, including continued pretraining, supervised finetuning, and reinforcement learning.

💬 Research Conclusions:

– Extensive experiments demonstrate that Robix surpasses both open-source and commercial baselines in interactive task execution, showing strong generalization across diverse instruction types and various user-involved tasks.

👉 Paper link: https://huggingface.co/papers/2509.01106

3. LMEnt: A Suite for Analyzing Knowledge in Language Models from Pretraining Data to Representations

🔑 Keywords: Language models, knowledge acquisition, pretraining, knowledge representations, entity-based retrieval

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The paper introduces LMEnt, a suite aimed at analyzing how language models acquire knowledge during pretraining.

🛠️ Research Methods:

– LMEnt provides a knowledge-rich pretraining corpus annotated with entity mentions, an entity-based retrieval method, and 12 pretrained models with up to 1 billion parameters.

💬 Research Conclusions:

– The study highlights that while fact frequency is important for knowledge acquisition, it doesn’t fully explain learning trends, demonstrating the utility of LMEnt in further understanding knowledge representations and learning dynamics in language models.

👉 Paper link: https://huggingface.co/papers/2509.03405

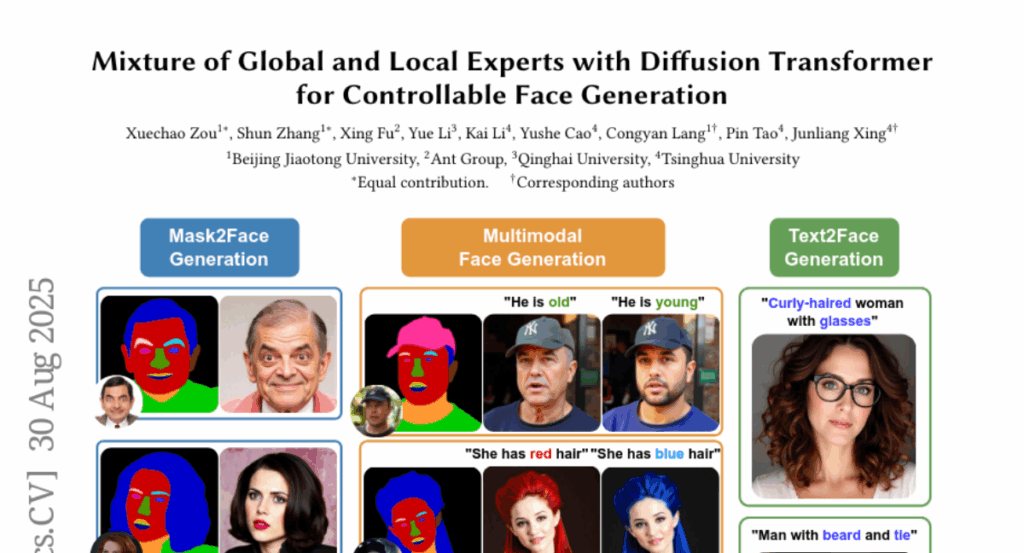

4. Mixture of Global and Local Experts with Diffusion Transformer for Controllable Face Generation

🔑 Keywords: Diffusion Transformers, Semantic-decoupled latent modeling, Controllable face generation, Dynamic gating, Zero-shot generalization

💡 Category: Generative Models

🌟 Research Objective:

– Introduce Face-MoGLE for high-quality, controllable face generation using semantic-decoupled latent modeling.

🛠️ Research Methods:

– Utilize Diffusion Transformers with a mixture of global and local experts, and a dynamic gating network for fine-grained controllability.

💬 Research Conclusions:

– Face-MoGLE demonstrates effectiveness in both multimodal and monomodal settings and exhibits robust zero-shot generalization capability.

👉 Paper link: https://huggingface.co/papers/2509.00428

5. MOSAIC: Multi-Subject Personalized Generation via Correspondence-Aware Alignment and Disentanglement

🔑 Keywords: Multi-subject generation, Semantic alignment, Feature disentanglement, Semantic correspondence, MOSAIC

💡 Category: Generative Models

🌟 Research Objective:

– To enhance multi-subject image generation with precise semantic alignment and orthogonal feature disentanglement.

🛠️ Research Methods:

– Utilizing a representation-centric framework, introducing SemAlign-MS, and implementing semantic correspondence attention loss and multi-reference disentanglement loss to ensure fidelity and coherence in image synthesis.

💬 Research Conclusions:

– The MOSAIC framework achieves state-of-the-art performance, maintaining high fidelity with 4+ reference subjects, surpassing existing methods which degrade beyond three subjects.

👉 Paper link: https://huggingface.co/papers/2509.01977

6. Planning with Reasoning using Vision Language World Model

🔑 Keywords: Vision Language World Model, Visual Planning, Semantic Abstraction

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to develop the Vision Language World Model (VLWM) to enhance visual planning through language-based world modeling.

🛠️ Research Methods:

– VLWM integrates language-based world modeling, action policy learning, and dynamics modeling. It uses a foundation model, aided by Tree of Captions and iterative LLM Self-Refine, to predict action and world state changes.

💬 Research Conclusions:

– VLWM excels in Visual Planning for Assistance, outperforming existing models in benchmarks like RoboVQA and achieving a significant improvement of +27% Elo score in PlannerArena human evaluations.

👉 Paper link: https://huggingface.co/papers/2509.02722

7. SATQuest: A Verifier for Logical Reasoning Evaluation and Reinforcement Fine-Tuning of LLMs

🔑 Keywords: SATQuest, Logical Reasoning, Large Language Models, Reinforcement Fine-tuning

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The study aims to evaluate and enhance the logical reasoning capabilities of LLMs by generating diverse SAT-based problems.

🛠️ Research Methods:

– SATQuest employs randomized SAT-based problem generation and objective answer verification via PySAT, structuring problems along instance scale, problem type, and question format.

💬 Research Conclusions:

– The use of SATQuest identifies significant limitations in LLMs’ logical reasoning, particularly in generalization. Moreover, reinforcement fine-tuning with SATQuest rewards improves performance and generalization but highlights challenges in cross-format adaptation.

👉 Paper link: https://huggingface.co/papers/2509.00930

8. Manipulation as in Simulation: Enabling Accurate Geometry Perception in Robots

🔑 Keywords: Camera Depth Models, depth camera, sim-to-real gap, robotic manipulation, neural data engine

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The study aims to enhance depth camera accuracy and improve metric depth prediction to enable better generalization of robotic manipulation policies from simulation to real-world tasks.

🛠️ Research Methods:

– The researchers propose Camera Depth Models (CDMs) as a simple plugin for depth cameras, using a neural data engine to generate high-quality paired data modeling a depth camera’s noise pattern.

💬 Research Conclusions:

– CDMs achieve nearly simulation-level accuracy in depth prediction, effectively bridging the sim-to-real gap in manipulation tasks. Policy trained on raw simulated depth generalizes seamlessly to real-world robots without fine-tuning, even on challenging tasks.

👉 Paper link: https://huggingface.co/papers/2509.02530