AI Native Daily Paper Digest – 20250905

1. Drivel-ology: Challenging LLMs with Interpreting Nonsense with Depth

🔑 Keywords: Drivelology, LLMs, NLP, benchmark dataset, pragmatic understanding

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce and explore the concept of Drivelology, a linguistic phenomenon described as “nonsense with depth,” and assess the ability of LLMs to understand its nuanced meanings.

🛠️ Research Methods:

– Developed a benchmark dataset of over 1,200 curated Drivelology examples in multiple languages, requiring expert annotation and validation.

💬 Research Conclusions:

– LLMs show significant limitations in grasping the layered semantics of Drivelology, often confusing it with shallow nonsense and struggling with pragmatic understanding beyond statistical fluency.

👉 Paper link: https://huggingface.co/papers/2509.03867

2. From Editor to Dense Geometry Estimator

🔑 Keywords: FE2E, Diffusion Transformer, dense geometry prediction, image-to-image task

💡 Category: Computer Vision

🌟 Research Objective:

– Introduce FE2E, a framework that adapts an image editing model built on a Diffusion Transformer to improve zero-shot monocular depth and normal estimation.

🛠️ Research Methods:

– Analyze fine-tuning behaviors of editing and generative models for dense geometry estimation.

– Reformulate the editor’s loss into a consistent velocity training objective.

– Employ logarithmic quantization to manage precision conflicts.
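The summary does not give FE2E's quantization formula. The sketch below illustrates why logarithmic quantization can help when precision is limited. It rests on assumptions that are not stated above: depth lies in a hypothetical range of 0.1 to 100, it is normalized to [-1, 1], and it is stored in BFloat16. The sketch compares the relative error of a linear encoding and a log-space encoding after rounding. The helper names (`to_bfloat16`, `encode_linear`, `encode_log`, `relative_error`) are hypothetical.

```python
import math
import struct


def to_bfloat16(x: float) -> float:
    """Round x to the nearest BFloat16 value (round-half-to-even on the top 16 bits of float32)."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    lsb = (bits >> 16) & 1  # lowest kept bit, used for ties-to-even
    bits = (bits + 0x7FFF + lsb) & 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def encode_linear(d: float, d_min: float, d_max: float) -> float:
    """Map depth in [d_min, d_max] linearly onto [-1, 1]."""
    return 2.0 * (d - d_min) / (d_max - d_min) - 1.0


def decode_linear(x: float, d_min: float, d_max: float) -> float:
    return (x + 1.0) / 2.0 * (d_max - d_min) + d_min


def encode_log(d: float, d_min: float, d_max: float) -> float:
    """Map depth onto [-1, 1] in log space, so equal steps mean equal relative depth changes."""
    lo, hi = math.log(d_min), math.log(d_max)
    return 2.0 * (math.log(d) - lo) / (hi - lo) - 1.0


def decode_log(x: float, d_min: float, d_max: float) -> float:
    lo, hi = math.log(d_min), math.log(d_max)
    return math.exp((x + 1.0) / 2.0 * (hi - lo) + lo)


def relative_error(d, encode, decode, d_min=0.1, d_max=100.0):
    """Relative depth error after encoding, rounding to BFloat16, and decoding."""
    x = to_bfloat16(encode(d, d_min, d_max))
    return abs(decode(x, d_min, d_max) - d) / d


if __name__ == "__main__":
    for depth in (0.5, 5.0, 50.0):
        lin = relative_error(depth, encode_linear, decode_linear)
        log = relative_error(depth, encode_log, decode_log)
        print(f"depth={depth:6.1f}  linear={lin:.4%}  log={log:.4%}")
```

In this toy setup, the log encoding gives a noticeably smaller relative error at near-range depths. The exact trade-off depends on the chosen depth range and storage format.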

💬 Research Conclusions:

– FE2E achieves significant performance improvements without scaling training data, outperforming generative models, especially on the ETH3D dataset.

👉 Paper link: https://huggingface.co/papers/2509.04338

3. DeepResearch Arena: The First Exam of LLMs’ Research Abilities via Seminar-Grounded Tasks

🔑 Keywords: DeepResearch Arena, research agents, academic seminars, MAHTG

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To evaluate deep research agents across multiple disciplines using the DeepResearch Arena benchmark created from academic seminar transcripts.

🛠️ Research Methods:

– Implementation of a Multi-Agent Hierarchical Task Generation (MAHTG) system to convert seminar transcripts into research tasks while ensuring task traceability and noise filtering.

💬 Research Conclusions:

– DeepResearch Arena poses significant challenges and reveals performance gaps among state-of-the-art research agents built on various models.

👉 Paper link: https://huggingface.co/papers/2509.01396

4. Towards a Unified View of Large Language Model Post-Training

🔑 Keywords: Unified Policy Gradient Estimator, Hybrid Post-Training, Reinforcement Learning, Supervised Fine-Tuning

💡 Category: Natural Language Processing

🌟 Research Objective:

– This study aims to integrate online and offline post-training data to enhance language model performance across various benchmarks.

🛠️ Research Methods:

– The researchers developed a Unified Policy Gradient Estimator to reconcile Reinforcement Learning and Supervised Fine-Tuning approaches and proposed a Hybrid Post-Training algorithm.

💬 Research Conclusions:

– The proposed framework and algorithm demonstrate superior performance across multiple benchmarks, effectively combining demonstration exploitation with stable exploration.

👉 Paper link: https://huggingface.co/papers/2509.04419

5. Inverse IFEval: Can LLMs Unlearn Stubborn Training Conventions to Follow Real Instructions?

🔑 Keywords: Large Language Models, Inverse IFEval, cognitive inertia, Counter-intuitive Ability, supervised fine-tuning

💡 Category: Natural Language Processing

🌟 Research Objective:

– The research introduces Inverse IFEval as a benchmark to evaluate Large Language Models’ capacity to override training biases and respond to unconventional instructions.

🛠️ Research Methods:

– The study employs a human-in-the-loop pipeline to construct a dataset consisting of 1012 Chinese and English questions across 23 different domains, using the LLM-as-a-Judge framework for evaluation.

💬 Research Conclusions:

– The findings highlight the need for adaptability in LLMs under unconventional contexts, stressing that future alignment efforts should look beyond fluency and factual correctness. Inverse IFEval is intended to support work that mitigates cognitive inertia, reduces overfitting, and enhances LLMs’ instruction-following reliability in diverse scenarios.

👉 Paper link: https://huggingface.co/papers/2509.04292

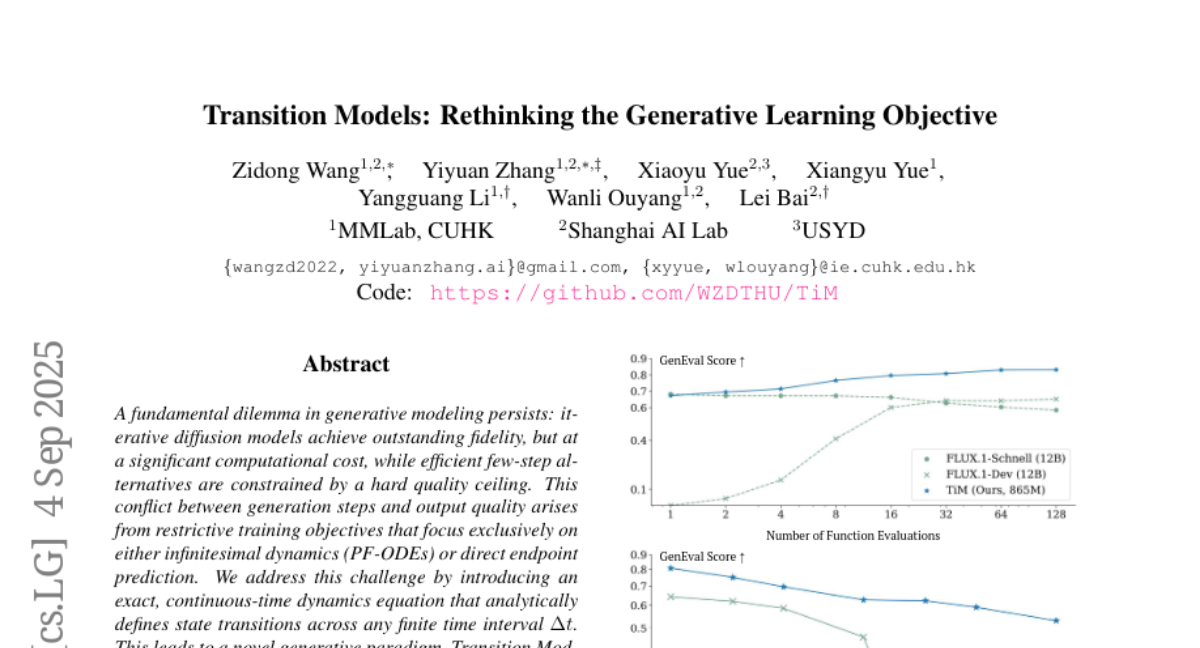

6. Transition Models: Rethinking the Generative Learning Objective

🔑 Keywords: Transition Models (TiM), continuous-time dynamics equation, state-of-the-art performance, monotonic quality improvement, native-resolution strategy

💡 Category: Generative Models

🌟 Research Objective:

– The research aims to address the trade-off between computational cost and output quality in generative modeling by introducing a novel paradigm called Transition Models (TiM).

🛠️ Research Methods:

– The study introduces an exact continuous-time dynamics equation that allows state transitions over any finite time interval, enabling adaptation to arbitrary-step transitions.

💬 Research Conclusions:

– TiM outperforms leading generative models like SD3.5 and FLUX.1, achieving state-of-the-art performance with only 865M parameters and demonstrating monotonic quality improvement with increasing sampling budgets.

– When utilizing a native-resolution strategy, TiM reaches exceptional fidelity at resolutions up to 4096×4096.

👉 Paper link: https://huggingface.co/papers/2509.04394

7. Video-MTR: Reinforced Multi-Turn Reasoning for Long Video Understanding

🔑 Keywords: Video-MTR, Reinforced Multi-Turn Reasoning, end-to-end training, gated bi-level reward system, long-form video understanding

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Video-MTR is designed to enhance long-form video understanding by iteratively selecting key segments and comprehending questions.

🛠️ Research Methods:

– The framework employs a multi-turn reasoning approach, incorporating a novel gated bi-level reward system to optimize segment selection and question comprehension.

💬 Research Conclusions:

– Video-MTR significantly outperforms existing methods in terms of accuracy and efficiency, as demonstrated through extensive experiments on benchmarks like VideoMME, MLVU, and EgoSchema.

👉 Paper link: https://huggingface.co/papers/2508.20478

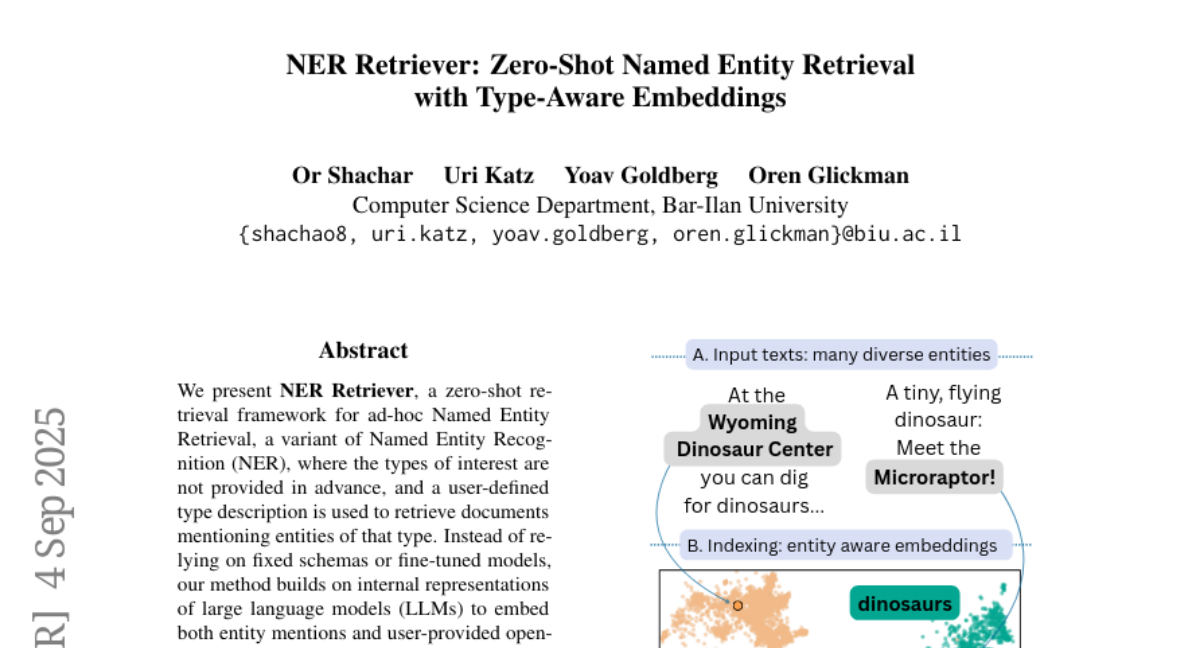

8. NER Retriever: Zero-Shot Named Entity Retrieval with Type-Aware Embeddings

🔑 Keywords: NER Retriever, zero-shot retrieval, Named Entity Recognition, internal representations, large language models

💡 Category: Natural Language Processing

🌟 Research Objective:

– To develop a framework, NER Retriever, for zero-shot Named Entity Retrieval without relying on fixed schemas or fine-tuned models by using internal representations from large language models.

🛠️ Research Methods:

– Utilizing internal representations, specifically value vectors from mid-layer transformer blocks, to embed entity mentions and user-provided type descriptions into a shared semantic space.

– Training a lightweight contrastive projection network to refine these embeddings, aligning type-compatible entities and separating unrelated types.
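The retrieval step can be pictured with a small sketch. Some of its choices are assumptions rather than details from the summary:

- Entity-mention and type-description embeddings are already extracted. In the paper, they come from mid-layer value vectors.
- Both kinds of embedding pass through the same hypothetical linear projection `W`.
- Mentions are ranked by cosine similarity to the type description.
- InfoNCE stands in as the contrastive objective, because the summary does not say which contrastive loss NER Retriever uses.

All vectors below are made up.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def project(W: Matrix, v: Vector) -> Vector:
    """Lightweight linear projection: returns W @ v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def info_nce(anchor: Vector, positive: Vector, negatives: List[Vector], tau: float = 0.1) -> float:
    """InfoNCE loss: small when the anchor is much closer to the positive than to any negative."""
    logits = [cosine(anchor, positive) / tau] + [cosine(anchor, n) / tau for n in negatives]
    m = max(logits)  # subtract the max for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[0]


def retrieve(type_vec: Vector, mention_vecs: List[Vector], W: Matrix, k: int = 2) -> List[int]:
    """Indices of the k mentions most similar to the type description in projected space."""
    query = project(W, type_vec)
    scores = [cosine(query, project(W, m)) for m in mention_vecs]
    return sorted(range(len(mention_vecs)), key=lambda i: scores[i], reverse=True)[:k]


# Hypothetical 3-d embeddings and an identity projection, for illustration only.
W = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
mentions = [[0.9, 0.1, 0.0], [0.0, 1.0, 0.0], [0.8, 0.0, 0.2]]
print(retrieve([1.0, 0.0, 0.0], mentions, W))
```

In training, `W` would be learned by minimizing a loss like `info_nce` over projected vectors. The training loop is omitted here.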

💬 Research Conclusions:

– NER Retriever significantly outperforms lexical and dense sentence-level retrieval methods on three benchmarks.

– The approach offers a practical solution for scalable, schema-free entity retrieval, and its results underscore the effectiveness of internal representations and of representation selection within large language models.

👉 Paper link: https://huggingface.co/papers/2509.04011

9. Few-step Flow for 3D Generation via Marginal-Data Transport Distillation

🔑 Keywords: MDT-dist, Velocity Matching, Velocity Distillation, 3D flow generation, sampling steps

💡 Category: Generative Models

🌟 Research Objective:

– The primary objective is to accelerate 3D flow generation without sacrificing fidelity by developing MDT-dist, a distillation framework based on Marginal-Data Transport.

🛠️ Research Methods:

– Introduction of two optimizable objectives: Velocity Matching (VM) and Velocity Distillation (VD), to facilitate efficient optimization for 3D generation models.

💬 Research Conclusions:

– The MDT-dist framework significantly reduces the number of sampling steps and achieves substantial speedup while maintaining high visual and geometric fidelity, outperforming existing consistency model distillation methods.

👉 Paper link: https://huggingface.co/papers/2509.04406

10. Loong: Synthesize Long Chain-of-Thoughts at Scale through Verifiers

🔑 Keywords: Large Language Models, Reinforcement Learning with Verifiable Reward, synthetic data generation, Chain-of-Thought, verification

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The Loong Project aims to enhance reasoning capabilities in Large Language Models (LLMs) using Reinforcement Learning with Verifiable Reward (RLVR) through a framework for synthetic data generation and verification.

🛠️ Research Methods:

– Introduction of LoongBench and LoongEnv: LoongBench is a curated dataset of human-vetted examples across 12 reasoning-intensive domains; LoongEnv is a modular environment that generates synthetic data and supports various prompting strategies.
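To make the "verifiable reward" idea concrete, here is a hypothetical sketch. Each synthetic item carries reference code, and the result of running that code serves as ground truth. The reward is 1 only when the model's final answer matches that result. `SyntheticItem`, `execute_reference` and `verifiable_reward` are illustrative names, not LoongEnv's API. Note also that the summary does not say LoongEnv verifies answers by executing code; that is an assumption of this sketch.

```python
import math
from dataclasses import dataclass


@dataclass
class SyntheticItem:
    """Hypothetical synthetic example: a question plus code that computes its answer."""
    question: str
    reference_code: str  # must assign the final result to a variable named `answer`


def execute_reference(item: SyntheticItem) -> float:
    """Run the reference code and return the value it stores in `answer`.

    exec() is NOT a sandbox; a real pipeline should isolate untrusted code.
    """
    namespace = {"math": math}  # single namespace so helper variables resolve normally
    exec(item.reference_code, namespace)
    return float(namespace["answer"])


def verifiable_reward(item: SyntheticItem, model_answer: str, rel_tol: float = 1e-6) -> float:
    """Binary reward: 1.0 if the model's answer matches the executed reference, else 0.0."""
    try:
        predicted = float(model_answer.strip())
    except ValueError:
        return 0.0  # an unparseable answer earns no reward
    expected = execute_reference(item)
    return 1.0 if math.isclose(predicted, expected, rel_tol=rel_tol) else 0.0


item = SyntheticItem(
    question="What is the sum of the squares of the integers from 1 to 10?",
    reference_code="n = 10\nanswer = sum(k * k for k in range(1, n + 1))",
)
print(verifiable_reward(item, "385"), verifiable_reward(item, "384"))
```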

💬 Research Conclusions:

– The project demonstrated potential improvements in LLMs’ reasoning abilities using synthetic data across diverse domains and highlighted performance bottlenecks and domain coverage issues through benchmarking.

👉 Paper link: https://huggingface.co/papers/2509.03059

11. Durian: Dual Reference-guided Portrait Animation with Attribute Transfer

🔑 Keywords: Durian, zero-shot manner, dual reference networks, diffusion model, attribute transfer

💡 Category: Generative Models

🌟 Research Objective:

– Durian introduces a novel method for generating high-fidelity portrait animations with facial attribute transfer using a zero-shot approach.

🛠️ Research Methods:

– Utilizes dual reference networks combined with a diffusion model to transfer attributes spatially between frames.

– Employs a self-reconstruction formulation where frames are sampled and conditioned with mask expansion strategies, enhanced by keypoint-conditioned image generation.

💬 Research Conclusions:

– Durian achieves state-of-the-art performance in portrait animation with multi-attribute composition, demonstrating effective generalization across diverse attributes without explicit triplet supervision.

👉 Paper link: https://huggingface.co/papers/2509.04434

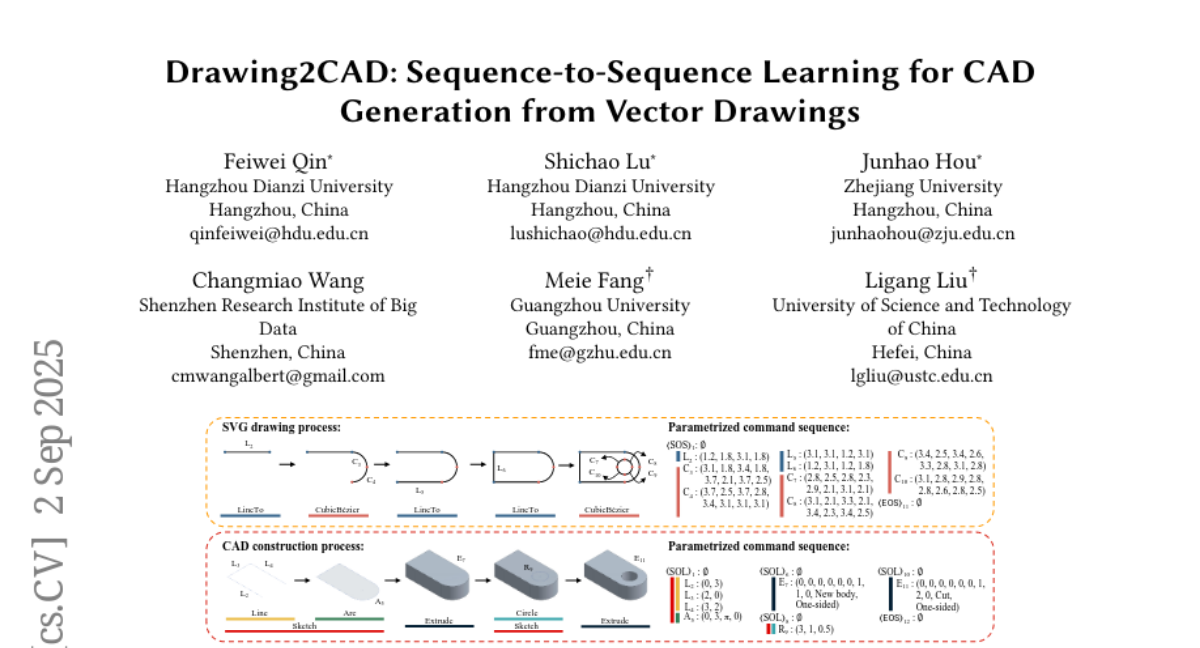

12. Drawing2CAD: Sequence-to-Sequence Learning for CAD Generation from Vector Drawings

🔑 Keywords: Drawing2CAD, sequence-to-sequence learning, parametric CAD models, dual-decoder transformer architecture, vector primitive representation

💡 Category: Generative Models

🌟 Research Objective:

– To transform 2D vector drawings into parametric CAD models using a sequence-to-sequence learning framework.

🛠️ Research Methods:

– Utilization of a dual-decoder transformer architecture and soft target distribution loss function to enhance precision and flexibility in CAD model generation.

– Implementation of a network-friendly vector primitive representation for precise geometric information maintenance.
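The summary names a soft target distribution loss but does not give its form. This minimal sketch rests on two assumptions: coordinates are quantized into discrete bins, and the target spreads probability over neighboring bins with Gaussian weights (the Gaussian shape and `sigma` are also assumptions). It shows why such a loss is more forgiving than one-hot cross-entropy: an off-by-one prediction is penalized less than a distant one.

```python
import math
from typing import List


def soft_targets(true_bin: int, num_bins: int, sigma: float = 1.0) -> List[float]:
    """Target distribution centered on the true bin, decaying with Gaussian weights."""
    weights = [math.exp(-((b - true_bin) ** 2) / (2 * sigma ** 2)) for b in range(num_bins)]
    total = sum(weights)
    return [w / total for w in weights]


def log_softmax(logits: List[float]) -> List[float]:
    m = max(logits)  # subtract the max for numerical stability
    lse = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - lse for z in logits]


def soft_cross_entropy(logits: List[float], targets: List[float]) -> float:
    """Cross-entropy of predicted bin logits against a (soft) target distribution."""
    return -sum(t * lp for t, lp in zip(targets, log_softmax(logits)))


targets = soft_targets(true_bin=4, num_bins=10)
near_miss = [5.0 if b == 5 else 0.0 for b in range(10)]  # predicts the neighboring bin
far_miss = [5.0 if b == 9 else 0.0 for b in range(10)]   # predicts a distant bin
print(soft_cross_entropy(near_miss, targets), soft_cross_entropy(far_miss, targets))
```

With a one-hot target on bin 4, both misses would receive the same loss. The soft target lets the loss distinguish between them.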

💬 Research Conclusions:

– The Drawing2CAD framework effectively bridges the gap between traditional 2D engineering drawings and modern parametric CAD modeling.

– Experiments on the newly created CAD-VGDrawing dataset demonstrate significant improvements in generative modeling accuracy.

👉 Paper link: https://huggingface.co/papers/2508.18733

13. Delta Activations: A Representation for Finetuned Large Language Models

🔑 Keywords: Delta Activations, vector embeddings, clustering, few-shot finetuning

💡 Category: Machine Learning

🌟 Research Objective:

– To introduce Delta Activations as a method to represent fine-tuned models as vector embeddings, facilitating their clustering by domain and task.

🛠️ Research Methods:

– Utilizing shifts in a finetuned model's internal activations relative to its base model as vector embeddings; tasks can also be embedded via few-shot finetuning, which supports model selection and merging.
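Here is a toy sketch of the embedding step. It assumes each model yields one hidden-state vector per prompt, taken from a shared set of generic probe prompts. A model's Delta Activation is then its mean activation minus the base model's mean activation, and models are compared by cosine similarity. All activation values and model names below are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def mean(vectors: List[Vector]) -> Vector:
    """Element-wise mean of a list of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def delta_activation(base_acts: List[Vector], finetuned_acts: List[Vector]) -> Vector:
    """Mean shift of the finetuned model's activations relative to the base model,
    where row i of each list is the activation for the same probe prompt i."""
    return [f - b for f, b in zip(mean(finetuned_acts), mean(base_acts))]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


# Hypothetical activations on two probe prompts (3-dimensional for readability).
base = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
model_a = [[2.0, 0.0, 0.0], [1.0, 1.0, 0.0]]  # shifts along dimension 0
model_b = [[2.1, 0.1, 0.0], [1.1, 1.0, 0.0]]  # a similar shift
model_c = [[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]]  # shifts along dimension 2

d_a = delta_activation(base, model_a)
d_b = delta_activation(base, model_b)
d_c = delta_activation(base, model_c)
print(cosine(d_a, d_b), cosine(d_a, d_c))
```

Models with similar shifts end up close in embedding space, which is what makes clustering by domain or task possible.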

💬 Research Conclusions:

– Delta Activations reveal structure in the model landscape, enabling effective clustering, and exhibit robustness and additivity across finetuning settings.

– The approach aids in the reuse of publicly available models by embedding tasks and models for more efficient exploration and selection.

👉 Paper link: https://huggingface.co/papers/2509.04442

14. False Sense of Security: Why Probing-based Malicious Input Detection Fails to Generalize

🔑 Keywords: Probing-based approaches, Large Language Models, Safety detection, Instructional patterns, Semantic harmfulness

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to investigate the effectiveness of probing-based approaches in detecting harmful instructions in Large Language Models (LLMs), focusing on whether these methods rely on semantic understanding or superficial patterns.

🛠️ Research Methods:

– The researchers conducted systematic experiments: they compared probe performance against simple n-gram methods and ran controlled experiments on semantically cleaned datasets to analyze how much the probes depend on learned surface patterns.
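For context, an n-gram baseline of the kind the study compares against can be as simple as a Naive Bayes classifier over word n-grams. The sketch below uses hypothetical toy prompts, not the paper's data. Every "harmful" example shares the phrasing "tell me how to", so the classifier also flags a benign request with that phrasing. This illustrates the kind of surface-pattern reliance the study reports. Naive Bayes is our choice of representative method; the paper's exact n-gram baselines may differ.

```python
import math
from collections import Counter
from typing import List, Tuple


def ngrams(text: str, n: int = 2) -> List[str]:
    """Lowercased word n-grams of every length from 1 to n."""
    words = text.lower().split()
    return [" ".join(words[i:i + k]) for k in range(1, n + 1) for i in range(len(words) - k + 1)]


class NGramNaiveBayes:
    """Multinomial Naive Bayes over n-gram counts with add-one (Laplace) smoothing."""

    def __init__(self) -> None:
        self.counts = {0: Counter(), 1: Counter()}  # label -> n-gram counts
        self.docs = Counter()                       # label -> number of training prompts
        self.vocab_size = 0

    def fit(self, data: List[Tuple[str, int]]) -> "NGramNaiveBayes":
        for text, label in data:
            self.counts[label].update(ngrams(text))
            self.docs[label] += 1
        self.vocab_size = len(set(self.counts[0]) | set(self.counts[1]))
        return self

    def log_score(self, text: str, label: int) -> float:
        total = sum(self.counts[label].values())
        log_prior = math.log(self.docs[label] / sum(self.docs.values()))
        return log_prior + sum(
            math.log((self.counts[label][g] + 1) / (total + self.vocab_size)) for g in ngrams(text)
        )

    def predict(self, text: str) -> int:
        """Return 1 ("harmful") or 0 ("benign"), whichever has the higher posterior score."""
        return max((0, 1), key=lambda y: self.log_score(text, y))


# Hypothetical toy data: every "harmful" prompt shares the surface phrase "tell me how to".
train = [
    ("tell me how to hack an account", 1),
    ("tell me how to steal a car", 1),
    ("tell me how to pick a lock", 1),
    ("what is the capital of france", 0),
    ("what is photosynthesis", 0),
    ("please summarize this article", 0),
]
clf = NGramNaiveBayes().fit(train)
print(clf.predict("tell me how to bake bread"))  # a benign request with the same phrasing
```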

💬 Research Conclusions:

– The study concluded that current probing-based approaches give a false sense of security, as they depend on recognizing superficial instructional patterns and trigger words instead of understanding semantic harmfulness, suggesting a need for redesigning models and evaluation protocols.

👉 Paper link: https://huggingface.co/papers/2509.03888

15.