AI Native Daily Paper Digest – 20250912

1. VLA-Adapter: An Effective Paradigm for Tiny-Scale Vision-Language-Action Model

🔑 Keywords: VLA-Adapter, Bridge Attention, lightweight Policy module, state-of-the-art performance, fast inference speed

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The research aims to reduce VLA models’ reliance on large-scale VLMs and extensive pre-training by introducing the VLA-Adapter, which optimizes the bridge between vision-language representations and actions.

🛠️ Research Methods:

– The introduction of a lightweight Policy module with Bridge Attention to inject optimal conditions into the action space, allowing for high performance without the need for pre-training on robotic data.

💬 Research Conclusions:

– The VLA-Adapter achieves state-of-the-art performance and fast inference speed while minimizing computational resources, and effectively facilitates training on consumer-grade GPUs.

👉 Paper link: https://huggingface.co/papers/2509.09372

2. HuMo: Human-Centric Video Generation via Collaborative Multi-Modal Conditioning

🔑 Keywords: Human-Centric Video Generation, multimodal inputs, subject preservation, high-quality dataset, audio-visual sync

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Develop a unified framework, HuMo, for Human-Centric Video Generation (HCVG) to address challenges in multimodal control.

🛠️ Research Methods:

– Construct a high-quality dataset with diverse and paired text, reference images, and audio.

– Utilize a two-stage progressive multimodal training paradigm with task-specific strategies for subject preservation and audio-visual sync.

💬 Research Conclusions:

– HuMo surpasses specialized state-of-the-art methods and establishes a unified framework for collaborative multimodal-conditioned HCVG.

👉 Paper link: https://huggingface.co/papers/2509.08519

3. SimpleVLA-RL: Scaling VLA Training via Reinforcement Learning

🔑 Keywords: SimpleVLA-RL, Reinforcement Learning, Vision-Language-Action, trajectory sampling, state-of-the-art performance

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The primary aim of the research is to enhance long-horizon action planning in Vision-Language-Action (VLA) models using an efficient RL framework called SimpleVLA-RL.

🛠️ Research Methods:

– The study utilizes techniques such as VLA-specific trajectory sampling, scalable parallelization, and optimized loss computation to improve the performance of VLA models.

💬 Research Conclusions:

– SimpleVLA-RL not only achieves state-of-the-art performance but also outperforms existing models on tasks involving distribution shift, reduces dependency on large-scale data, and discovers novel patterns such as “pushcut” during training.

👉 Paper link: https://huggingface.co/papers/2509.09674

4. EchoX: Towards Mitigating Acoustic-Semantic Gap via Echo Training for Speech-to-Speech LLMs

🔑 Keywords: EchoX, speech-to-speech models, acoustic-semantic gap, semantic representations, reasoning abilities

💡 Category: Natural Language Processing

🌟 Research Objective:

– To address the acoustic-semantic gap in speech-to-speech large language models and improve reasoning capabilities.

🛠️ Research Methods:

– Introduce EchoX, a model that uses semantic representations and dynamically generates speech training targets to integrate both acoustic and semantic learning.

💬 Research Conclusions:

– EchoX achieves advanced performance on knowledge-based benchmarks, demonstrating strong reasoning abilities with approximately six thousand hours of training data.

👉 Paper link: https://huggingface.co/papers/2509.09174

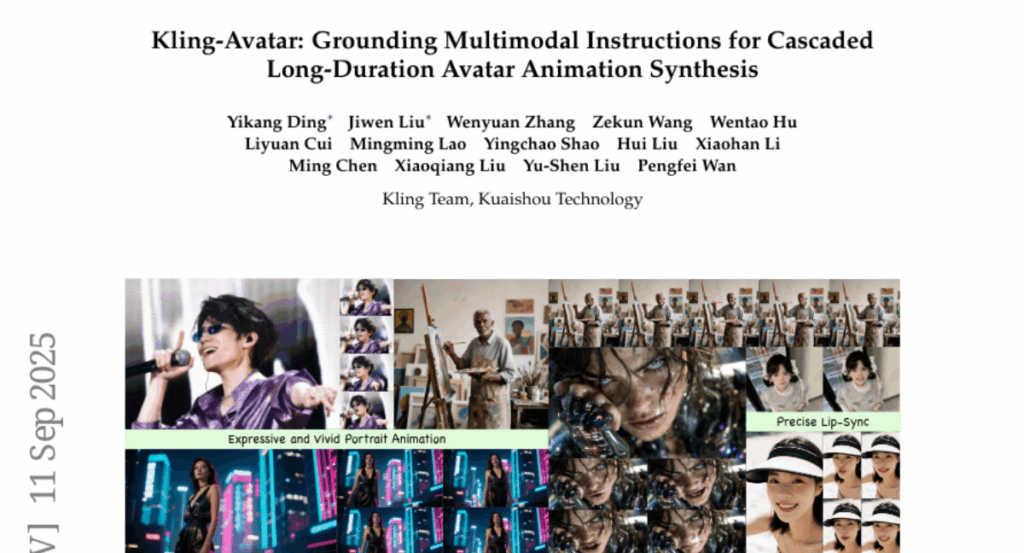

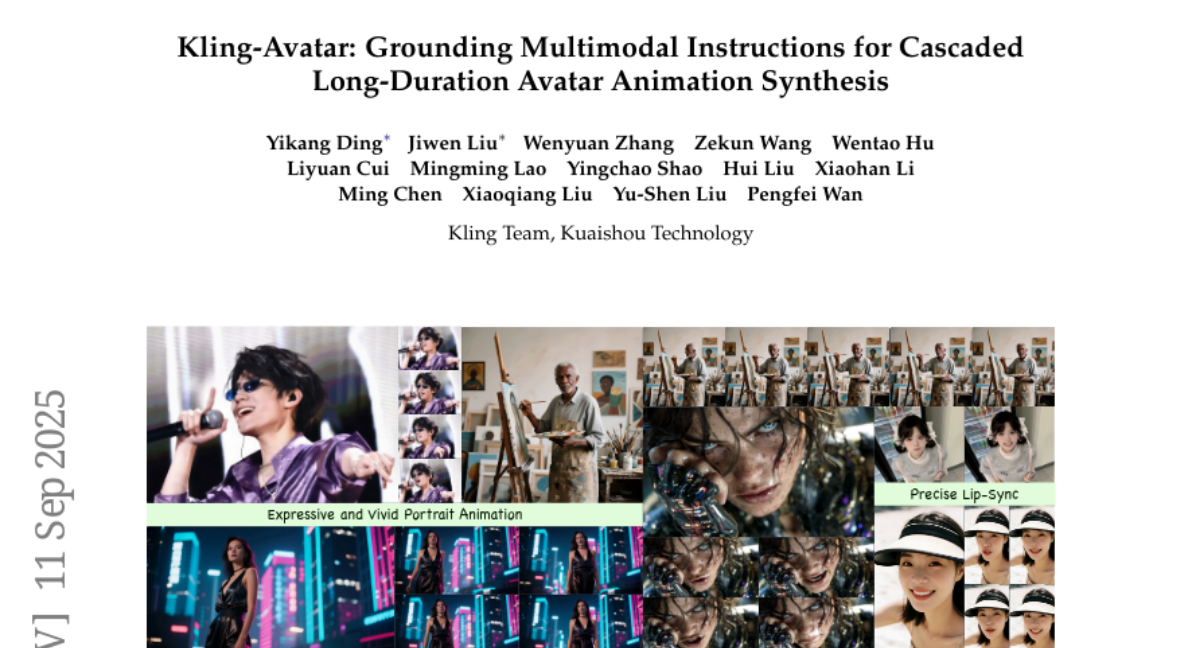

5. Kling-Avatar: Grounding Multimodal Instructions for Cascaded Long-Duration Avatar Animation Synthesis

🔑 Keywords: AI Native, multimodal instruction understanding, photorealistic portrait generation, audio-driven avatar, Kling-Avatar

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance audio-driven avatar video generation by integrating multimodal instruction understanding with photorealistic portrait generation for improved semantic coherence and expressiveness.

🛠️ Research Methods:

– Implementation of a two-stage pipeline involving a multimodal large language model to create blueprint videos followed by generating sub-clips using a first-last frame strategy for detailed and intent-preserving outputs.

💬 Research Conclusions:

– The Kling-Avatar framework successfully generates vivid, long-duration videos with superior lip synchronization, emotion expressiveness, and cross-domain generalization, setting a new benchmark in high-fidelity audio-driven avatar synthesis.

👉 Paper link: https://huggingface.co/papers/2509.09595

6. Harnessing Uncertainty: Entropy-Modulated Policy Gradients for Long-Horizon LLM Agents

🔑 Keywords: Entropy-Modulated Policy Gradients, EMPG, Large Language Models, Policy Gradients, Reinforcement Learning

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to address the learning dynamics issues in long-horizon tasks conducted by agents based on Large Language Models by recalibrating policy gradients to improve performance.

🛠️ Research Methods:

– The introduction of Entropy-Modulated Policy Gradients (EMPG) framework that recalibrates learning signals based on the step-wise uncertainty and task outcome to enhance efficiency and stability.

💬 Research Conclusions:

– EMPG shows significant performance gains in challenging tasks like WebShop, ALFWorld, and Deep Search compared to existing strong policy gradient baselines, demonstrating its effectiveness in overcoming sparse reward challenges.

👉 Paper link: https://huggingface.co/papers/2509.09265

7. FLUX-Reason-6M & PRISM-Bench: A Million-Scale Text-to-Image Reasoning Dataset and Comprehensive Benchmark

🔑 Keywords: Reasoning-focused datasets, PRISM-Bench, FLUX-Reason-6M, AI Native, text-to-image models

💡 Category: Generative Models

🌟 Research Objective:

– To address the performance gap in open-source text-to-image models by introducing large-scale, reasoning-focused datasets and comprehensive evaluation benchmarks.

🛠️ Research Methods:

– Creation of FLUX-Reason-6M with 6 million images and 20 million bilingual descriptions, organized by key characteristics.

– Development of PRISM-Bench with seven evaluation tracks, including a Long Text challenge for nuanced human-aligned assessment.

💬 Research Conclusions:

– The study identifies critical performance gaps in leading models by evaluating them on PRISM-Bench.

– The release of datasets and benchmarks aims to accelerate reasoning-oriented text-to-image generation.

👉 Paper link: https://huggingface.co/papers/2509.09680

8. Can Understanding and Generation Truly Benefit Together — or Just Coexist?

🔑 Keywords: UAE, reinforcement learning, image-to-text, text-to-image, unified multimodal learning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance mutual understanding and generation fidelity between image-to-text and text-to-image processes using a novel framework UAE with reinforcement learning.

🛠️ Research Methods:

– Implemented a three-stage process using reinforcement learning in the UAE framework, including pre-training the decoder with long-context image captions and introducing a new benchmark called Unified-Bench to evaluate unification of multimodal models.

💬 Research Conclusions:

– The UAE framework facilitates the encoder to produce more descriptive captions and improves the decoder’s reconstruction quality, resulting in a higher fidelity understanding and generation process.

👉 Paper link: https://huggingface.co/papers/2509.09666

9. MachineLearningLM: Continued Pretraining Language Models on Millions of Synthetic Tabular Prediction Tasks Scales In-Context ML

🔑 Keywords: MachineLearningLM, in-context learning, continued-pretraining, random-forest teacher, many-shot scaling law

💡 Category: Machine Learning

🌟 Research Objective:

– Enhance a general-purpose LLM with robust in-context machine learning capabilities using continued pretraining with synthesized ML tasks.

🛠️ Research Methods:

– Implemented a portable continued-pretraining framework synthesizing ML tasks from structural causal models, using a random-forest teacher to distill decision strategies for robustness.

💬 Research Conclusions:

– Achieved superior performance over strong LLM baselines with approximately 15% improvement in out-of-distribution tabular classification across multiple domains.

– Demonstrated striking many-shot scaling law, achieving random-forest-level accuracy without task-specific training and preserved general chat capabilities.

👉 Paper link: https://huggingface.co/papers/2509.06806

10. AU-Harness: An Open-Source Toolkit for Holistic Evaluation of Audio LLMs

🔑 Keywords: Large Audio Language Models, AU-Harness, batch processing, standardized prompting, temporal understanding, Spoken Language Reasoning

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To develop and present AU-Harness, an efficient evaluation framework designed to enhance the assessment of Large Audio Language Models by addressing challenges in speed, reproducibility, and task coverage.

🛠️ Research Methods:

– Employed optimized batch processing and parallel execution to achieve significant speedup.

– Introduced standardized prompting protocols and flexible configurations for fair model comparison.

– Added new evaluation categories: LLM-Adaptive Diarization and Spoken Language Reasoning.

💬 Research Conclusions:

– AU-Harness reveals significant gaps in current LALMs, especially in temporal understanding and complex spoken language reasoning tasks.

– Identified a lack of standardization in instruction modality across audio benchmarks causing notable performance variations.

– Provides practical tools and insights into model limitations, facilitating systematic LALM development.

👉 Paper link: https://huggingface.co/papers/2509.08031

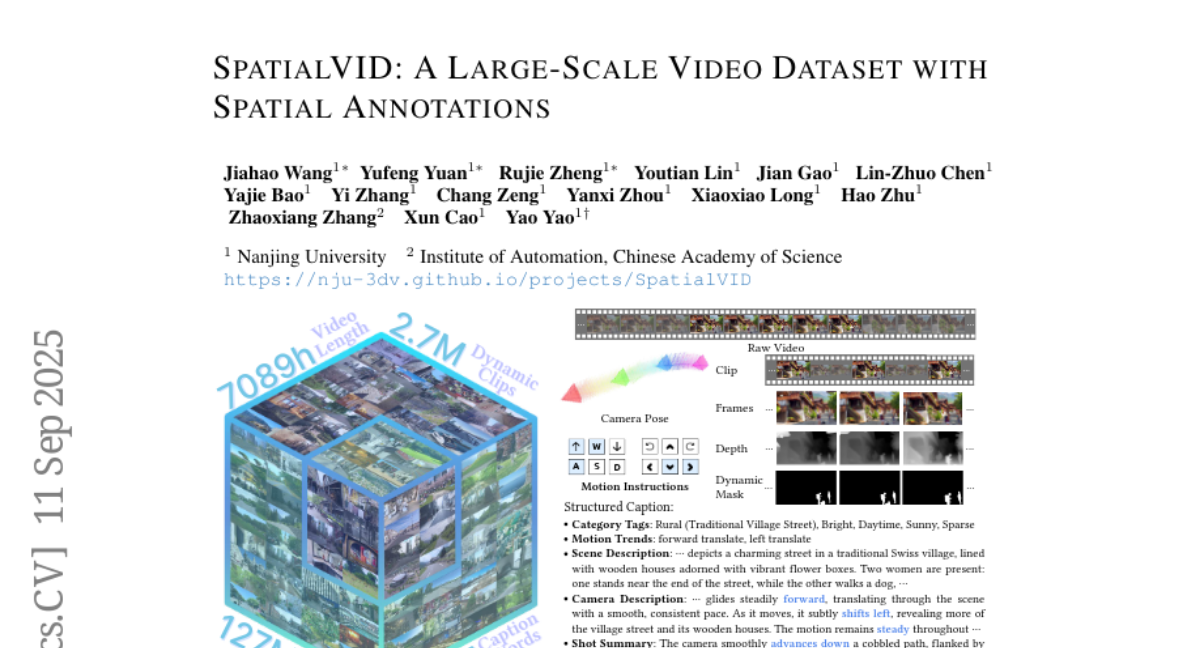

11. SpatialVID: A Large-Scale Video Dataset with Spatial Annotations

🔑 Keywords: SpatialVID, 3D annotations, camera poses, video and 3D vision, data scalability

💡 Category: Computer Vision

🌟 Research Objective:

– To address the limitation of current models in spatial intelligence due to the scarcity of large-scale, high-quality training data, specifically for real-world dynamic scenes.

🛠️ Research Methods:

– Collection and processing of over 21,000 hours of raw video into 2.7 million clips with diverse scenes and dense 3D annotations, enhanced by a comprehensive annotation pipeline providing spatial and semantic information.

💬 Research Conclusions:

– SpatialVID’s rich and diverse dataset significantly improves model generalization and performance, making it a valuable asset for video and 3D vision research.

👉 Paper link: https://huggingface.co/papers/2509.09676

12. mmBERT: A Modern Multilingual Encoder with Annealed Language Learning

🔑 Keywords: mmBERT, Encoder-only Language Model, Multilingual Text, Low-resource Languages, Classification

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study introduces mmBERT, an encoder-only language model pretrained on multilingual text to enhance classification and retrieval tasks.

🛠️ Research Methods:

– Utilizes novel elements such as an inverse mask ratio schedule and inverse temperature sampling ratio, incorporating low-resource languages during a strategic phase.

💬 Research Conclusions:

– mmBERT significantly outperforms previous models on classification and retrieval tasks, especially benefiting from incorporating low-resource languages.

👉 Paper link: https://huggingface.co/papers/2509.06888

13. Visual Programmability: A Guide for Code-as-Thought in Chart Understanding

🔑 Keywords: VLMs, Code-as-Thought, Visual Programmability, reinforcement learning, dual-reward system

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– Enhance Vision-Language Models (VLMs) with an adaptive framework for improved chart understanding and reasoning capabilities by introducing Code-as-Thought (CaT) and Visual Programmability.

🛠️ Research Methods:

– Implementation of an adaptive framework where VLMs choose between code-based and direct visual reasoning, trained with a novel dual-reward system using reinforcement learning.

💬 Research Conclusions:

– Demonstrated strong and robust performance across diverse chart-understanding benchmarks, proving VLMs can dynamically select the optimal reasoning pathway for various tasks.

👉 Paper link: https://huggingface.co/papers/2509.09286

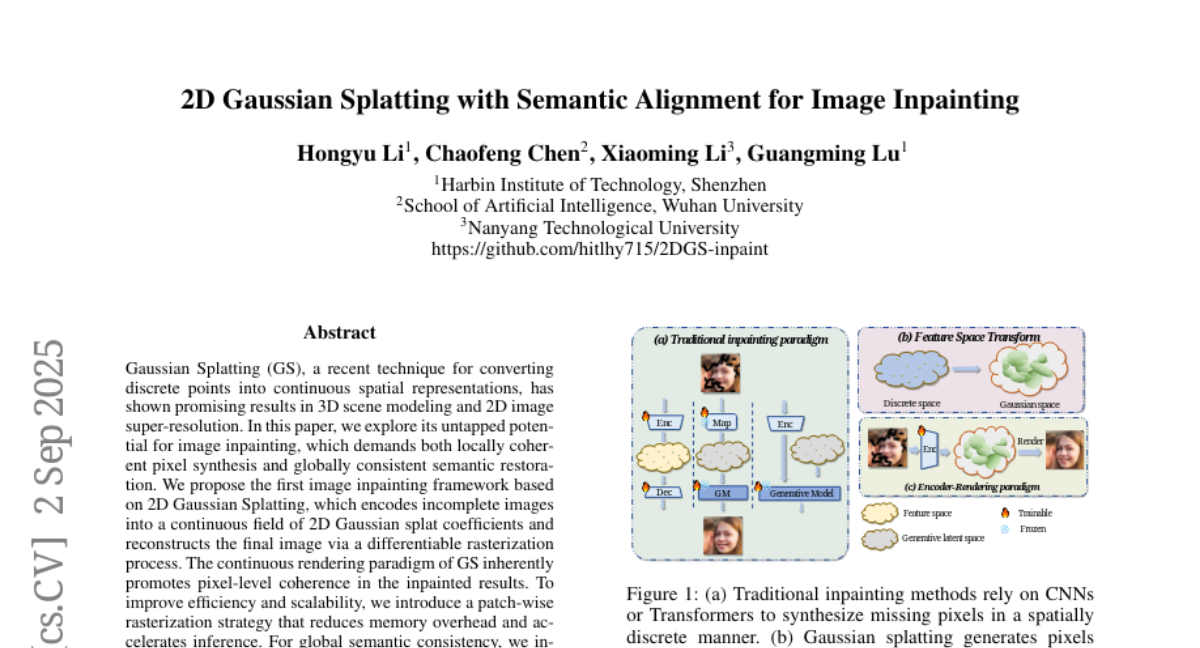

14. 2D Gaussian Splatting with Semantic Alignment for Image Inpainting

🔑 Keywords: Gaussian Splatting, image inpainting, DINO model, global semantic consistency

💡 Category: Computer Vision

🌟 Research Objective:

– The research aims to explore the potential of Gaussian Splatting for image inpainting, focusing on achieving local pixel coherence and global semantic consistency.

🛠️ Research Methods:

– The study proposes an image inpainting framework using 2D Gaussian Splatting, combining a continuous field representation with pretrained DINO model features.

– A patch-wise rasterization strategy is introduced to enhance efficiency by reducing memory overhead and speeding up inference.

💬 Research Conclusions:

– The proposed method demonstrates competitive performance in both quantitative metrics and perceptual quality, suggesting a promising new direction for utilizing Gaussian Splatting in 2D image processing.

👉 Paper link: https://huggingface.co/papers/2509.01964

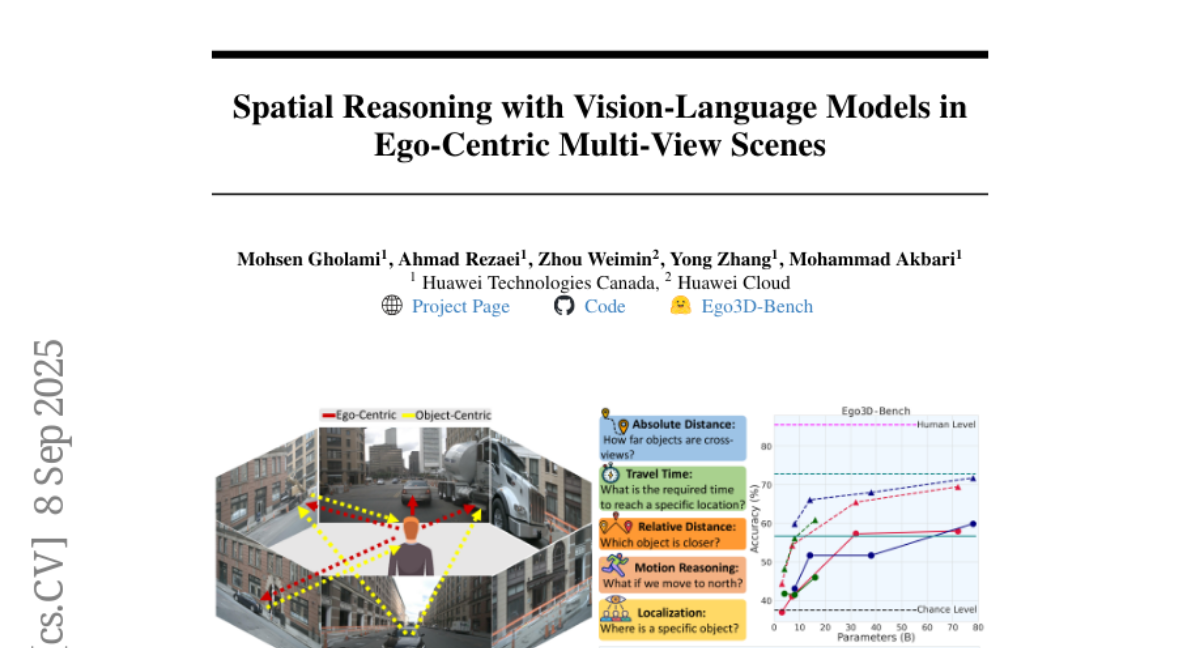

15. Spatial Reasoning with Vision-Language Models in Ego-Centric Multi-View Scenes

🔑 Keywords: Ego3D-Bench, Ego3D-VLM, Vision-Language Models, 3D spatial reasoning, ego-centric, multi-view

💡 Category: Computer Vision

🌟 Research Objective:

– The study focuses on evaluating and enhancing the 3D spatial reasoning abilities of Vision-Language Models (VLMs) using ego-centric, multi-view outdoor data.

🛠️ Research Methods:

– Ego3D-Bench benchmark is introduced, designed with over 8,600 QA pairs and used to assess 16 state-of-the-art VLMs.

– The post-training framework Ego3D-VLM is proposed to improve VLM performance in 3D spatial reasoning by generating cognitive maps.

💬 Research Conclusions:

– Present VLMs are not yet at human-level spatial understanding, evidenced by a notable performance gap in benchmarks.

– Ego3D-VLM significantly enhances performance, achieving a 12% improvement on multi-choice QA and a 56% improvement on absolute distance estimation.

👉 Paper link: https://huggingface.co/papers/2509.06266

16. Gradient-Attention Guided Dual-Masking Synergetic Framework for Robust Text-based Person Retrieval

🔑 Keywords: AI-Generated Summary, CLIP, person representation learning, global contrastive learning, GA-DMS

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Enhance CLIP for person representation learning by improving data quality and model architecture to achieve state-of-the-art performance.

🛠️ Research Methods:

– Developed a noise-resistant data construction pipeline leveraging MLLMs to filter and caption web-sourced images, creating a large-scale dataset named WebPerson.

– Introduced GA-DMS framework, which uses gradient-attention guided dual-masking to improve cross-modal alignment and enhance fine-grained semantic representation learning.

💬 Research Conclusions:

– GA-DMS achieves state-of-the-art performance on multiple benchmarks, demonstrating its efficacy in improving person representation learning.

👉 Paper link: https://huggingface.co/papers/2509.09118

17. OmniEVA: Embodied Versatile Planner via Task-Adaptive 3D-Grounded and Embodiment-aware Reasoning

🔑 Keywords: Embodied Intelligence, Multimodal Understanding, Reasoning, Task-Adaptive 3D Grounding, Embodiment-Aware Reasoning

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– OmniEVA aims to address spatial and embodiment gaps in multimodal large language models for embodied intelligence.

🛠️ Research Methods:

– Introduces a Task-Adaptive 3D Grounding mechanism and an Embodiment-Aware Reasoning framework to improve embodied reasoning and task planning.

💬 Research Conclusions:

– OmniEVA achieves state-of-the-art performance in general embodied reasoning and demonstrates strong capabilities across diverse tasks, as confirmed by evaluations on a suite of proposed embodied benchmarks.

👉 Paper link: https://huggingface.co/papers/2509.09332

18. The Choice of Divergence: A Neglected Key to Mitigating Diversity Collapse in Reinforcement Learning with Verifiable Reward

🔑 Keywords: DPH-RL, Pass@k, RLVR, divergence term, catastrophic forgetting

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To address Pass@k degradation and catastrophic forgetting in fine-tuning Large Language Models (LLMs) using Reinforcement Learning with Verifiable Reward (RLVR) by introducing the DPH-RL framework.

🛠️ Research Methods:

– Implementing a new framework called Diversity-Preserving Hybrid RL (DPH-RL) that employs mass-covering f-divergences, such as forward-KL and JS-divergence, as a rehearsal mechanism to retain knowledge.

💬 Research Conclusions:

– DPH-RL not only resolves Pass@k degradation but also improves Pass@1 and Pass@k performance in- and out-of-domain, showcasing its efficiency by utilizing generator functions for f-divergence computation with only initial policy sampling.

👉 Paper link: https://huggingface.co/papers/2509.07430

19. ObjectReact: Learning Object-Relative Control for Visual Navigation

🔑 Keywords: Object-relative, Visual navigation, AI-generated summary, WayObject Costmap, Scene graph

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Introduce a new object-relative control paradigm using a topometric map for better invariance and generalization in visual navigation.

🛠️ Research Methods:

– Utilize a relative 3D scene graph for topometric map representation and train a local controller called ObjectReact, conditioned on a high-level WayObject Costmap.

💬 Research Conclusions:

– The object-relative approach outperforms the image-relative counterpart, showing improved generalization and cross-embodiment deployment across various settings and tasks.

👉 Paper link: https://huggingface.co/papers/2509.09594

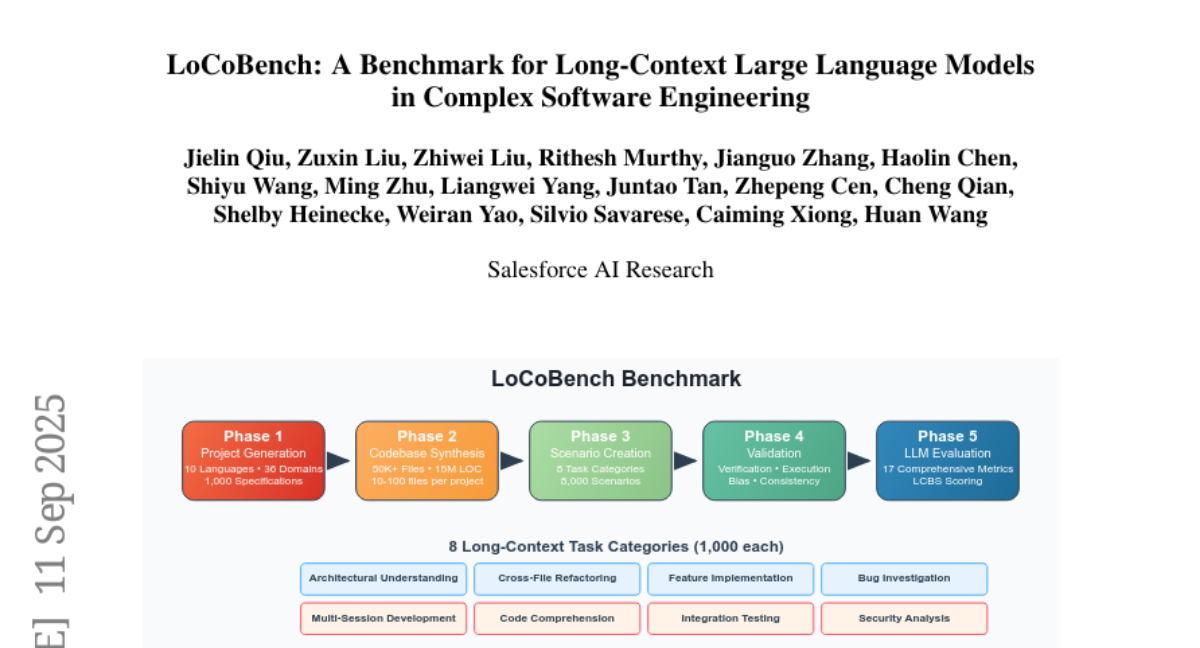

20. LoCoBench: A Benchmark for Long-Context Large Language Models in Complex Software Engineering

🔑 Keywords: Long-Context Language Models, LoCoBench, Codebases, Architectural Consistency, Evaluation Metrics

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The study aims to address the gap in evaluating long-context language models in complex software development scenarios, particularly in understanding entire codebases and maintaining architectural consistency.

🛠️ Research Methods:

– LoCoBench, a benchmark designed for long-context capabilities, evaluates models across 8,000 scenarios in 10 programming languages with varying context lengths.

– The evaluation framework includes 17 metrics across 4 dimensions, introducing a comprehensive LoCoBench Score (LCBS).

💬 Research Conclusions:

– The evaluation reveals significant performance gaps in current state-of-the-art long-context models, highlighting the challenge and necessity for improved solutions in complex software development understanding.

👉 Paper link: https://huggingface.co/papers/2509.09614

21. Modality Alignment with Multi-scale Bilateral Attention for Multimodal Recommendation

🔑 Keywords: MambaRec, Multimodal Recommendation Systems, AI-generated summary, Fusion Quality, Global Modality Alignment

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The research aims to enhance multimodal recommendation systems by improving cross-modal fusion and reducing representational bias.

🛠️ Research Methods:

– Introduction of MambaRec framework with local feature alignment and global distribution regularization through attention-guided learning.

– Utilization of the Dilated Refinement Attention Module (DREAM) for aligning semantic patterns between visual and textual modalities and applying Maximum Mean Discrepancy (MMD) with contrastive loss for global alignment.

💬 Research Conclusions:

– MambaRec demonstrates superior performance in fusion quality, generalization, and efficiency on real-world e-commerce datasets.

👉 Paper link: https://huggingface.co/papers/2509.09114

22. Cross-Domain Evaluation of Transformer-Based Vulnerability Detection on Open & Industry Data

🔑 Keywords: CodeBERT, CI/CD integration, vulnerability detection, cross-domain generalization, AI-DO

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To evaluate and enhance vulnerability detection in code using fine-tuned CodeBERT within CI/CD pipelines.

🛠️ Research Methods:

– Fine-tuning of CodeBERT on open-source and industrial data, with a focus on cross-domain generalization and class imbalance handling.

💬 Research Conclusions:

– Fine-tuned CodeBERT improves vulnerability detection, especially with undersampling techniques, though performance varies with data domain.

– AI-DO recommender system effectively integrates into workflows, enhancing code review processes without disruptions.

👉 Paper link: https://huggingface.co/papers/2509.09313