AI Native Daily Paper Digest – 20250915

1. IntrEx: A Dataset for Modeling Engagement in Educational Conversations

🔑 Keywords: IntrEx, Large Language Models, Engagement, Educational Conversations, Linguistic and Cognitive Factors

💡 Category: AI in Education

🌟 Research Objective:

– To address the gap in understanding linguistic features that drive engagement in educational conversations by introducing the IntrEx dataset.

🛠️ Research Methods:

– Employed a rigorous annotation process with over 100 second-language learners and used a comparison-based rating approach inspired by reinforcement learning from human feedback (RLHF).

💬 Research Conclusions:

– Fine-tuned large language models (LLMs) on interestingness ratings outperform larger proprietary models like GPT-4o, and linguistic and cognitive factors such as concreteness, comprehensibility, and uptake significantly influence engagement in educational dialogues.

👉 Paper link: https://huggingface.co/papers/2509.06652

2. X-Part: high fidelity and structure coherent shape decomposition

🔑 Keywords: AI Native, Generative model, Bounding box, Point-wise semantic features, Part-level shape generation

💡 Category: Generative Models

🌟 Research Objective:

– The main objective is to introduce X-Part, a controllable generative model for decomposing 3D objects into semantically meaningful parts with high geometric fidelity.

🛠️ Research Methods:

– Utilizes bounding boxes and point-wise semantic features to achieve meaningful decomposition and supports an editable pipeline for interactive part generation.

💬 Research Conclusions:

– X-Part establishes a new paradigm in part-level shape generation, achieving state-of-the-art performance and offering production-ready, editable, and structurally sound 3D assets.

👉 Paper link: https://huggingface.co/papers/2509.08643

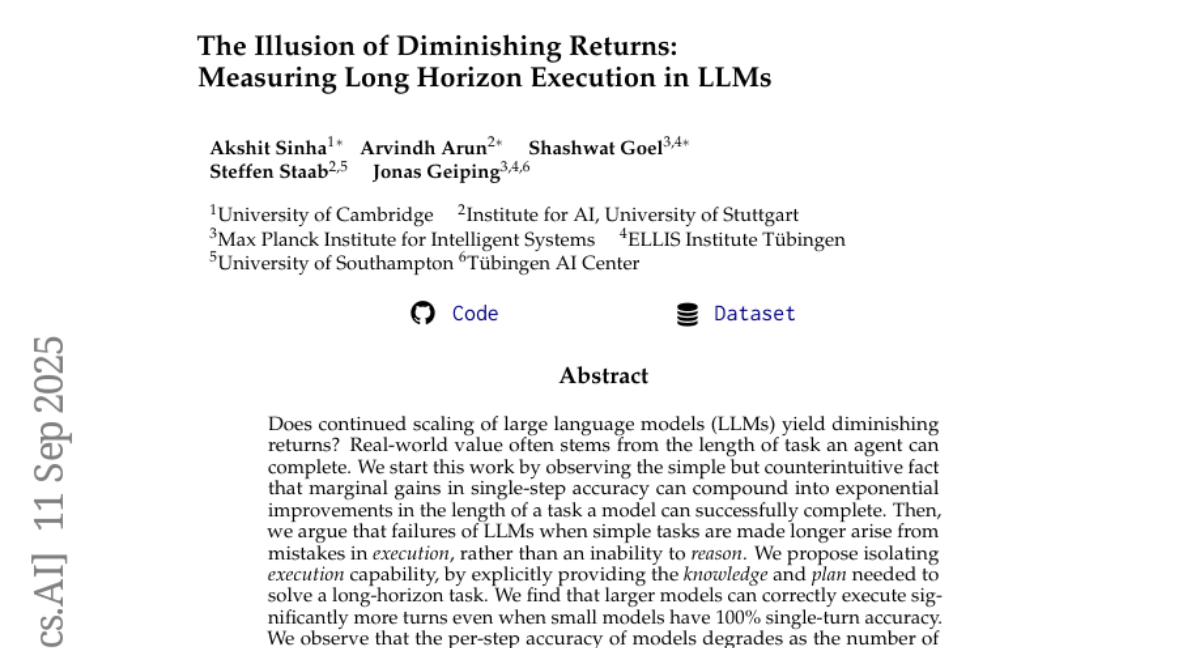

3. The Illusion of Diminishing Returns: Measuring Long Horizon Execution in LLMs

🔑 Keywords: Large Language Models, Execution Capability, Self-conditioning, Thinking Models

💡 Category: Natural Language Processing

🌟 Research Objective:

– Investigate whether scaling large language models results in diminishing returns in the context of long-task execution.

🛠️ Research Methods:

– Analyzing the execution capability by isolating it and providing the necessary knowledge and plans for long-horizon tasks.

– Benchmarking thinking models to understand their execution ability in single turns.

💬 Research Conclusions:

– Larger models improve task length completion despite diminishing single-step accuracy.

– Self-conditioning effects degrade per-step accuracy as task steps increase.

– Recent thinking models can execute longer tasks without self-conditioning, emphasizing the benefits of model scaling for long-horizon tasks.

👉 Paper link: https://huggingface.co/papers/2509.09677

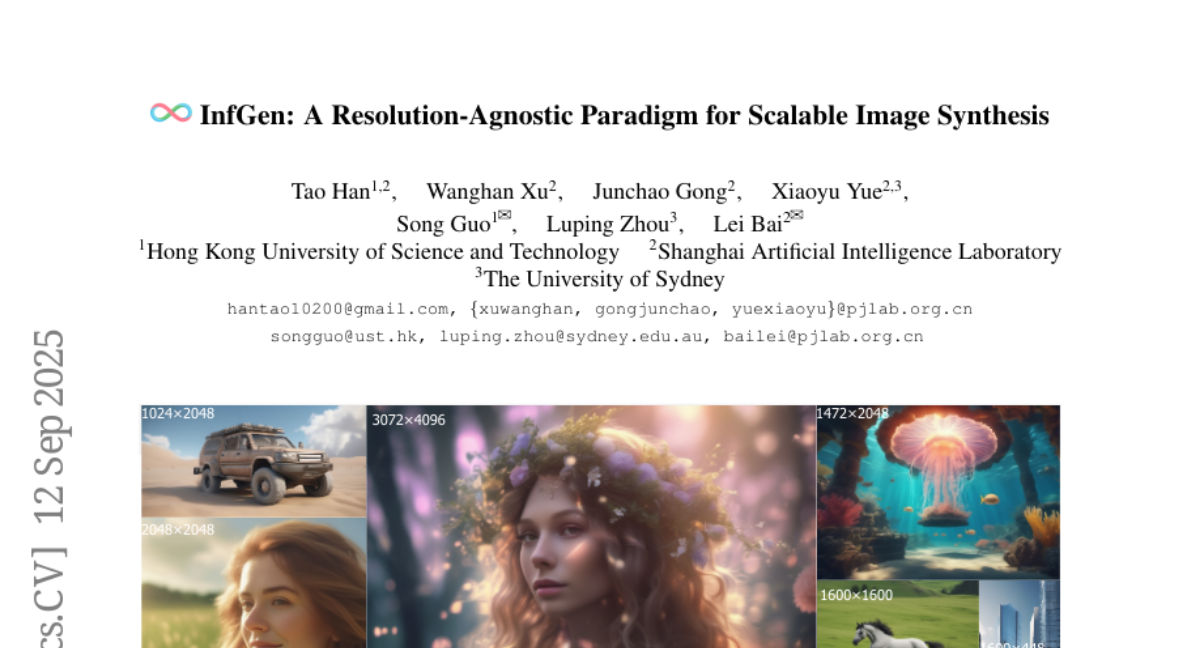

4. InfGen: A Resolution-Agnostic Paradigm for Scalable Image Synthesis

🔑 Keywords: InfGen, arbitrary resolution, one-step generator, VAE decoder, image generation time

💡 Category: Generative Models

🌟 Research Objective:

– Introduce InfGen as a new one-step generator to replace the VAE decoder for generating arbitrary high-resolution images from a fixed-size latent, reducing computational complexity and generation time.

🛠️ Research Methods:

– Utilize a compact generated latent from diffusion models to decode images at any resolution without the need to retrain the models, simplifying the process.

💬 Research Conclusions:

– InfGen enables high-resolution image generation quickly, drastically cutting the 4K image generation time to under 10 seconds and improving efficiency across different models.

👉 Paper link: https://huggingface.co/papers/2509.10441

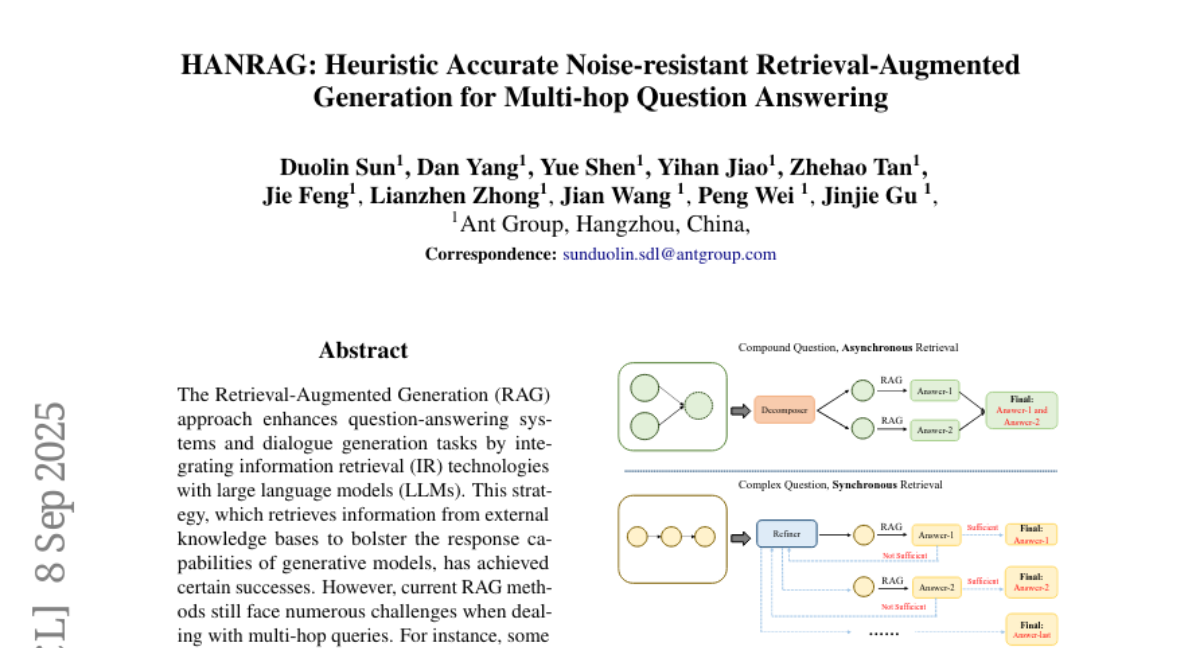

5. HANRAG: Heuristic Accurate Noise-resistant Retrieval-Augmented Generation for Multi-hop Question Answering

🔑 Keywords: HANRAG, Retrieval-Augmented Generation (RAG), multi-hop queries, large language models (LLMs), heuristic-based framework

💡 Category: Natural Language Processing

🌟 Research Objective:

– To introduce HANRAG, a heuristic-based framework, aimed at improving question-answering systems by efficiently handling multi-hop queries and reducing noise.

🛠️ Research Methods:

– The study utilizes a powerful revelator to route and decompose queries into sub-queries, filtering out noise to improve the adaptability and effectiveness of the system.

💬 Research Conclusions:

– HANRAG demonstrates superior performance compared to other leading methods in handling both single-hop and multi-hop question-answering tasks across various benchmarks.

👉 Paper link: https://huggingface.co/papers/2509.09713

6. Virtual Agent Economies

🔑 Keywords: AI agent economy, sandbox economy, autonomous AI agents, steerable AI markets

💡 Category: AI Ethics and Fairness

🌟 Research Objective:

– To analyze the emerging AI agent economy using the “sandbox economy” framework and explore facets like origins and permeability to design safe and steerable AI markets.

🛠️ Research Methods:

– Examination of systemic economic risk and inequality challenges.

– Consideration of auction mechanisms for fair resource allocation.

– Designing AI “mission economies” for coordination around collective goals.

– Discussion on socio-technical infrastructure for trust, safety, and accountability.

💬 Research Conclusions:

– The paper advocates for proactive design of steerable agent markets to align with long-term human collective flourishing, amidst opportunities and challenges posed by the spontaneous emergence of the autonomous AI agent economy.

👉 Paper link: https://huggingface.co/papers/2509.10147

7. VStyle: A Benchmark for Voice Style Adaptation with Spoken Instructions

🔑 Keywords: Voice Style Adaptation, Spoken Language Models, VStyle, Large Audio Language Model as a Judge

💡 Category: Human-AI Interaction

🌟 Research Objective:

– Introduce Voice Style Adaptation (VSA) to evaluate the ability of Spoken Language Models (SLMs) to adapt speaking styles based on spoken instructions.

🛠️ Research Methods:

– Development of VStyle, a bilingual benchmark (Chinese & English) for evaluating modifications in speaking styles across four speech generation categories.

– Introduction of a framework called Large Audio Language Model as a Judge for objective assessment of style adherence, textual faithfulness, and naturalness.

💬 Research Conclusions:

– Experiments indicate current models face significant challenges in controllable style adaptation, underscoring the novelty and difficulty of the VSA task.

– VStyle and its evaluation toolkit are released to aid in advancing human-centered spoken interaction.

👉 Paper link: https://huggingface.co/papers/2509.09716

8. FLOWER: Democratizing Generalist Robot Policies with Efficient Vision-Language-Action Flow Policies

🔑 Keywords: Vision-Language-Action (VLA), intermediate-modality fusion, Global-AdaLN conditioning, FLOWER

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To develop an efficient Vision-Language-Action (VLA) policy with reduced computational costs and resource requirements.

🛠️ Research Methods:

– Introduction of intermediate-modality fusion to prune up to 50% of LLM layers.

– Implementation of action-specific Global-AdaLN conditioning to reduce parameters by 20%.

💬 Research Conclusions:

– FLOWER, a 950 M-parameter VLA policy, achieves competitive performance on 190 tasks across multiple benchmarks.

– Establishes new SoTA of 4.53 on the CALVIN ABC benchmark, demonstrating robustness across diverse robotic embodiments.

👉 Paper link: https://huggingface.co/papers/2509.04996

9. Inpainting-Guided Policy Optimization for Diffusion Large Language Models

🔑 Keywords: IGPO, RL framework, inpainting, mathematical benchmarks, sample efficiency

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To enhance sample efficiency and achieve state-of-the-art results in mathematical benchmarks using a novel RL framework, IGPO, that utilizes inpainting in masked diffusion large language models.

🛠️ Research Methods:

– Introduction of IGPO (Inpainting Guided Policy Optimization) which strategically inserts partial ground-truth reasoning traces during online sampling.

– Application of IGPO to group-based optimization methods and incorporation of entropy-based filtering.

💬 Research Conclusions:

– IGPO significantly improves sample efficiency and restores meaningful gradients.

– Achieves new state-of-the-art results across mathematical benchmarks like GSM8K, Math500, and AMC for full-attention masked diffusion LLMs.

👉 Paper link: https://huggingface.co/papers/2509.10396

10. LoFT: Parameter-Efficient Fine-Tuning for Long-tailed Semi-Supervised Learning in Open-World Scenarios

🔑 Keywords: LoFT, Semi-Supervised Learning, Pseudolabels, Open-World Scenarios, Parameter-Efficient Fine-Tuning

💡 Category: Machine Learning

🌟 Research Objective:

– To enhance the reliability of pseudolabels and the discriminative ability in long-tailed semi-supervised learning, particularly under open-world conditions.

🛠️ Research Methods:

– Introduction of LoFT, a parameter-efficient fine-tuning framework that uses fine-tuned foundation models.

– Exploration of LoFT-OW for handling open-world scenarios where unlabeled data might include out-of-distribution samples.

💬 Research Conclusions:

– The proposed method, LoFT, outperforms previous LTSSL approaches by producing more reliable pseudolabels.

– Demonstrates superior performance in multiple benchmarks, even with minimal utilization of unlabeled data.

👉 Paper link: https://huggingface.co/papers/2509.09926

11. World Modeling with Probabilistic Structure Integration

🔑 Keywords: Probabilistic Structure Integration, probabilistic prediction, structure extraction, video prediction, conditional distributions

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to establish Probabilistic Structure Integration (PSI) as a system for developing richly controllable and flexibly promptable world models from data, enhancing video prediction and understanding.

🛠️ Research Methods:

– PSI adopts a three-step cycle: probabilistic prediction using a graphical model, structure extraction using causal inference, and integration by converting structures into new token types for continuous retraining.

💬 Research Conclusions:

– The model demonstrates state-of-the-art capabilities in optical flow, self-supervised depth, and object segmentation, proving its efficacy in video prediction and facilitating predictive improvements.

👉 Paper link: https://huggingface.co/papers/2509.09737

12. QuantAgent: Price-Driven Multi-Agent LLMs for High-Frequency Trading

🔑 Keywords: High-Frequency Trading, QuantAgent, Multi-Agent LLM, Technical Indicators, Predictive Accuracy

💡 Category: AI in Finance

🌟 Research Objective:

– To develop a multi-agent LLM framework, QuantAgent, specifically for high-frequency algorithmic trading.

🛠️ Research Methods:

– Decomposition of trading into four specialized agents: Indicator, Pattern, Trend, and Risk, each using domain-specific tools and structured reasoning.

💬 Research Conclusions:

– QuantAgent demonstrates superior predictive accuracy and cumulative return in zero-shot evaluations across financial instruments, outperforming existing neural and rule-based systems.

👉 Paper link: https://huggingface.co/papers/2509.09995

13. Color Me Correctly: Bridging Perceptual Color Spaces and Text Embeddings for Improved Diffusion Generation

🔑 Keywords: AI-generated summary, color fidelity, large language model (LLM), text-to-image (T2I) generation, diffusion models

💡 Category: Generative Models

🌟 Research Objective:

– To improve color accuracy in text-to-image generation by resolving ambiguous color terms using a large language model without additional training.

🛠️ Research Methods:

– Employs a training-free framework using a large language model to refine text embeddings based on spatial relationships of color terms in the CIELAB color space.

💬 Research Conclusions:

– The proposed framework enhances color alignment and accuracy without sacrificing image quality, effectively linking text semantics with visual generation.

👉 Paper link: https://huggingface.co/papers/2509.10058

14. Visual-TableQA: Open-Domain Benchmark for Reasoning over Table Images

🔑 Keywords: Visual reasoning, vision-language models, multimodal dataset, reasoning LLMs, LaTeX-rendered tables

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To introduce and evaluate Visual-TableQA, a large-scale, open-domain dataset designed to enhance visual reasoning over complex tabular data.

🛠️ Research Methods:

– Developed a modular, scalable, and autonomous generation pipeline using multiple reasoning LLMs for generation, validation, and inspiration roles.

💬 Research Conclusions:

– Models fine-tuned on Visual-TableQA generalize well to external benchmarks, outperforming several proprietary models, with the dataset and pipeline publicly available.

👉 Paper link: https://huggingface.co/papers/2509.07966

15. MCP-AgentBench: Evaluating Real-World Language Agent Performance with MCP-Mediated Tools

🔑 Keywords: MCP, MCP-AgentBench, benchmark, language agents, tool interactions

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To introduce MCP-AgentBench, a benchmark designed to evaluate language agent capabilities in MCP-mediated tool interactions.

🛠️ Research Methods:

– Established a robust MCP testbed with 33 operational servers using 188 distinct tools.

– Developed a benchmark with 600 systematically designed queries across 6 categories of interaction complexity.

– Introduced MCP-Eval, a novel evaluation methodology focusing on real-world task success.

💬 Research Conclusions:

– MCP-AgentBench provides foundational insights and a standardized framework for researchers to build, validate, and advance agents leveraging MCP’s benefits, accelerating progress towards capable and interoperable AI systems.

👉 Paper link: https://huggingface.co/papers/2509.09734

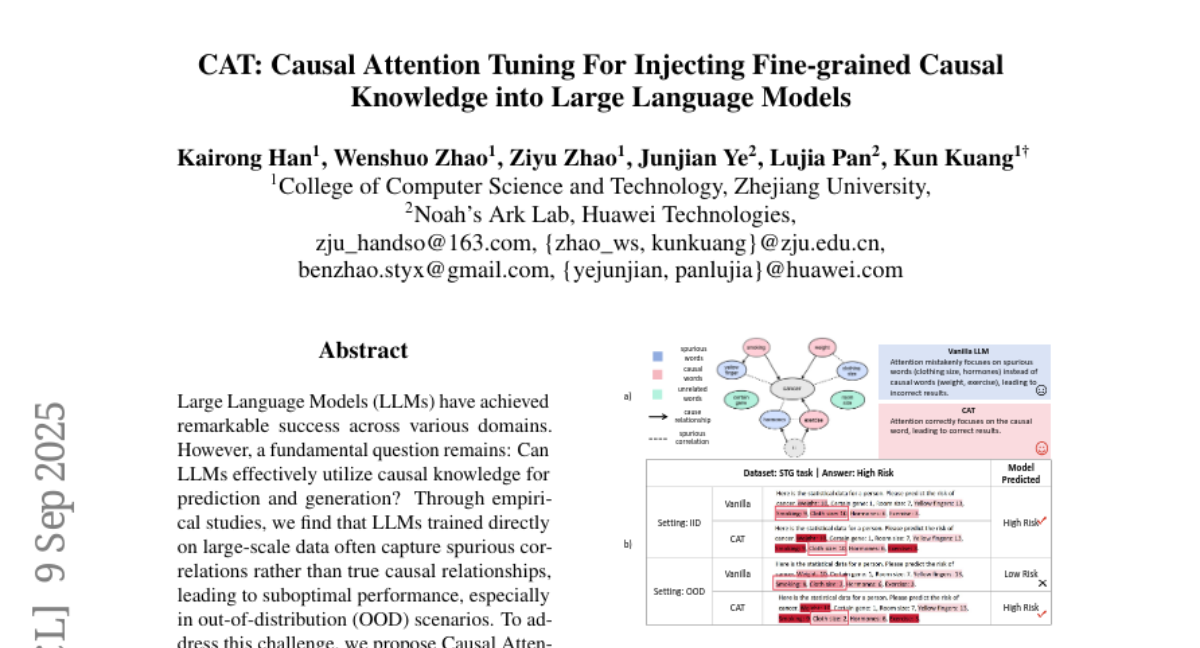

16. CAT: Causal Attention Tuning For Injecting Fine-grained Causal Knowledge into Large Language Models

🔑 Keywords: Causal Attention Tuning, Large Language Models, causal knowledge, out-of-distribution

💡 Category: Natural Language Processing

🌟 Research Objective:

– The objective is to enhance Large Language Models by incorporating causal knowledge into their attention mechanisms to improve their prediction accuracy and robustness, especially in out-of-distribution scenarios.

🛠️ Research Methods:

– The study introduces Causal Attention Tuning (CAT), using an automated pipeline leveraging human priors to generate token-level causal signals, along with the Re-Attention mechanism to guide model training.

💬 Research Conclusions:

– The proposed method effectively uses causal knowledge for predictions and maintains robustness in out-of-distribution scenarios, as demonstrated through experiments on the Spurious Token Game benchmark and multiple downstream tasks.

👉 Paper link: https://huggingface.co/papers/2509.01535

17. DeMeVa at LeWiDi-2025: Modeling Perspectives with In-Context Learning and Label Distribution Learning

🔑 Keywords: in-context learning, label distribution learning, RoBERTa, soft labels

💡 Category: Natural Language Processing

🌟 Research Objective:

– To explore in-context learning and label distribution learning for predicting annotator-specific annotations and generating soft labels.

🛠️ Research Methods:

– Utilization of large language models with example sampling strategies for in-context learning and evaluation of fine-tuning methods with RoBERTa for label distribution learning.

💬 Research Conclusions:

– In-context learning can effectively predict annotator-specific annotations, and aggregating predictions into soft labels yields competitive performance. Label distribution learning methods are promising for soft label predictions and warrant further exploration.

👉 Paper link: https://huggingface.co/papers/2509.09524

18. Large Language Model Hacking: Quantifying the Hidden Risks of Using LLMs for Text Annotation

🔑 Keywords: LLM hacking, Large language models, data annotation, human annotations, statistical conclusions

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study investigates the risks of variability and error introduced by LLM hacking in social science research and emphasizes the need for rigorous verification and human annotations.

🛠️ Research Methods:

– The researchers replicated 37 data annotation tasks using 18 different LLMs across 21 published studies, analyzing 13 million LLM labels and testing 2,361 hypotheses.

💬 Research Conclusions:

– LLM outputs can lead to incorrect statistical conclusions in one-third to one-half of cases. While higher model capabilities can reduce risks, significant errors can still occur, underscoring the importance of human involvement and caution against dependency on automated regression estimator corrections.

👉 Paper link: https://huggingface.co/papers/2509.08825

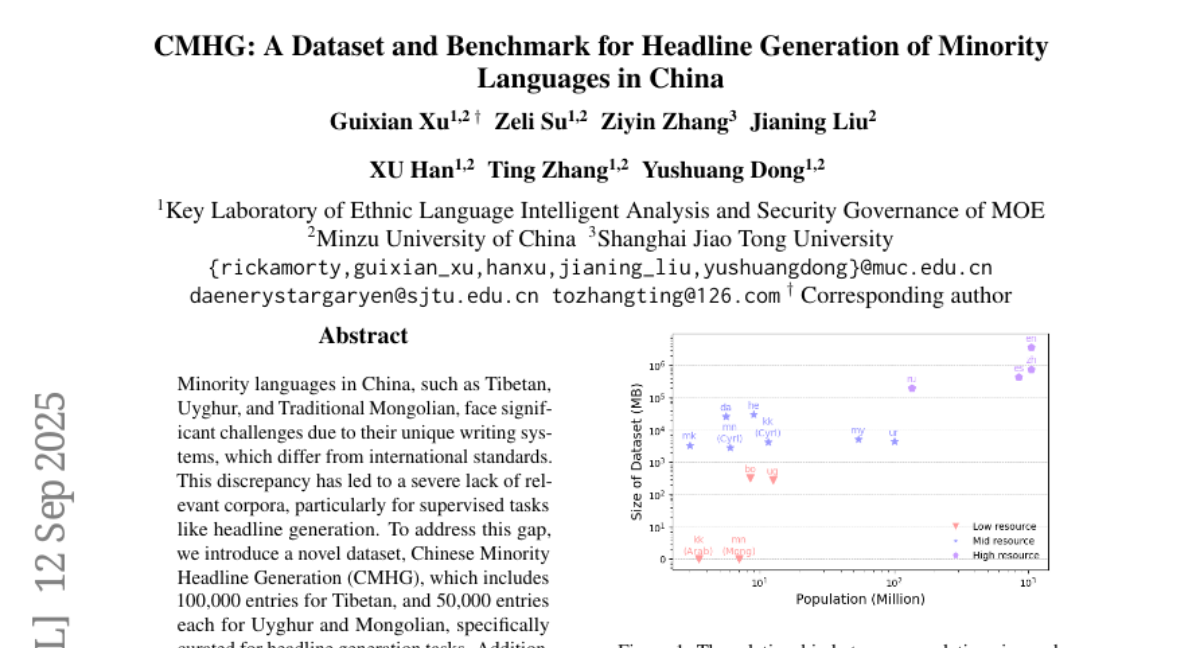

19. CMHG: A Dataset and Benchmark for Headline Generation of Minority Languages in China

🔑 Keywords: Minority languages, Headline generation, Dataset, Tibetan, Uyghur, Mongolian

💡 Category: Natural Language Processing

🌟 Research Objective:

– To introduce the CMHG dataset, which provides significant entries for headline generation tasks in Tibetan, Uyghur, and Mongolian languages, aiming to address the lack of relevant corpora.

🛠️ Research Methods:

– A novel dataset was created with 100,000 entries for Tibetan and 50,000 entries each for Uyghur and Mongolian. A high-quality test set annotated by native speakers was also developed to serve as a benchmark.

💬 Research Conclusions:

– The dataset is expected to become a valuable resource for advancing headline generation and related benchmarks in Chinese minority languages.

👉 Paper link: https://huggingface.co/papers/2509.09990

20. Context Engineering for Trustworthiness: Rescorla Wagner Steering Under Mixed and Inappropriate Contexts

🔑 Keywords: RW-Steering, LLM safety, Poisoned Context Testbed, Rescorla-Wagner model, context engineering

💡 Category: Natural Language Processing

🌟 Research Objective:

– To investigate how LLMs process mixed real-world contexts with disproportionate inappropriate content and to enhance LLM safety by improving response quality.

🛠️ Research Methods:

– Use of the Poisoned Context Testbed to pair queries with contexts containing relevant and inappropriate content.

– Adaptation of the Rescorla-Wagner model from neuroscience to measure the influence of contextual signals.

– Development of RW-Steering, a fine-tuning approach to enable ignoring inappropriate signals.

💬 Research Conclusions:

– LLMs tend to incorporate less prevalent information, posing risks with inappropriate content.

– RW-Steering improves response quality by 39.8%, effectively addressing the incorporation of inappropriate signals and proving to be a robust solution for LLM safety in diverse real-world contexts.

👉 Paper link: https://huggingface.co/papers/2509.04500

21.