AI Native Daily Paper Digest – 20250916

1. OmniWorld: A Multi-Domain and Multi-Modal Dataset for 4D World Modeling

🔑 Keywords: OmniWorld, 4D world modeling, multimodal learning, AI-generated summary

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Address the limitations of existing 4D world modeling datasets to enhance performance in 4D reconstruction and video generation.

🛠️ Research Methods:

– Introduction of OmniWorld, a large-scale, multi-domain, multi-modal dataset designed for 4D world modeling, featuring a newly collected OmniWorld-Game dataset and curated public datasets.

💬 Research Conclusions:

– By fine-tuning state-of-the-art methods on OmniWorld, significant performance gains are realized, establishing it as a powerful resource for advancing 4D world models and improving machines’ understanding of the physical world.

👉 Paper link: https://huggingface.co/papers/2509.12201

2. UI-S1: Advancing GUI Automation via Semi-online Reinforcement Learning

🔑 Keywords: Semi-online Reinforcement Learning, offline RL, online RL, GUI agents, dynamic benchmarks

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To address the limitations of offline and online reinforcement learning by proposing a novel paradigm called Semi-online Reinforcement Learning, which simulates online RL on offline trajectories.

🛠️ Research Methods:

– The method involves using a Patch Module to recover divergence in multi-turn dialogue rollouts, and incorporates discounted future returns and weighted step-level and episode-level advantages for policy optimization.

💬 Research Conclusions:

– Semi-online Reinforcement Learning achieves state-of-the-art performance in dynamic benchmarks, bridging the gap between offline training efficiency and online multi-turn reasoning. The approach significantly outperforms the base model in various benchmarks.

👉 Paper link: https://huggingface.co/papers/2509.11543

3. InternScenes: A Large-scale Simulatable Indoor Scene Dataset with Realistic Layouts

🔑 Keywords: InternScenes, Embodied AI, scene diversity, realistic layouts, 3D objects

💡 Category: Computer Vision

🌟 Research Objective:

– Introduce InternScenes, a novel large-scale, diverse, and realistic indoor scene dataset to enhance scene layout generation and point-goal navigation.

🛠️ Research Methods:

– Utilize approximately 40,000 diverse scenes integrating real-world scans, procedurally generated scenes, and designer-created scenes, including 1.96M 3D objects.

– Employ a comprehensive data processing pipeline for creating real-to-sim replicas, incorporating interactive objects, and resolving object collisions through physical simulations.

💬 Research Conclusions:

– Demonstrate the value of InternScenes in scene layout generation and point-goal navigation, highlighting the new challenges posed by complex and realistic layouts.

– Commit to open-sourcing the data, models, and benchmarks to advance community research.

👉 Paper link: https://huggingface.co/papers/2509.10813

4. SearchInstruct: Enhancing Domain Adaptation via Retrieval-Based Instruction Dataset Creation

🔑 Keywords: Supervised Fine-Tuning, large language models, domain-specific, model editing

💡 Category: Natural Language Processing

🌟 Research Objective:

– To propose SearchInstruct, a method designed to create high quality instruction datasets for Supervised Fine-Tuning in specific domains.

🛠️ Research Methods:

– By starting with a limited set of domain-specific questions, the method systematically expands them using a large language model and retrieves domain-relevant resources to generate accurate answers.

💬 Research Conclusions:

– SearchInstruct enhances the diversity and quality of datasets, thereby improving large language model performance in specialized domains. It also facilitates efficient model updates.

👉 Paper link: https://huggingface.co/papers/2509.10708

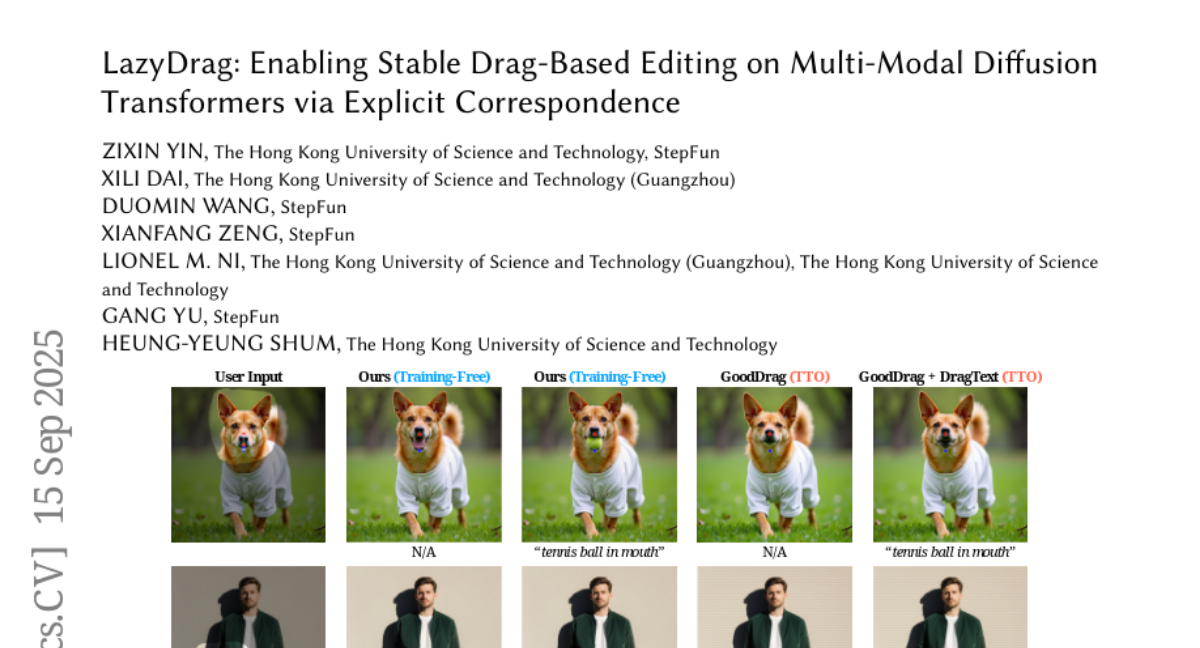

5. LazyDrag: Enabling Stable Drag-Based Editing on Multi-Modal Diffusion Transformers via Explicit Correspondence

🔑 Keywords: LazyDrag, Multi-Modal Diffusion Transformers, drag-based editing, attention, test-time optimization

💡 Category: Generative Models

🌟 Research Objective:

– To introduce LazyDrag, a drag-based image editing method for Multi-Modal Diffusion Transformers that eliminates reliance on implicit point matching to enhance generative capabilities.

🛠️ Research Methods:

– LazyDrag utilizes an explicit correspondence map from user drag inputs to boost attention control, supporting multi-round workflows with complex geometric and text-guided edits.

💬 Research Conclusions:

– LazyDrag outperforms baselines in drag accuracy and perceptual quality, establishing itself as a new state-of-the-art in the domain, effectively paving new paths in editing paradigms.

👉 Paper link: https://huggingface.co/papers/2509.12203

6. Locality in Image Diffusion Models Emerges from Data Statistics

🔑 Keywords: diffusion models, optimal denoiser, locality, convolutional neural networks, pixel correlations

💡 Category: Generative Models

🌟 Research Objective:

– To investigate the role of locality in deep diffusion models and demonstrate its emergence as a statistical property of image datasets rather than an inductive bias of convolutional neural networks.

🛠️ Research Methods:

– Theoretical and experimental analysis of an optimal parametric linear denoiser and its locality properties, focusing on the correlation between image pixels in datasets.

💬 Research Conclusions:

– Evidence shows locality in diffusion models is due to pixel correlations within image datasets. This insight led to the development of an analytical denoiser that better aligns with deep diffusion model scores.

👉 Paper link: https://huggingface.co/papers/2509.09672

7. Learning to Optimize Multi-Objective Alignment Through Dynamic Reward Weighting

🔑 Keywords: Multi-objective reinforcement learning, dynamic reward weighting, Pareto fronts, online preference alignment, non-convex mappings

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To introduce dynamic reward weighting in multi-objective reinforcement learning to overcome the limitations of fixed-weight scalarization for capturing non-convex Pareto fronts.

🛠️ Research Methods:

– Developed two approaches for dynamic reward weighting: hypervolume-guided weight adaptation and gradient-based weight optimization, tested with online reinforcement learning algorithms.

💬 Research Conclusions:

– Dynamic reward weighting effectively explores Pareto fronts and achieves Pareto dominant solutions with fewer training steps compared to fixed-weight linear scalarization, demonstrating applicability across multiple datasets and model families.

👉 Paper link: https://huggingface.co/papers/2509.11452

8. Lost in Embeddings: Information Loss in Vision-Language Models

🔑 Keywords: Vision-Language Models, pretrained vision encoder, modality fusion, latent representation space, visually grounded question-answering tasks

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To investigate and measure information loss during the projection of visual inputs into the language model’s embedding space in Vision-Language Models.

🛠️ Research Methods:

– Two approaches are used: analyzing k-nearest neighbor relationships before and after projection to assess semantic information preservation, and reconstructing visual embeddings to directly measure information loss at the image patch level.

💬 Research Conclusions:

– The projection process causes significant distortions in the local geometry of visual representations, affecting retrieval performance. Areas of high information loss are linked to challenges in visually grounded question-answering tasks.

👉 Paper link: https://huggingface.co/papers/2509.11986

9. Measuring Epistemic Humility in Multimodal Large Language Models

🔑 Keywords: HumbleBench, Multimodal Large Language Models, Hallucinations, Visual Question Answering, Epistemic Humility

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Develop HumbleBench to evaluate the ability of multimodal large language models (MLLMs) to reject incorrect answers and address hallucinations in visual question answering and decision-making.

🛠️ Research Methods:

– Utilization of a panoptic scene graph dataset to extract ground-truth entities and relations.

– Generation of multiple-choice questions using GPT-4-Turbo, followed by manual filtering.

– Inclusion of a “None of the above” option to assess models’ epistemic humility.

💬 Research Conclusions:

– HumbleBench addresses a gap in existing evaluation tools, offering a more realistic measure of MLLM reliability in safety-critical applications.

– The study highlights the importance of false-option rejection for trustworthy AI, enhancing the evaluation of MLLMs in real-world settings.

– The dataset and code are publicly available for further research and community insights.

👉 Paper link: https://huggingface.co/papers/2509.09658

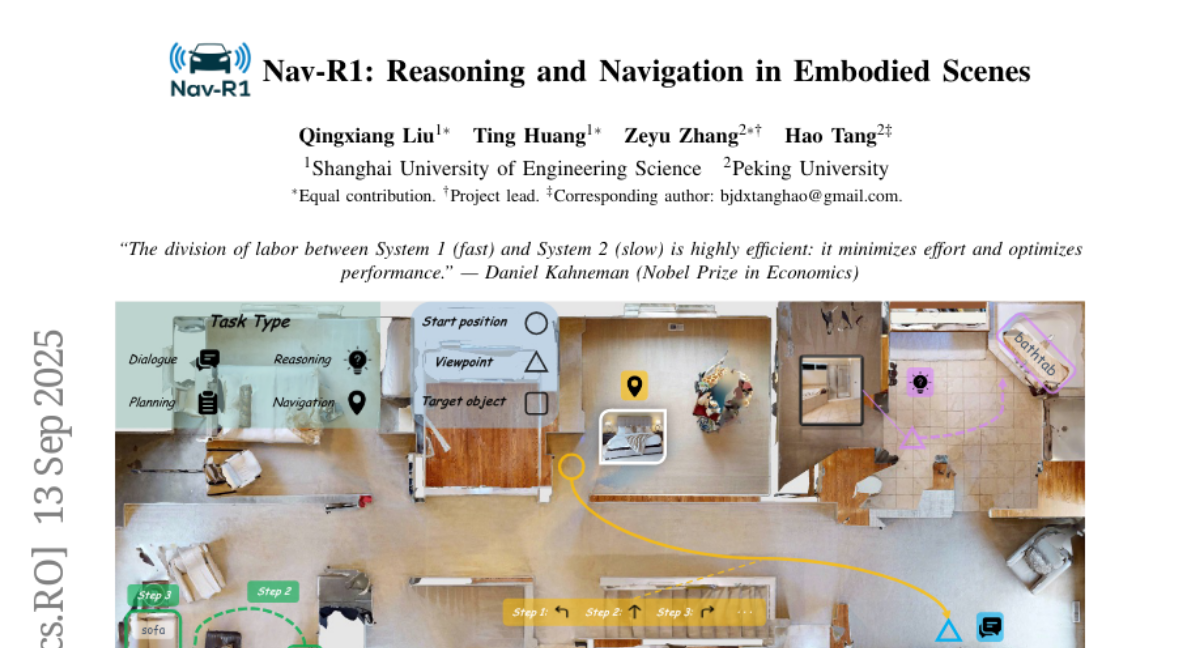

10. Nav-R1: Reasoning and Navigation in Embodied Scenes

🔑 Keywords: Embodied navigation, structured reasoning, decoupled control, semantic reasoning, reinforcement learning

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Develop Nav-R1, an embodied foundation model to enhance navigation by integrating structured reasoning and decoupled control mechanisms.

🛠️ Research Methods:

– Construct a large-scale dataset, Nav-CoT-110K, for embodied tasks and design a GRPO-based reinforcement learning framework with complementary rewards.

– Introduce a Fast-in-Slow reasoning paradigm to separate deliberate semantic reasoning from low-latency reactive control.

💬 Research Conclusions:

– Nav-R1 surpasses current models on benchmarks with over 8% average improvement in reasoning and navigation performance and demonstrates robustness in real-world mobile robot deployments.

👉 Paper link: https://huggingface.co/papers/2509.10884

11. CognitiveSky: Scalable Sentiment and Narrative Analysis for Decentralized Social Media

🔑 Keywords: CognitiveSky, transformer-based, sentiment analysis, decentralized networks, AI-generated summary

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce CognitiveSky, a scalable framework for sentiment, emotion, and narrative analysis on decentralized social media, specifically Bluesky.

🛠️ Research Methods:

– Utilize transformer-based models to process data from Bluesky’s API, creating structured outputs for dynamic dashboard visualization.

💬 Research Conclusions:

– CognitiveSky, built on a low-cost infrastructure, provides an accessible tool for computational social science, applicable in various domains such as mental health monitoring, disinformation detection, and civic sentiment analysis.

👉 Paper link: https://huggingface.co/papers/2509.11444

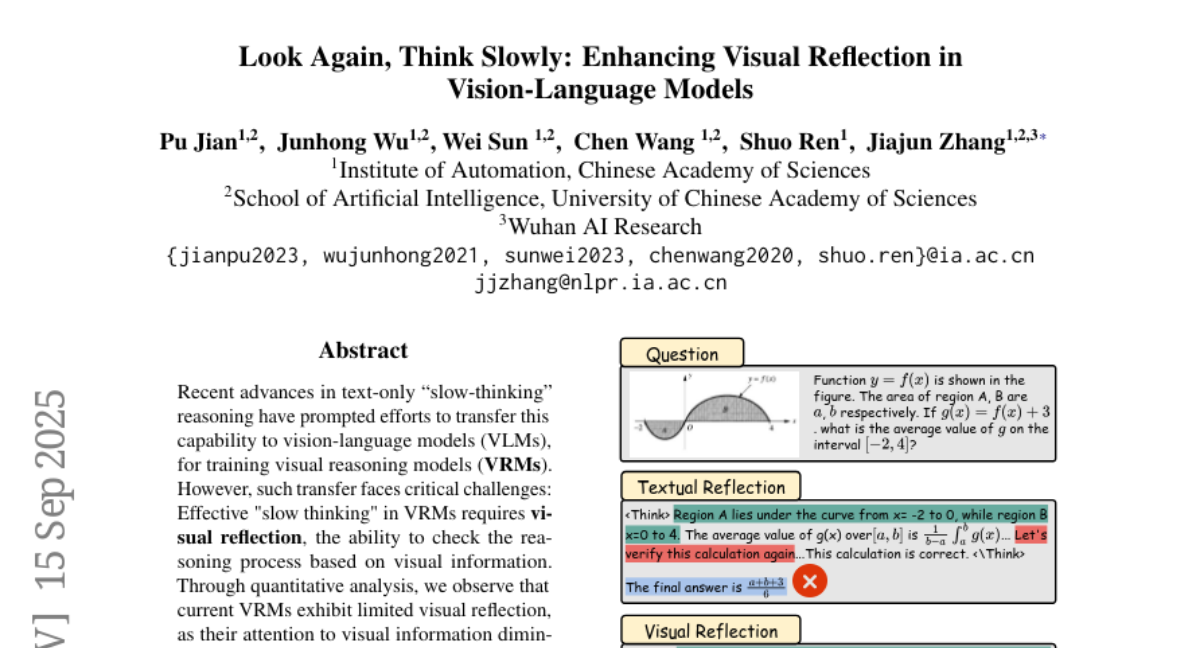

12. Look Again, Think Slowly: Enhancing Visual Reflection in Vision-Language Models

🔑 Keywords: Visual Attention, Visual Reflection, VRMs, Cold-Start, Reinforcement Learning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study introduces Reflection-V, a vision-centered model enhancing visual reflection in visual reasoning models (VRMs).

🛠️ Research Methods:

– Vision-centered reasoning data is constructed for a cold-start learning approach combined with a visual attention reward model for reinforcement learning.

💬 Research Conclusions:

– Reflection-V significantly improves visual reasoning performance and maintains stronger reliance on visual information compared to existing models.

👉 Paper link: https://huggingface.co/papers/2509.12132

13. PersonaX: Multimodal Datasets with LLM-Inferred Behavior Traits

🔑 Keywords: PersonaX, multimodal datasets, large language models, causal reasoning, behavioral traits

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To create PersonaX, a multimodal dataset combining behavioral traits, facial imagery, and biographical information for comprehensive analysis and causal reasoning using large language models.

🛠️ Research Methods:

– Development of PersonaX consisting of CelebPersona and AthlePersona datasets, including behavioral trait assessments, facial imagery, and structured biographical data.

– Application of statistical independence tests to examine high-level trait scores.

– Introduction of a causal representation learning (CRL) framework tailored to multimodal and multi-measurement data.

💬 Research Conclusions:

– PersonaX provides a comprehensive foundation for studying LLM-inferred behavioral traits in context with visual and biographical attributes.

– The approach advances multimodal trait analysis and causal reasoning, evidenced by experiments on synthetic and real-world data.

👉 Paper link: https://huggingface.co/papers/2509.11362

14. GAPrune: Gradient-Alignment Pruning for Domain-Aware Embeddings

🔑 Keywords: AI-generated summary, domain-specific embedding models, model compression, pruning, Domain Alignment Importance (DAI)

💡 Category: Natural Language Processing

🌟 Research Objective:

– The research aims to address the challenges of model deployment in resource-constrained environments by proposing GAPrune, a pruning framework that considers domain importance while preserving general linguistic foundations.

🛠️ Research Methods:

– The method involves using Fisher Information and general-domain gradient alignment to measure parameter importance and behavior, respectively. These signals are combined into a Domain Alignment Importance (DAI) scoring system to guide pruning decisions.

💬 Research Conclusions:

– GAPrune can maintain model performance close to dense models even at high sparsity levels, and it enhances domain-specific performance when retraining. This demonstrates that effective pruning strategies can compress models while boosting domain-specific capabilities.

👉 Paper link: https://huggingface.co/papers/2509.10844

15. ClaimIQ at CheckThat! 2025: Comparing Prompted and Fine-Tuned Language Models for Verifying Numerical Claims

🔑 Keywords: zero-shot prompting, parameter-efficient fine-tuning, evidence granularity, LLaMA, numerical fact verification

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper presents a system for verifying numerical and temporal claims in Task 3 of the CLEF 2025 CheckThat! Lab.

🛠️ Research Methods:

– Utilized zero-shot prompting with instruction-tuned large language models and parameter-efficient supervised fine-tuning using LoRA.

– Employed evidence selection strategies such as full-document input and top-k sentence filtering using BM25 and MiniLM.

💬 Research Conclusions:

– The best-performing model LLaMA, fine-tuned with LoRA, showed strong validation performance but faced challenges in generalization in the test set, emphasizing the need for evidence granularity and model adaptation.

👉 Paper link: https://huggingface.co/papers/2509.11492

16. EthicsMH: A Pilot Benchmark for Ethical Reasoning in Mental Health AI

🔑 Keywords: AI ethics, mental health, ethical reasoning, decision accuracy, professional norms

💡 Category: AI Ethics and Fairness

🌟 Research Objective:

– The study introduces EthicsMH, a dataset designed to evaluate AI systems’ ethical reasoning in mental health contexts.

🛠️ Research Methods:

– EthicsMH consists of 125 scenarios with structured fields for multiple decision options, expert-aligned reasoning, expected model behavior, real-world impact, and multi-stakeholder viewpoints.

💬 Research Conclusions:

– Although modest in scale, EthicsMH provides a framework bridging AI ethics and mental health decision-making, and is a seed resource for future contributions to responsibly handle sensitive decisions.

👉 Paper link: https://huggingface.co/papers/2509.11648

17. Dr.V: A Hierarchical Perception-Temporal-Cognition Framework to Diagnose Video Hallucination by Fine-grained Spatial-Temporal Grounding

🔑 Keywords: Video hallucination, Hierarchical framework, Spatial-temporal grounding, Cognitive reasoning, Video understanding

💡 Category: Computer Vision

🌟 Research Objective:

– The study aims to address video hallucinations in large video models by introducing a hierarchical framework, Dr.V, which enhances video understanding through fine-grained spatial-temporal grounding and cognitive reasoning.

🛠️ Research Methods:

– Dr.V is composed of a benchmark dataset, Dr.V-Bench, with 10k instances from 4,974 videos, and a satellite video agent, Dr.V-Agent, which applies a step-by-step pipeline to diagnose video hallucinations through spatial-temporal grounding at perceptive and temporal levels, followed by cognitive reasoning.

💬 Research Conclusions:

– Dr.V-Agent effectively identifies hallucinations and improves interpretability and reliability, providing a practical blueprint for robust video understanding in real-world scenarios. All related data and code are made available online.

👉 Paper link: https://huggingface.co/papers/2509.11866

18. LongEmotion: Measuring Emotional Intelligence of Large Language Models in Long-Context Interaction

🔑 Keywords: Large language models, Emotional Intelligence, LongEmotion, Retrieval-Augmented Generation, Collaborative Emotional Modeling

💡 Category: Natural Language Processing

🌟 Research Objective:

– To enhance large language models’ Emotional Intelligence (EI) in long-context scenarios using the specially designed LongEmotion benchmark.

🛠️ Research Methods:

– Utilization of Retrieval-Augmented Generation (RAG) and Collaborative Emotional Modeling (CoEM) to improve performance under realistic constraints, contrasting with standard prompt-based methods.

💬 Research Conclusions:

– Both RAG and CoEM methods consistently enhance EI-related performance across long-context tasks, advancing LLMs toward practical and real-world EI applications. Additionally, a comparative case study with the GPT series highlights EI differences among models.

👉 Paper link: https://huggingface.co/papers/2509.07403

19. ToolRM: Outcome Reward Models for Tool-Calling Large Language Models

🔑 Keywords: reward modeling, tool-calling scenarios, large language models, outcome-based evaluation, data-efficient fine-tuning

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to enhance reward models in tool-calling scenarios by introducing a benchmark named FC-RewardBench to systematically assess their performance and improve them through outcome-based evaluation.

🛠️ Research Methods:

– The research involves synthesizing data from open-weight large language models and training outcome-based reward models ranging from 1.7B to 14B parameters, with evaluations across seven out-of-domain benchmarks.

💬 Research Conclusions:

– The proposed models outperform general-purpose baselines by up to 25% in downstream task performance and enable data-efficient fine-tuning through reward-guided filtering.

👉 Paper link: https://huggingface.co/papers/2509.11963

20.