AI Native Daily Paper Digest – 20251015

1. Spatial Forcing: Implicit Spatial Representation Alignment for Vision-language-action Model

🔑 Keywords: Vision-language-action models, Spatial Forcing, 3D foundation models, Robotic tasks

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The research aims to enhance the spatial comprehension capabilities of vision-language-action (VLA) models, enabling them to execute precise actions in the 3D physical world without relying on explicit 3D inputs or depth estimators.

🛠️ Research Methods:

– The proposed method, Spatial Forcing (SF), aligns intermediate visual embeddings of VLA models with geometric representations from pretrained 3D foundation models to implicitly develop spatial comprehension.

💬 Research Conclusions:

– The Spatial Forcing approach achieves state-of-the-art results in both simulated and real-world environments, surpassing traditional 2D- and 3D-based VLA models. It also accelerates training by up to 3.8 times and enhances data efficiency across various robotic tasks.

👉 Paper link: https://huggingface.co/papers/2510.12276

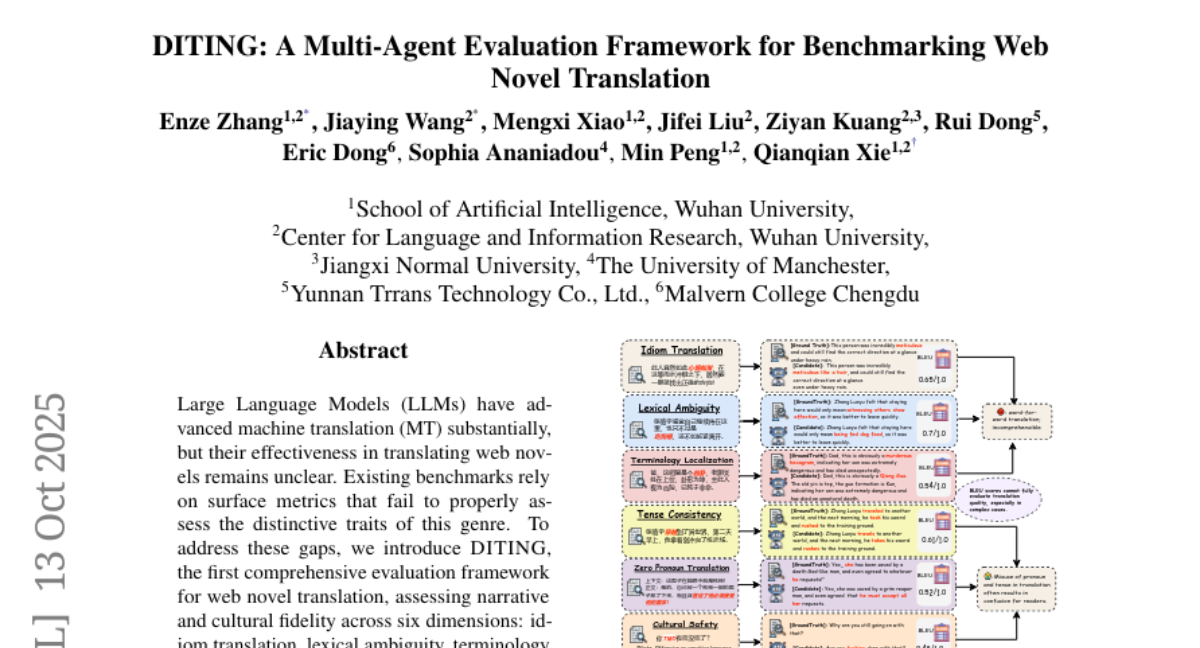

2. DITING: A Multi-Agent Evaluation Framework for Benchmarking Web Novel Translation

🔑 Keywords: DITING, AgentEval, Large Language Models, Machine Translation, Cultural Fidelity

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce DITING and AgentEval to assess the quality of web novel translations and demonstrate the superiority of Chinese-trained LLMs over larger foreign models.

🛠️ Research Methods:

– Developed DITING, an evaluation framework assessing six dimensions of translation quality, supported by over 18K Chinese-English sentence pairs.

– Proposed AgentEval, a reasoning-driven multi-agent evaluation framework simulating expert deliberation, achieving the highest correlation with human judgments.

💬 Research Conclusions:

– Chinese-trained LLMs outperform larger foreign counterparts in web novel translations.

– DeepSeek-V3 offers the most faithful and stylistically coherent translations among evaluated models.

👉 Paper link: https://huggingface.co/papers/2510.09116

3. Advancing End-to-End Pixel Space Generative Modeling via Self-supervised Pre-training

🔑 Keywords: Pixel-space generative models, Diffusion models, Consistency models, ImageNet, Training framework

💡 Category: Generative Models

🌟 Research Objective:

– Introduce a novel two-stage training framework to close the performance and efficiency gap of pixel-space diffusion and consistency models compared to latent-space models.

🛠️ Research Methods:

– Pre-train encoders on clean images in the first stage and align them with a deterministic sampling trajectory.

– In the second stage, integrate a randomly initialized decoder and fine-tune the complete model end-to-end for diffusion and consistency models.

💬 Research Conclusions:

– Achieved a significant improvement in generation quality and efficiency on the ImageNet dataset with diffusion and consistency models, surpassing prior pixel-space methods.

– The model achieved remarkable FID scores on ImageNet-256 and ImageNet-512, highlighting its superiority in high-resolution image training without relying on pre-trained VAEs or diffusion models.

👉 Paper link: https://huggingface.co/papers/2510.12586

4. Scaling Language-Centric Omnimodal Representation Learning

🔑 Keywords: Multimodal large language models, Contrastive learning, Cross-modal alignment, Language-Centric Omnimodal Embedding, Generation-Representation Scaling Law

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To explore the advantages of multimodal large language models (MLLMs) fine-tuned with contrastive learning and propose a Language-Centric Omnimodal Embedding framework (LCO-Emb).

🛠️ Research Methods:

– Analysis of anisotropy and kernel similarity structure to confirm latent cross-modal alignment within MLLM representations. Proposed the LCO-Emb framework leveraging generative and contrastive learning capabilities.

💬 Research Conclusions:

– Demonstrated that MLLMs achieve state-of-the-art performance across modalities and identified a Generation-Representation Scaling Law (GRSL) linking generative and representation capabilities. Provided theoretical explanations and empirical validation on complex tasks.

👉 Paper link: https://huggingface.co/papers/2510.11693

5. Robot Learning: A Tutorial

🔑 Keywords: Robot learning, Reinforcement Learning, Behavioral Cloning, Language-conditioned models, Autonomous systems

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To explore the shift from classical model-based methods to data-driven, learning-based paradigms in robot learning, focusing on the development of versatile, language-conditioned models across diverse tasks and robot types.

🛠️ Research Methods:

– The tutorial covers foundational principles such as Reinforcement Learning and Behavioral Cloning to create generalist models.

💬 Research Conclusions:

– This work serves as a guide equipped with conceptual understanding and practical tools, including examples implemented in lerobot, to empower researchers and practitioners to contribute to advancements in robot learning.

👉 Paper link: https://huggingface.co/papers/2510.12403

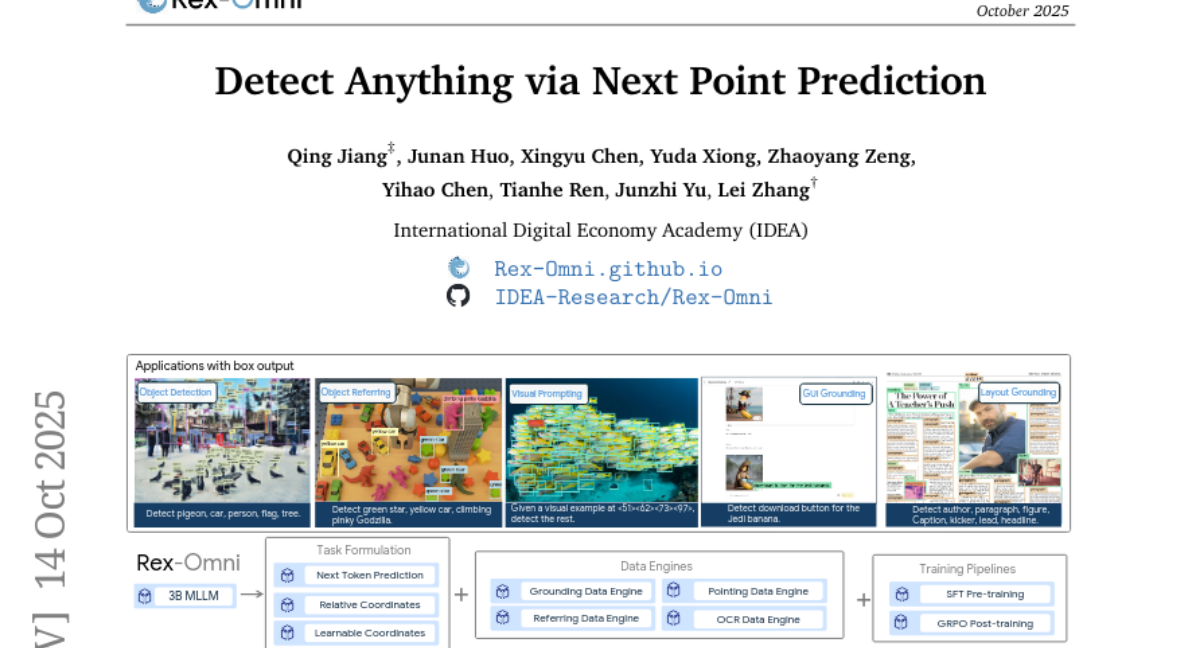

6. Detect Anything via Next Point Prediction

🔑 Keywords: MLLMs, Zero-Shot Setting, Coordination Prediction, Rex-Omni

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance object detection by overcoming the limitations of traditional coordinate regression models using a novel approach with MLLMs.

🛠️ Research Methods:

– Implementation of Rex-Omni, a 3B-scale MLLM with state-of-the-art performance facilitated by special token usage, construction of high-quality data engines, and a two-stage training process including GRPO-based reinforcement post-training.

💬 Research Conclusions:

– Rex-Omni achieves competitive performance compared to traditional models in a zero-shot setting and expands capabilities to various visual perception tasks through its inherent language understanding.

👉 Paper link: https://huggingface.co/papers/2510.12798

7. A Survey of Vibe Coding with Large Language Models

🔑 Keywords: Vibe Coding, Large Language Models (LLMs), Human-AI Collaboration, AI-Generated Implementations, Coding Agents

💡 Category: Human-AI Interaction

🌟 Research Objective:

– The paper aims to explore the under-explored effectiveness and challenges of “Vibe Coding” with large language models, offering a comprehensive and systematic review of this new development methodology.

🛠️ Research Methods:

– The study involves a systematic analysis of over 1000 research papers, examining the components of Vibe Coding, including LLMs for coding, coding agents, development environments, and feedback mechanisms. It formalizes Vibe Coding using a Constrained Markov Decision Process and synthesizes existing practices into five distinct development models.

💬 Research Conclusions:

– The research establishes that successful Vibe Coding relies on more than just agent capabilities; it is contingent upon systematic context engineering, robust development environments, and collaborative development models between humans and agents.

👉 Paper link: https://huggingface.co/papers/2510.12399

8. FlashVSR: Towards Real-Time Diffusion-Based Streaming Video Super-Resolution

🔑 Keywords: Diffusion models, Video Super-Resolution, Real-Time Performance, FlashVSR, VSR-120K

💡 Category: Computer Vision

🌟 Research Objective:

– The paper aims to make diffusion-based video super-resolution (VSR) practical by enhancing efficiency, scalability, and achieving real-time performance through the proposed FlashVSR framework.

🛠️ Research Methods:

– FlashVSR utilizes a combination of a three-stage distillation pipeline, locality-constrained sparse attention, and a tiny conditional decoder to optimize streaming super-resolution and reduce computational redundancy.

💬 Research Conclusions:

– FlashVSR delivers state-of-the-art performance with substantial speed improvements, up to 12x over existing one-step diffusion VSR models, and supports ultra-high resolutions, making it feasible for real-time applications.

👉 Paper link: https://huggingface.co/papers/2510.12747

9. Dr.LLM: Dynamic Layer Routing in LLMs

🔑 Keywords: Large Language Models, Dynamic routing, Monte Carlo Tree Search, Adaptive-depth methods, Transformers

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to improve computational efficiency and accuracy of Large Language Models (LLMs) by introducing a novel framework called Dr.LLM that dynamically routes layers without altering the base weights.

🛠️ Research Methods:

– The research employs a retrofittable framework using lightweight per-layer routers trained with explicit supervision via Monte Carlo Tree Search (MCTS) to determine optimal layer configurations.

💬 Research Conclusions:

– Dr.LLM effectively boosts accuracy by up to +3.4% while saving computation, demonstrated on tasks like ARC and DART. It generalizes well to out-of-domain tasks and surpasses previous routing methods by up to +7.7% without significant accuracy loss, achieving efficient and accurate inference.

👉 Paper link: https://huggingface.co/papers/2510.12773

10. Temporal Alignment Guidance: On-Manifold Sampling in Diffusion Models

🔑 Keywords: Diffusion models, Generative Models, Temporal Alignment Guidance (TAG), Sample Fidelity

💡 Category: Generative Models

🌟 Research Objective:

– The paper aims to address the off-manifold errors in diffusion models, particularly when arbitrary guidance compromises sample fidelity.

🛠️ Research Methods:

– The authors propose the use of a time predictor to estimate deviations from the desired data manifold, and introduce a novel guidance mechanism called Temporal Alignment Guidance (TAG) to correct these deviations during the generation process.

💬 Research Conclusions:

– Experiments show that TAG consistently enhances sample alignment with the desired manifold at every timestep, significantly improving generation quality across various tasks.

👉 Paper link: https://huggingface.co/papers/2510.11057

11. ERA: Transforming VLMs into Embodied Agents via Embodied Prior Learning and Online Reinforcement Learning

🔑 Keywords: Embodied AI, Vision Language Models, Reinforcement Learning, Scalable Embodied Intelligence

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The paper aims to address the shortcomings of current Vision Language Models (VLMs) used in embodied AI by introducing a new framework called Embodied Reasoning Agent (ERA).

🛠️ Research Methods:

– A two-stage framework is developed, consisting of Embodied Prior Learning and an online Reinforcement Learning pipeline, to integrate prior knowledge and enhance agent performance.

💬 Research Conclusions:

– The ERA-3B framework outperforms existing large models and baselines with substantial improvements, demonstrating strong generalization to unseen tasks and offering insights for scalable embodied intelligence.

👉 Paper link: https://huggingface.co/papers/2510.12693

12. SRUM: Fine-Grained Self-Rewarding for Unified Multimodal Models

🔑 Keywords: Unified Multimodal Models, self-rewarding, visual understanding, visual generation, feedback loop

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To address the gap between visual understanding and visual generation in Unified Multimodal Models (UMMs) by introducing a self-rewarding framework named SRUM.

🛠️ Research Methods:

– Implementation of SRUM, a post-training framework, which uses the model’s understanding module as an internal “evaluator” to create a feedback loop for self-improvement.

💬 Research Conclusions:

– SRUM enhances the capabilities and generalization of UMMs, improving performance significantly on benchmarks such as T2I-CompBench and T2I-ReasonBench, thus establishing a new paradigm for model self-enhancement through self-rewarding.

👉 Paper link: https://huggingface.co/papers/2510.12784

13. UniFusion: Vision-Language Model as Unified Encoder in Image Generation

🔑 Keywords: UniFusion, VIS-Language Model (VLM), Layerwise Attention Pooling (LAP), VERIFI, cross-modality knowledge transfer

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Develop a unified multimodal encoder using a diffusion-based generative model to enhance cross-modal reasoning and knowledge transfer without substantial computational resources.

🛠️ Research Methods:

– Introduce UniFusion leveraging a frozen large vision-language model (VLM) and Layerwise Attention Pooling (LAP) for conditioning diffusion generative models.

– Implement VERIFI to improve text-image alignment during in-model prompt rewriting by utilizing VLM’s reasoning capabilities.

💬 Research Conclusions:

– UniFusion exhibits superior performance in text-image alignment and visual information transfer from VLM, essential for editing tasks.

– The model demonstrates enhanced generalization capabilities, achieving zero-shot generalization to multiple image references when trained on single image editing, reinforcing the effectiveness of the unified encoder design.

👉 Paper link: https://huggingface.co/papers/2510.12789

14. Deconstructing Attention: Investigating Design Principles for Effective Language Modeling

🔑 Keywords: Transformer language models, dot-product attention mechanism, token mixing, sequence-dependency, mathematical form, cooperative effect

💡 Category: Natural Language Processing

🌟 Research Objective:

– To systematically analyze and deconstruct the essential design principles of attention mechanisms in Transformer models, particularly focusing on token mixing, sequence dependency, and mathematical form.

🛠️ Research Methods:

– The study designs controlled variants of Transformer models to selectively relax key design principles in a systematic manner, applying changes uniformly across all layers or in hybrid architectures.

💬 Research Conclusions:

– Token mixing is crucial as its absence results in models performing near-random. The exact mathematical form and sequence dependency can be relaxed considerably when preserved in some layers, showcasing a cooperative effect where even failing variants can boost performance when combined with standard attention.

👉 Paper link: https://huggingface.co/papers/2510.11602

15. Boundary-Guided Policy Optimization for Memory-efficient RL of Diffusion Large Language Models

🔑 Keywords: Boundary-Guided Policy Optimization, reinforcement learning, diffusion large language models, memory-efficient, likelihood approximation

💡 Category: Generative Models

🌟 Research Objective:

– The research aims to improve reinforcement learning for diffusion large language models by efficiently approximating likelihoods with a memory-efficient lower bound.

🛠️ Research Methods:

– The study introduces Boundary-Guided Policy Optimization (BGPO), a memory-efficient RL algorithm that maximizes a specially constructed lower bound of the ELBO-based objective.

💬 Research Conclusions:

– BGPO significantly outperforms previous RL algorithms for diffusion large language models in tasks such as math problem solving, code generation, and planning, due to its ability to adopt a large MC sample size and improve likelihood approximations.

👉 Paper link: https://huggingface.co/papers/2510.11683

16. Memory as Action: Autonomous Context Curation for Long-Horizon Agentic Tasks

🔑 Keywords: Large Language Models, Reinforcement Learning, Memory Management, Trajectory Fractures, Context Curation

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To reframe working memory management for language models as a learnable, intrinsic capability within a novel framework called Memory-as-Action.

🛠️ Research Methods:

– Introduced Dynamic Context Policy Optimization to handle trajectory fractures by segmenting trajectories at memory action points and employing trajectory-level advantages.

💬 Research Conclusions:

– Demonstrated that optimizing task reasoning and memory management jointly reduces computational consumption and enhances task performance through adaptive context curation strategies.

👉 Paper link: https://huggingface.co/papers/2510.12635

17. Verbalized Sampling: How to Mitigate Mode Collapse and Unlock LLM Diversity

🔑 Keywords: Typicality bias, Mode collapse, Verbalized Sampling, Creative writing, Preference data

💡 Category: Generative Models

🌟 Research Objective:

– Address mode collapse in LLMs by identifying the role of typicality bias in preference data and introducing Verbalized Sampling to enhance diversity.

🛠️ Research Methods:

– Theoretical formalization of typicality bias, empirical verification on preference datasets, and introduction of a training-free prompting strategy, Verbalized Sampling, to improve model diversity.

💬 Research Conclusions:

– Verbalized Sampling increases diversity significantly without sacrificing accuracy and safety, especially in creative applications such as writing, dialogues, and data generation. The strategy is particularly beneficial for more capable models.

👉 Paper link: https://huggingface.co/papers/2510.01171

18. HoneyBee: Data Recipes for Vision-Language Reasoners

🔑 Keywords: Vision-Language Models, Reasoning Capabilities, Data Curation, Chain-of-Thought, HoneyBee

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to understand the principles behind constructing effective Vision-Language (VL) reasoning datasets and the impact of various data curation approaches on VL reasoning capabilities.

🛠️ Research Methods:

– The paper introduces different data curation strategies, controlling training and evaluation setups to assess their effects. It explores context sources, data interventions, and scaling strategies involving images, questions, and Chain-of-Thought (CoT) solutions.

💬 Research Conclusions:

– The study discovers that the source of context in training data significantly influences VLM performance. Interventions using auxiliary signals and text-only reasoning improve outcomes, while scaling data dimensions enhances reasoning capabilities. The HoneyBee dataset is introduced, leading to superior VLM performance compared to state-of-the-art models.

👉 Paper link: https://huggingface.co/papers/2510.12225

19. SAIL-Embedding Technical Report: Omni-modal Embedding Foundation Model

🔑 Keywords: Multimodal Embedding Models, CLIP-based, Vision-Language Models, Cross-Modal Proficiency, Recommendation Scenarios

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– This research introduces SAIL-Embedding, an omni-modal embedding foundation model designed to overcome challenges in real-world applications and business scenarios, including limited modality support and industrial domain gaps.

🛠️ Research Methods:

– The study employs tailored training strategies and architectural design, featuring a multi-stage training scheme. This includes content-aware progressive training to enhance model adaptability, and collaboration-aware recommendation enhancement training to improve multimodal representation in recommendation scenarios.

💬 Research Conclusions:

– Experimental results demonstrate that SAIL-Embedding achieves state-of-the-art (SOTA) performance in various retrieval tasks. In online experiments, notable increases in Lifetime (LT) indicators for recommendation experiences were observed, such as significant gains in the Douyin-Selected scenario and improvements in AUC for the Douyin feed rank model.

👉 Paper link: https://huggingface.co/papers/2510.12709

20. DeepMMSearch-R1: Empowering Multimodal LLMs in Multimodal Web Search

🔑 Keywords: Multimodal Large Language Models, DeepMMSearch-R1, Multi-turn Web Searches, Online Reinforcement Learning, DeepMMSearchVQA

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Present DeepMMSearch-R1, a multimodal LLM that performs on-demand, multi-turn web searches and crafts dynamic image and text queries.

🛠️ Research Methods:

– Employ a two-stage training pipeline involving supervised finetuning and online reinforcement learning, using a novel dataset, DeepMMSearchVQA, created through automated pipelines and web search tools.

💬 Research Conclusions:

– Demonstrate the superiority of DeepMMSearch-R1 through extensive experiments on knowledge-intensive benchmarks and provide insights to advance multimodal web search.

👉 Paper link: https://huggingface.co/papers/2510.12801

21. R-WoM: Retrieval-augmented World Model For Computer-use Agents

🔑 Keywords: Large Language Models, world models, retrieval-augmented, long-horizon simulations

💡 Category: Foundations of AI

🌟 Research Objective:

– Investigate the appropriateness of LLMs as world models for enhancing agent decision-making in digital environments.

🛠️ Research Methods:

– Probing LLMs on tasks like next-state identification, full-procedure planning alignment, and milestone transition recognition.

💬 Research Conclusions:

– LLMs effectively predict immediate next states but degrade in performance for longer simulations.

– R-WoM improves long-horizon simulations by integrating up-to-date external knowledge, showing significant performance enhancements.

👉 Paper link: https://huggingface.co/papers/2510.11892

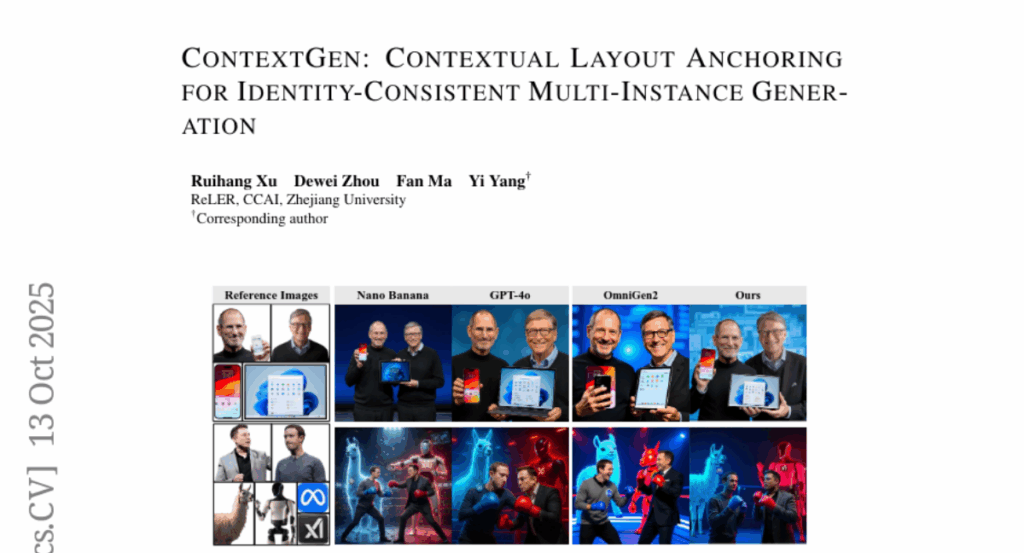

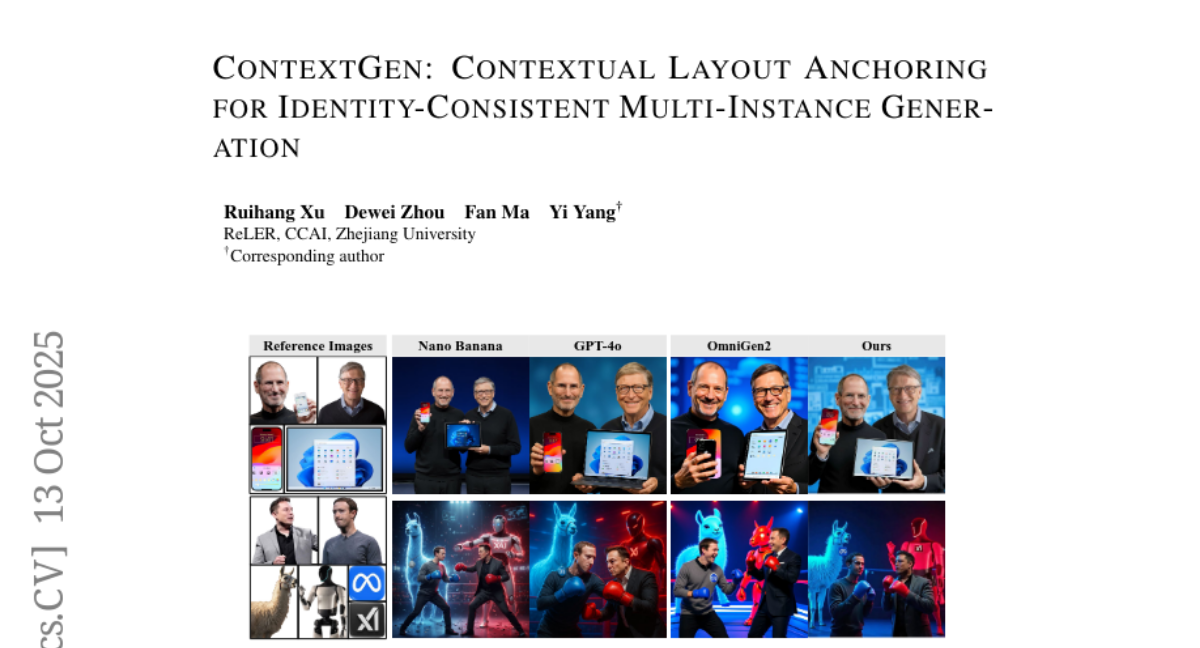

22. ContextGen: Contextual Layout Anchoring for Identity-Consistent Multi-Instance Generation

🔑 Keywords: ContextGen, Diffusion Transformer, Contextual Layout Anchoring, Identity Consistency Attention, IMIG-100K

💡 Category: Generative Models

🌟 Research Objective:

– The primary focus is to improve multi-instance image generation by overcoming challenges in object layout control and identity preservation using the ContextGen framework.

🛠️ Research Methods:

– ContextGen introduces the Contextual Layout Anchoring (CLA) mechanism for precise object placement and the Identity Consistency Attention (ICA) mechanism for maintaining identity consistency of multiple instances.

💬 Research Conclusions:

– ContextGen outperforms existing models, achieving state-of-the-art results in control precision, identity fidelity, and visual quality, demonstrated through extensive experiments on the IMIG-100K dataset.

👉 Paper link: https://huggingface.co/papers/2510.11000

23. LLM Reasoning for Machine Translation: Synthetic Data Generation over Thinking Tokens

🔑 Keywords: Large Reasoning Models, Machine Translation, Intermediate Tokens, Chain of Thought

💡 Category: Natural Language Processing

🌟 Research Objective:

– To explore the impact and benefits of generating intermediate tokens when performing Machine Translation across multiple language pairs and setups with different resource levels.

🛠️ Research Methods:

– Investigation of the use of synthetic chain of thought (CoT) explanations to fine-tune large reasoning models for step-by-step translation.

– Assessment of modular translation-specific prompting strategies to construct intermediate tokens.

💬 Research Conclusions:

– “Thinking tokens” are not beneficial for improving the performance of large reasoning models in machine translation.

– Fine-tuning with synthetic CoT explanations does not outperform standard input-output fine-tuning methods.

– The presence of translation attempts within intermediate tokens is crucial for their contribution during fine-tuning.

– Teacher refinement of target translations or expansion of parallel corpora is more effective than distilling CoT explanations for machine translation models.

👉 Paper link: https://huggingface.co/papers/2510.11919

24. MLLM as a UI Judge: Benchmarking Multimodal LLMs for Predicting Human Perception of User Interfaces

🔑 Keywords: Multimodal large language models, User interface design, Early evaluators, Human preferences

💡 Category: Human-AI Interaction

🌟 Research Objective:

– To explore the capability of multimodal large language models (MLLMs) as early evaluators in the user interface (UI) design process to mimic human preferences in subjective evaluations.

🛠️ Research Methods:

– Benchmarking models like GPT-4o, Claude, and Llama across 30 interfaces using data from a crowdsourcing platform to evaluate alignment with human judgments on various UI factors.

💬 Research Conclusions:

– MLLMs are able to approximate human preferences in some aspects of UI evaluation but show divergence in others, highlighting both their utility and limitations as tools for early UX research.

👉 Paper link: https://huggingface.co/papers/2510.08783

25. The Geometry of Reasoning: Flowing Logics in Representation Space

🔑 Keywords: Large Language Models, Geometric Framework, Reasoning Flows, Interpretability

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– This study aims to explore how Large Language Models (LLMs) “think” by utilizing a novel geometric framework to model their reasoning as flows within the representation space.

🛠️ Research Methods:

– The researchers disentangle logical structure from semantics using natural deduction propositions with varied semantic carriers. They connect reasoning with geometric quantities like position, velocity, and curvature to enable formal analysis.

💬 Research Conclusions:

– LLM reasoning corresponds to smooth flows in representation space, with logical statements acting as local controllers of flow velocities. The study provides a conceptual foundation and practical tools for understanding reasoning phenomena, enhancing interpretability of LLM behavior.

👉 Paper link: https://huggingface.co/papers/2510.09782

26. What If : Understanding Motion Through Sparse Interactions

🔑 Keywords: Flow Poke Transformer, Scene Dynamics, Motion Prediction, Multi-Modal Scene Motion, Local Interactions

💡 Category: Computer Vision

🌟 Research Objective:

– To develop a novel framework, the Flow Poke Transformer, for predicting the distribution of local motion based on sparse interactions termed “pokes”.

🛠️ Research Methods:

– Introduced a new interpretable framework that directly accesses multi-modal scene motion, emphasizing the dependency on physical interactions and inherent uncertainties.

💬 Research Conclusions:

– The Flow Poke Transformer surpasses traditional methods in various tasks, achieving superior results in dense face motion generation and articulated object motion estimation, demonstrating its flexibility and versatility.

👉 Paper link: https://huggingface.co/papers/2510.12777

27. SR-Scientist: Scientific Equation Discovery With Agentic AI

🔑 Keywords: SR-Scientist, LLMs, AI Native, data analysis, reinforcement learning

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To elevate Large Language Models from merely being equation proposers to autonomous AI scientists capable of generating, implementing, and optimizing scientific equations.

🛠️ Research Methods:

– Utilization of an end-to-end reinforcement learning framework to enhance the capabilities of the AI by using a set of tools for data analysis and equation evaluation.

💬 Research Conclusions:

– SR-Scientist outperforms baseline methods by a margin of 6% to 35% across multiple science disciplines, showcases robustness to noise, generalization to out-of-domain data, and exhibits symbolic accuracy.

👉 Paper link: https://huggingface.co/papers/2510.11661

28. One Life to Learn: Inferring Symbolic World Models for Stochastic Environments from Unguided Exploration

🔑 Keywords: OneLife, probabilistic programming, stochastic environment, conditionally-activated programmatic laws, dynamic computation graph

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To develop and evaluate the OneLife framework’s ability to model world dynamics in complex, stochastic environments.

🛠️ Research Methods:

– Utilization of conditionally-activated programmatic laws within a probabilistic programming framework.

– Introduction of a new evaluation protocol that measures state ranking and state fidelity.

– Implementation and testing on Crafter-OO, an object-oriented symbolic state environment.

💬 Research Conclusions:

– OneLife successfully learns key environment dynamics with minimal interaction and outperforms strong baselines in state ranking and fidelity.

– Demonstrates effective planning ability through simulated rollouts, identifying superior strategies.

– Establishes a foundation for autonomously constructing programmatic world models of unknown, complex environments.

👉 Paper link: https://huggingface.co/papers/2510.12088

29. Cautious Weight Decay

🔑 Keywords: Cautious Weight Decay, Weight Decay, Optimizer-Agnostic, Locally Pareto-Optimal, Large-Scale Models

💡 Category: Machine Learning

🌟 Research Objective:

– Introduce Cautious Weight Decay (CWD) to enhance optimizer performance by selectively applying weight decay.

🛠️ Research Methods:

– Utilizes CWD as a drop-in, optimizer-agnostic modification compatible with AdamW, Lion, and Muon, requiring no new hyperparameters or tuning.

💬 Research Conclusions:

– CWD improves accuracy and loss in large-scale language model pre-training and ImageNet classification, achieving results at million- to billion-parameter scales.

👉 Paper link: https://huggingface.co/papers/2510.12402