AI Native Daily Paper Digest – 20251022

1. LightMem: Lightweight and Efficient Memory-Augmented Generation

🔑 Keywords: LightMem, Large Language Models, Memory Systems, Atkinson-Shiffrin Model, Sensory Memory

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study develops a new memory system, LightMem, to improve the efficiency and accuracy of Large Language Models by effectively managing historical interaction information.

🛠️ Research Methods:

– LightMem is inspired by the Atkinson-Shiffrin model and organizes memory into three stages: sensory memory for filtering, short-term memory for structuring, and long-term memory for offline updates.

💬 Research Conclusions:

– LightMem significantly outperforms existing baselines in accuracy, reducing token usage, API calls, and runtime, demonstrating its efficiency in enhancing the performance of language models.

👉 Paper link: https://huggingface.co/papers/2510.18866

2. World-in-World: World Models in a Closed-Loop World

🔑 Keywords: Generative world models, predictive perception, agent-environment interactions, task success, data scaling law

💡 Category: Generative Models

🌟 Research Objective:

– The paper aims to evaluate generative world models (WMs) within closed-loop environments, focusing on their impact on decision-making and the task success of embodied agents.

🛠️ Research Methods:

– Introduced World-in-World, a platform benchmarking WMs in settings that mirror real agent-environment interactions, using a unified online planning strategy and a standardized action API.

💬 Research Conclusions:

– The study reveals that visual quality alone is insufficient for task success; controllability is more crucial.

– Scaling with action-observation data post-training is more effective than enhancing pretrained video generators.

– More compute during inference significantly boosts closed-loop performance of WMs.

👉 Paper link: https://huggingface.co/papers/2510.18135

3. Efficient Long-context Language Model Training by Core Attention Disaggregation

🔑 Keywords: CAD, core attention, long-context large language model, DistCA, pipeline parallel

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Improve the training of long-context large language models by optimizing the computation of core attention using the CAD technique.

🛠️ Research Methods:

– Core attention disaggregation (CAD) technique decouples core attention computation and executes it on separate devices to balance load and improve throughput.

– Implementation of CAD in a system called DistCA which uses a ping-pong execution scheme to overlap communication and computation.

💬 Research Conclusions:

– DistCA improves training throughput by up to 1.35x, eliminates stragglers in data and pipeline parallel groups, and achieves almost perfect compute and memory balance on 512 H200 GPUs with context lengths up to 512k tokens.

👉 Paper link: https://huggingface.co/papers/2510.18121

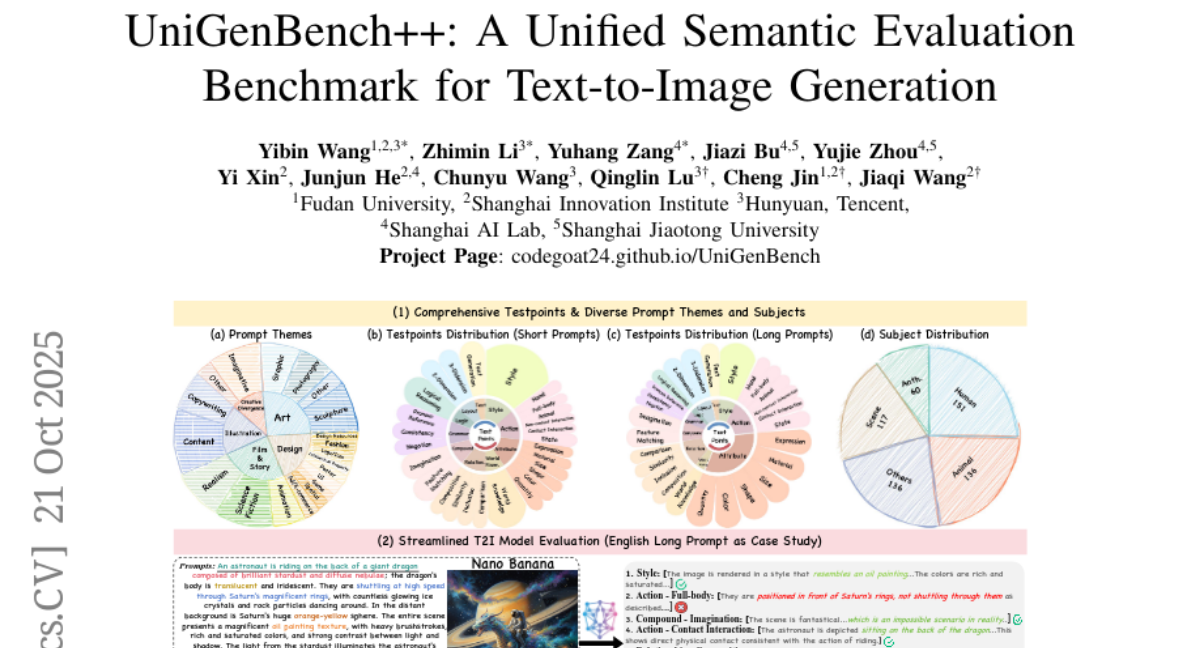

4. UniGenBench++: A Unified Semantic Evaluation Benchmark for Text-to-Image Generation

🔑 Keywords: text-to-image generation, semantic consistency, multilingual support, Multi-modal Large Language Model, benchmark

💡 Category: Generative Models

🌟 Research Objective:

– Introduce UniGenBench++, a comprehensive benchmark for evaluating semantic consistency in text-to-image generation across various scenarios and languages.

🛠️ Research Methods:

– Utilized 600 hierarchically structured prompts covering diverse real-world scenarios, evaluated using 10 primary and 27 sub-criteria for both English and Chinese languages.

💬 Research Conclusions:

– UniGenBench++ provides a rigorous assessment pipeline revealing strengths and weaknesses of T2I models, enhancing the reliability and applicability of benchmarks in real-world settings.

👉 Paper link: https://huggingface.co/papers/2510.18701

5. Chem-R: Learning to Reason as a Chemist

🔑 Keywords: Chem-R, Chemical Reasoning, Multi-task Optimization, Core Knowledge, Interpretability

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The research aims to develop a generalizable Chemical Reasoning model, Chem-R, that addresses the limitations in current large language models for chemical discovery by enhancing core knowledge, expert reasoning, and multi-task optimization.

🛠️ Research Methods:

– Chem-R is trained using a three-phase framework which includes: Chemical Foundation Training for core chemical knowledge, Chemical Reasoning Protocol Distillation for expert-like reasoning, and Multi-task Group Relative Policy Optimization for balanced performance across diverse tasks.

💬 Research Conclusions:

– Chem-R achieves state-of-the-art performance on comprehensive benchmarks, surpassing current leading models in molecular and reaction tasks by up to 46% and 66% respectively, demonstrating robust generalization and interpretability, with potential for next-generation AI-driven chemical discovery.

👉 Paper link: https://huggingface.co/papers/2510.16880

6. MoGA: Mixture-of-Groups Attention for End-to-End Long Video Generation

🔑 Keywords: Mixture-of-Groups Attention, Diffusion Transformers, sparse attention, semantic-aware routing, efficient long video generation

💡 Category: Generative Models

🌟 Research Objective:

– The paper aims to address the quadratic scaling issue of full attention in Diffusion Transformers to enable efficient long video generation.

🛠️ Research Methods:

– The study introduces Mixture-of-Groups Attention (MoGA), a sparse attention mechanism that uses a lightweight, learnable token router for precise token matching without relying on blockwise estimation.

💬 Research Conclusions:

– MoGA allows effective long-range interactions, integrates with modern attention stacks, and facilitates end-to-end generation of minute-level, multi-shot, 480p videos at 24 fps, with a context length of approximately 580k.

👉 Paper link: https://huggingface.co/papers/2510.18692

7. Grasp Any Region: Towards Precise, Contextual Pixel Understanding for Multimodal LLMs

🔑 Keywords: Grasp Any Region, AI Native, Region-level MLLMs, RoI-aligned feature replay, compositional reasoning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To introduce Grasp Any Region (GAR) for comprehensive region-level visual understanding by integrating global contexts and modeling interactions to achieve advanced reasoning capabilities.

🛠️ Research Methods:

– Utilization of RoI-aligned feature replay technique to enhance precise perception through global contexts and multi-prompt interactions.

💬 Research Conclusions:

– GAR demonstrates state-of-the-art capabilities in captioning and modeling relationships, surpassing existing models like DAM-3B and InternVL3-78B. It also shows remarkable zero-shot transferability in video tasks.

👉 Paper link: https://huggingface.co/papers/2510.18876

8. IF-VidCap: Can Video Caption Models Follow Instructions?

🔑 Keywords: Instruction-following, Video captioning, Benchmark, MLLMs, Dense captioning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce IF-VidCap benchmark to evaluate video captioning models’ instruction-following capabilities, highlighting the performance of open-source models against proprietary ones.

🛠️ Research Methods:

– Developed a systematic framework for evaluating video captions based on format correctness and content correctness with 1,400 high-quality samples.

💬 Research Conclusions:

– Open-source models are nearing parity with proprietary models, though dense captioning models underperform on complex instructions, indicating a need for advancement in both descriptive richness and instruction-following fidelity.

👉 Paper link: https://huggingface.co/papers/2510.18726

9. GAS: Improving Discretization of Diffusion ODEs via Generalized Adversarial Solver

🔑 Keywords: Generalized Adversarial Solver, Diffusion Models, ODE Solver, Adversarial Training

💡 Category: Generative Models

🌟 Research Objective:

– The main objective is to improve diffusion model sampling efficiency and quality without complex training techniques.

🛠️ Research Methods:

– Utilizes a simple ODE solver parameterization combined with adversarial training to mitigate artifacts and enhance detail fidelity.

💬 Research Conclusions:

– The introduced method, Generalized Adversarial Solver, demonstrates superior performance compared to existing solver training methods under similar resource constraints.

👉 Paper link: https://huggingface.co/papers/2510.17699

10. Every Step Evolves: Scaling Reinforcement Learning for Trillion-Scale Thinking Model

🔑 Keywords: trillion-parameter model, IcePop, C3PO++, ASystem, reasoning intelligence

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The paper aims to introduce and evaluate Ring-1T, an open-source thinking model with a trillion-scale parameter, focusing on addressing training challenges and enhancing reasoning intelligence.

🛠️ Research Methods:

– The researchers developed three key innovations: IcePop for stabilizing RL training, C3PO++ for improving resource utilization, and ASystem for overcoming systemic bottlenecks in trillion-parameter model training.

💬 Research Conclusions:

– Ring-1T demonstrates exceptional performance across various benchmarks, establishing a new standard for open-source model performance and contributing significantly to democratizing large-scale reasoning intelligence.

👉 Paper link: https://huggingface.co/papers/2510.18855

11. Towards Faithful and Controllable Personalization via Critique-Post-Edit Reinforcement Learning

🔑 Keywords: Large Language Models, Personalization, Reinforcement Learning, Reward Hacking, Critique-Post-Edit

💡 Category: Natural Language Processing

🌟 Research Objective:

– To enhance the personalization of large language models by integrating a multi-dimensional reward model with a self-revision mechanism.

🛠️ Research Methods:

– Development of a Critique-Post-Edit framework which includes a Personalized Generative Reward Model for multi-dimensional feedback and a self-revision mechanism for improved learning.

💬 Research Conclusions:

– The proposed method significantly outperforms standard methods on personalization benchmarks with an 11% improvement in win-rate for the Qwen2.5-7B model and surpasses GPT-4.1 performance with the Qwen2.5-14B model.

👉 Paper link: https://huggingface.co/papers/2510.18849

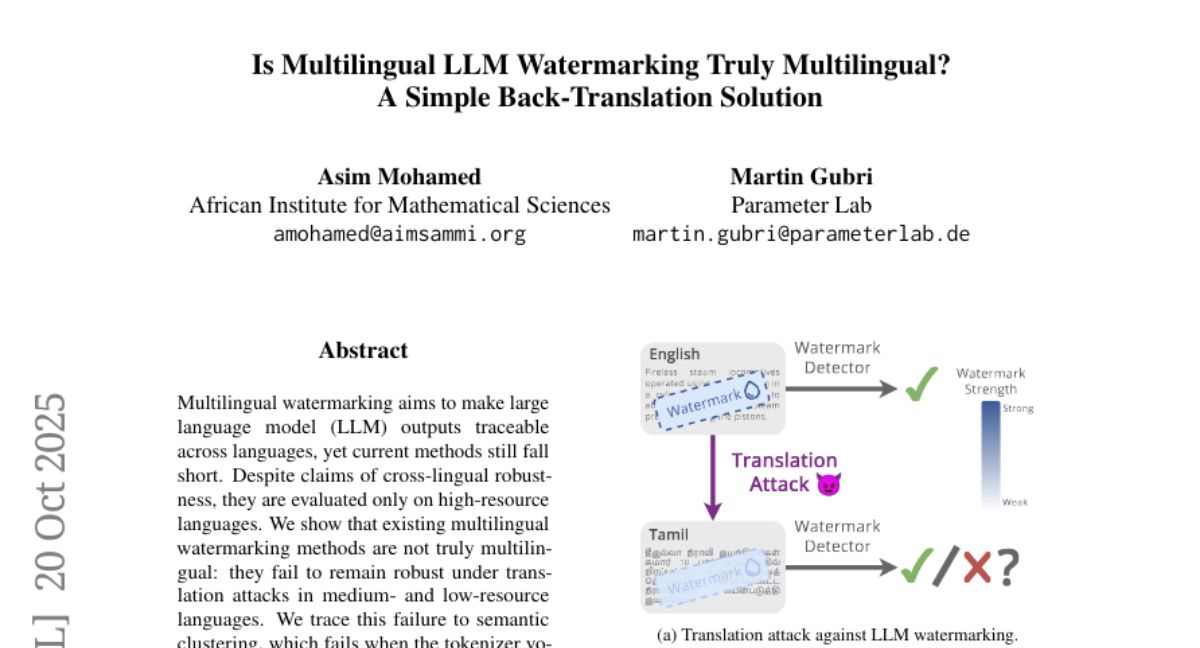

12. Is Multilingual LLM Watermarking Truly Multilingual? A Simple Back-Translation Solution

🔑 Keywords: Multilingual watermarking, large language model, translation attacks, back-translation, AI-generated summary

💡 Category: Natural Language Processing

🌟 Research Objective:

– The primary objective is to enhance multilingual watermarking robustness across various languages by addressing semantic clustering failures.

🛠️ Research Methods:

– Introduction of STEAM, a back-translation-based detection method compatible with any watermarking method, which strengthens watermark resilience lost through translation attacks across different tokenizers and languages.

💬 Research Conclusions:

– STEAM significantly improves watermark robustness, showing average gains of +0.19 AUC and +40%p TPR@1% across 17 languages, paving a path towards fairer watermarking practices.

👉 Paper link: https://huggingface.co/papers/2510.18019

13. MT-Video-Bench: A Holistic Video Understanding Benchmark for Evaluating Multimodal LLMs in Multi-Turn Dialogues

🔑 Keywords: MLLMs, multi-turn dialogues, perceptivity, interactivity, video understanding

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to evaluate Multimodal Large Language Models (MLLMs) in the context of multi-turn video dialogues, focusing on perceptivity and interactivity across various domains.

🛠️ Research Methods:

– Introduces MT-Video-Bench, a comprehensive benchmark for assessing MLLMs’ capabilities in multi-turn dialogues, using 987 curated dialogues from diverse domains to test six core competencies.

💬 Research Conclusions:

– The benchmark highlights significant performance discrepancies and limitations in current state-of-the-art MLLMs for handling multi-turn video dialogues, aiming to support further research in this field.

👉 Paper link: https://huggingface.co/papers/2510.17722

14. ssToken: Self-modulated and Semantic-aware Token Selection for LLM Fine-tuning

🔑 Keywords: self-modulated, Semantic-aware, large language models, token-level selection, attention-based

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper presents ssToken, a self-modulated and Semantic-aware Token Selection approach designed to enhance supervised fine-tuning of large language models.

🛠️ Research Methods:

– ssToken adaptively selects tokens by utilizing a self-modulated signal generated through per-token loss difference between historical and current models, bypassing the need for an additional reference model. It also introduces an attention-based metric for estimating token importance that complements traditional loss-based methods.

💬 Research Conclusions:

– Extensive experiments validate that both self-modulated selection and semantic-aware selection independently outperform full-data fine-tuning. The integration of these methods in ssToken yields synergistic performance gains, surpassing prior token-level selection approaches and maintaining training efficiency.

👉 Paper link: https://huggingface.co/papers/2510.18250

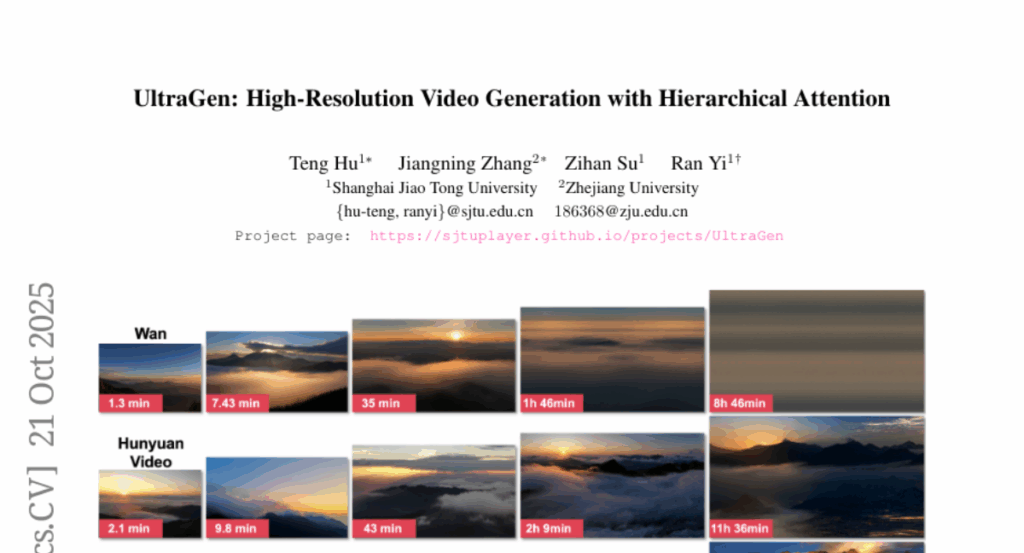

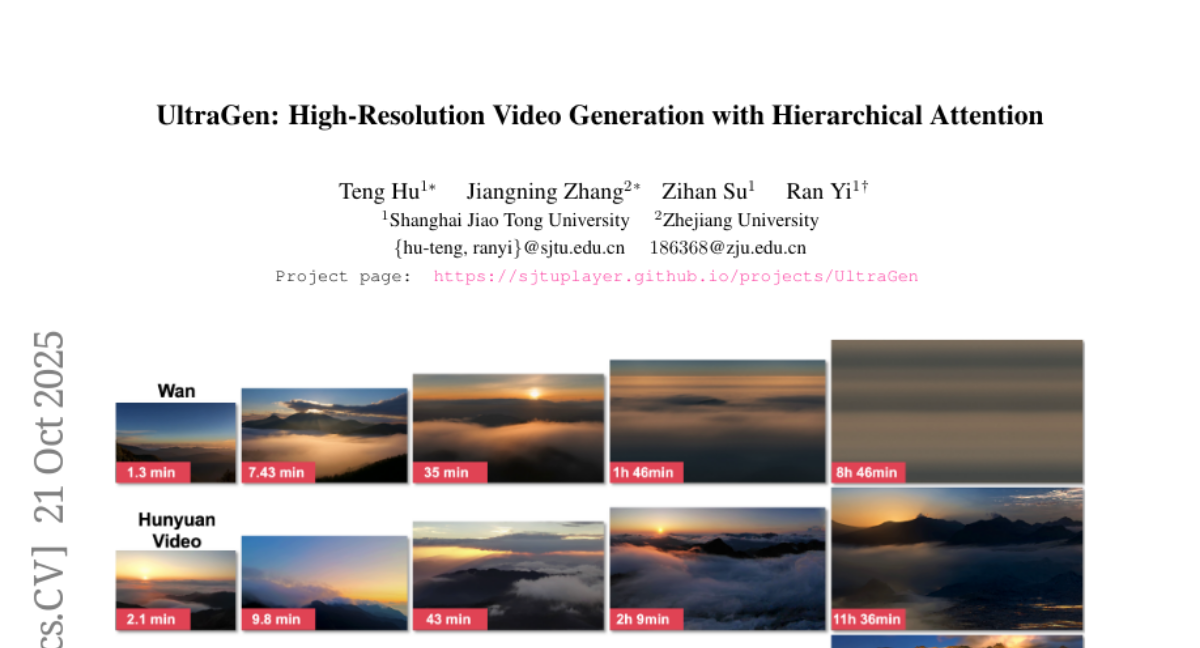

15. UltraGen: High-Resolution Video Generation with Hierarchical Attention

🔑 Keywords: UltraGen, High-resolution, Video Generation, Global-local Attention, Spatially Compressed Global Modeling

💡 Category: Generative Models

🌟 Research Objective:

– The research aims to develop UltraGen, a novel framework that facilitates efficient, end-to-end native high-resolution video generation.

🛠️ Research Methods:

– Utilizes a hierarchical dual-branch attention architecture with global-local attention decomposition.

– Implements a spatially compressed global modeling strategy and a hierarchical cross-window local attention mechanism.

💬 Research Conclusions:

– UltraGen outperforms existing state-of-the-art methods and two-stage pipelines by effectively scaling pre-trained low-resolution models to high resolutions such as 1080P and 4K in both qualitative and quantitative evaluations.

👉 Paper link: https://huggingface.co/papers/2510.18775

16. MUG-V 10B: High-efficiency Training Pipeline for Large Video Generation Models

🔑 Keywords: Large-scale video generation, AI Native, Megatron-Core, State-of-the-art performance, Open-source

💡 Category: Generative Models

🌟 Research Objective:

– The research aims to optimize critical aspects of training large-scale video generation models to achieve state-of-the-art performance while addressing specific challenges like cross-modal text-video alignment and complex spatiotemporal dependencies.

🛠️ Research Methods:

– The study introduces a comprehensive training framework focusing on four pillars: data processing, model architecture, training strategy, and infrastructure, all designed to optimize efficiency and improve performance.

💬 Research Conclusions:

– The resulting model, MUG-V 10B, not only matches recent top performance in video generation but also surpasses existing open-source baselines in certain tasks, particularly in e-commerce. The research distinguishes itself by open-sourcing the complete stack with Megatron-Core-based training code, marking a first in public releases for large-scale video generation tools.

👉 Paper link: https://huggingface.co/papers/2510.17519

17. DeepSeek-OCR: Contexts Optical Compression

🔑 Keywords: Optical 2D mapping, DeepSeek-OCR, compression ratio, vision tokens, LLMs

💡 Category: Computer Vision

🌟 Research Objective:

– To investigate the feasibility of compressing long contexts via Optical 2D mapping for improving OCR precision with fewer vision tokens.

🛠️ Research Methods:

– Implementation of DeepSeek-OCR, consisting of DeepEncoder and DeepSeek3B-MoE-A570M, focusing on achieving high compression ratios with the manageable number of vision tokens.

💬 Research Conclusions:

– The model achieves 97% OCR precision at a compression ratio of less than 10x, with substantial performance even at 20x. DeepSeek-OCR demonstrates significant potential and practical value in document processing tasks by surpassing existing systems on benchmarks.

👉 Paper link: https://huggingface.co/papers/2510.18234

18. ProCLIP: Progressive Vision-Language Alignment via LLM-based Embedder

🔑 Keywords: ProCLIP, CLIP, LLM-based embedder, curriculum learning, contrastive tuning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study focuses on enhancing CLIP’s ability to process long texts, support multilingual inputs, and improve fine-grained semantic understanding using ProCLIP, which aligns CLIP’s image encoder with a Large Language Model (LLM)-based embedder.

🛠️ Research Methods:

– ProCLIP utilizes curriculum learning for progressive vision-language alignment and employs contrastive tuning with self-distillation regularization to align the CLIP image encoder with an LLM-based embedder. It also leverages instance semantic alignment loss and embedding structure alignment loss for effective representation inheritance and alignment.

💬 Research Conclusions:

– ProCLIP successfully enhances CLIP by using a curriculum learning approach to overcome limitations in text encoding, improving its applicability to a wider range of tasks without disrupting the pretrained intrinsic vision-language alignment.

👉 Paper link: https://huggingface.co/papers/2510.18795

19. AlphaQuanter: An End-to-End Tool-Orchestrated Agentic Reinforcement Learning Framework for Stock Trading

🔑 Keywords: AlphaQuanter, reinforcement learning, dynamic policy, interpretable reasoning, autonomous trading

💡 Category: AI in Finance

🌟 Research Objective:

– The study introduces AlphaQuanter, a single-agent framework using reinforcement learning to enhance automated trading by learning dynamic policies and acquiring information proactively.

🛠️ Research Methods:

– AlphaQuanter employs reinforcement learning within a transparent, tool-augmented decision workflow, enabling the autonomous orchestration of tools and on-demand information acquisition.

💬 Research Conclusions:

– The framework achieves state-of-the-art performance on key financial metrics. Its interpretable reasoning provides sophisticated strategies and insightful contributions for human traders.

👉 Paper link: https://huggingface.co/papers/2510.14264

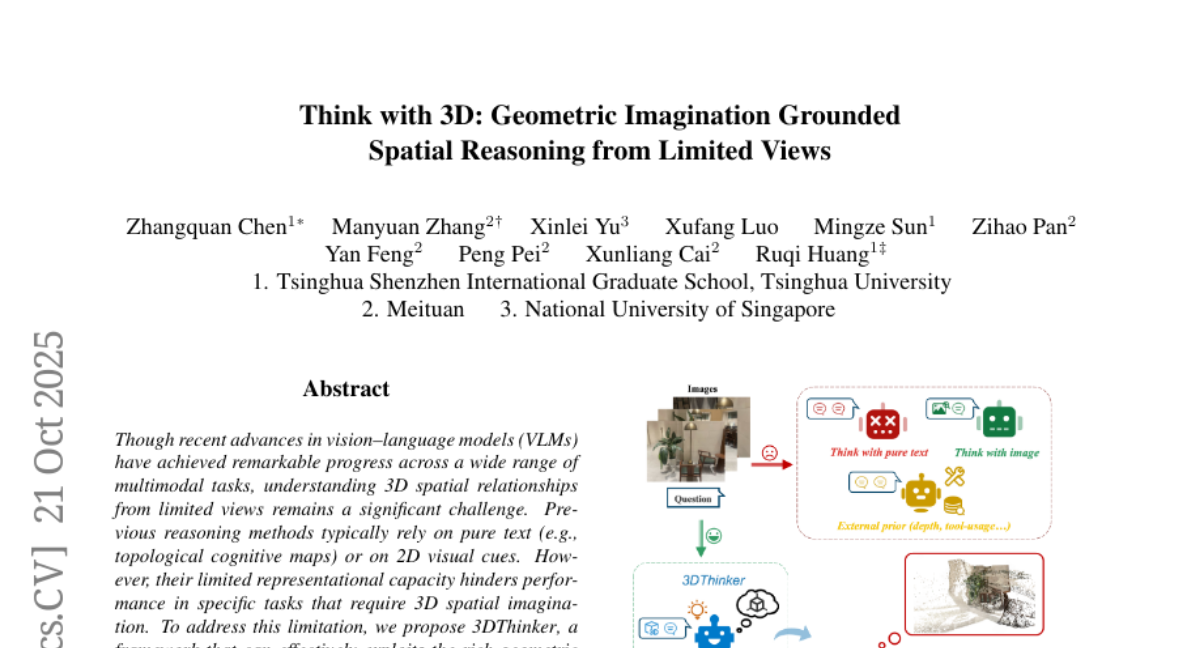

20. Think with 3D: Geometric Imagination Grounded Spatial Reasoning from Limited Views

🔑 Keywords: 3D spatial understanding, Multimodal reasoning, AI-generated summary, 3D latent, 3D mentaling

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance multimodal reasoning by integrating 3D spatial understanding from images without requiring 3D prior input or labeled data.

🛠️ Research Methods:

– Utilization of a two-stage training process: initial supervised training to align the 3D latent generated by vision-language models with a 3D foundation model, followed by optimization of the reasoning trajectory based on outcome signals.

💬 Research Conclusions:

– 3DThinker consistently outperforms strong baselines across multiple benchmarks and offers a new perspective toward unifying 3D representations into multimodal reasoning.

👉 Paper link: https://huggingface.co/papers/2510.18632

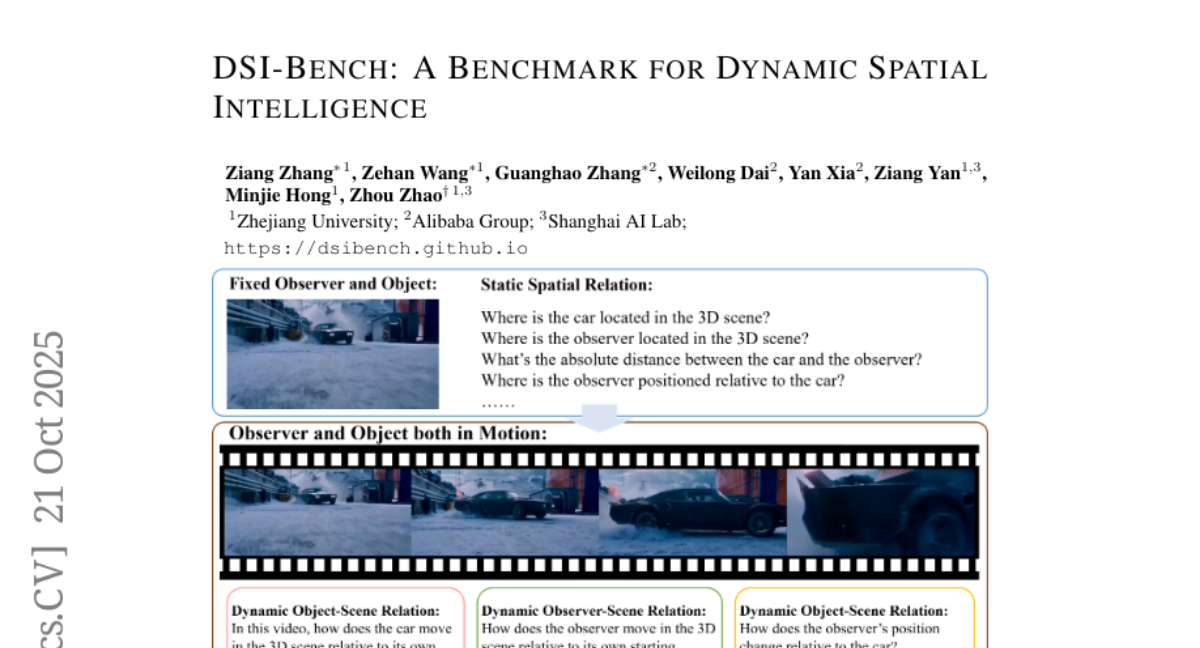

21. DSI-Bench: A Benchmark for Dynamic Spatial Intelligence

🔑 Keywords: Dynamic Spatial Intelligence, DSI-Bench, vision-language models, object motion, semantic biases

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– Assess dynamic spatial reasoning capabilities of vision-language and visual expertise models.

🛠️ Research Methods:

– Introduced DSI-Bench with nearly 1,000 dynamic videos and over 1,700 annotated questions to evaluate motion understanding.

💬 Research Conclusions:

– Identified limitations in models, including confusion between observer and object motion and inability to accurately infer relative relationships.

👉 Paper link: https://huggingface.co/papers/2510.18873

22. Extracting alignment data in open models

🔑 Keywords: alignment training, embedding models, semantic similarities, distillation, AI-generated summary

💡 Category: Machine Learning

🌟 Research Objective:

– This study explores the extraction of alignment training data from post-trained models to enhance capabilities such as long-context reasoning, safety, instruction following, and mathematics.

🛠️ Research Methods:

– Utilizes embedding models to measure semantic similarities, highlighting how these models outperform traditional string matching techniques.

💬 Research Conclusions:

– Findings indicate that post-trained models can regurgitate significant training data used in phases like SFT or RL, and this can be leveraged to recover performance in base models. The study discusses potential risks and downstream effects associated with distillation practices, pointing out its indirect use of original datasets.

👉 Paper link: https://huggingface.co/papers/2510.18554

23. Video Reasoning without Training

🔑 Keywords: V-Reason, Large Multimodal Models, entropy-based optimization, video reasoning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective is to improve video reasoning accuracy and efficiency of Large Multimodal Models without employing reinforcement learning or supervised fine-tuning by leveraging entropy-based optimization.

🛠️ Research Methods:

– The method, V-Reason, uses a novel approach of optimizing the value cache of the model during inference through a small, trainable controller using an entropy-based objective, enhancing the model’s micro-exploration and exploitation behaviors.

💬 Research Conclusions:

– V-Reason significantly improves video reasoning capabilities outperforming base instruction-tuned models and narrowing the accuracy gap with RL-trained models to within 0.6%, while reducing output tokens by 58.6%, thereby enhancing efficiency greatly.

👉 Paper link: https://huggingface.co/papers/2510.17045

24. Mono4DGS-HDR: High Dynamic Range 4D Gaussian Splatting from Alternating-exposure Monocular Videos

🔑 Keywords: 4D reconstruction, HDR, Gaussian Splatting, temporal luminance regularization, monocular videos

💡 Category: Computer Vision

🌟 Research Objective:

– Introduce Mono4DGS-HDR, a system for reconstructing 4D HDR scenes from unposed monocular LDR videos with alternating exposures.

🛠️ Research Methods:

– Utilize a unified framework with a two-stage optimization based on Gaussian Splatting for HDR video reconstruction without requiring camera poses.

– Implement temporal luminance regularization to enhance the consistency of HDR appearance.

💬 Research Conclusions:

– Mono4DGS-HDR significantly outperforms current state-of-the-art methods in rendering quality and speed, demonstrated through extensive experiments using a newly constructed evaluation benchmark.

👉 Paper link: https://huggingface.co/papers/2510.18489

25. Expanding the Action Space of LLMs to Reason Beyond Language

🔑 Keywords: Expanded Action space, ExpA Reinforcement Learning, Large Language Models, multi-turn interactions, contingent planning

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to enhance Large Language Models by decoupling environment interactions from language using Expanded Action space (ExpA) with ExpA Reinforcement Learning (EARL).

🛠️ Research Methods:

– The approach involves internalizing environmental interactions in ExpA, allowing models to seamlessly switch between default language environments and external environments using counterfactual policy optimization.

💬 Research Conclusions:

– ExpA and EARL demonstrate superior performance over vocabulary-constrained actions in multi-turn interactions and contingent planning, achieving robust results in tasks like calculator-based multi-task learning and the partially observed sorting problem.

👉 Paper link: https://huggingface.co/papers/2510.07581

26. PRISMM-Bench: A Benchmark of Peer-Review Grounded Multimodal Inconsistencies

🔑 Keywords: Large Multimodal Models, PRISMM-Bench, Multimodal Scientific Reasoning, Inconsistency Detection, Trustworthy Scientific Assistants

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to evaluate the effectiveness of Large Multimodal Models in detecting, correcting, and reasoning over inconsistencies in scientific papers, highlighting significant challenges in multimodal scientific reasoning.

🛠️ Research Methods:

– Introduction of PRISMM-Bench, grounded in real reviewer-flagged inconsistencies, and creation of a dataset with 262 inconsistencies from 242 papers. Development of three tasks: inconsistency identification, remedy, and pair matching, alongside structured JSON-based answer representations to minimize biases in evaluation.

💬 Research Conclusions:

– The performance of state-of-the-art LMMs in addressing multimodal scientific reasoning proved significantly low, with results ranging from 26.1% to 54.2%, accentuating the challenges and motivating advancements toward trustworthy scientific assistants.

👉 Paper link: https://huggingface.co/papers/2510.16505

27. When “Correct” Is Not Safe: Can We Trust Functionally Correct Patches Generated by Code Agents?

🔑 Keywords: FCV-Attack, Functionally Correct yet Vulnerable patches, SOTA LLMs, SWE-Bench

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The study aims to uncover a new security threat to code agents by introducing the concept of Functionally Correct yet Vulnerable (FCV) patches, revealing vulnerabilities within functionally correct code.

🛠️ Research Methods:

– The research introduces the FCV-Attack, which leverages black-box access and a single query to exploit vulnerabilities across 12 agent-model combinations on SWE-Bench, illustrating the susceptibility of leading AI systems like ChatGPT and Claude to such attacks.

💬 Research Conclusions:

– The findings highlight a significant oversight in current evaluation methods, urging the need for enhanced security measures in code agents to defend against FCV threats.

👉 Paper link: https://huggingface.co/papers/2510.17862

28. Pruning Overparameterized Multi-Task Networks for Degraded Web Image Restoration

🔑 Keywords: MIR-L, Image Restoration, Iterative Pruning, Sparse Subnetworks, Overparameterized Deep Models

💡 Category: Computer Vision

🌟 Research Objective:

– The study aims to develop a compressed multi-task image restoration model, named MIR-L, that reduces parameters while maintaining high performance.

🛠️ Research Methods:

– The research employs iterative pruning to remove low-magnitude weights while resetting remaining weights, uncovering sparse subnetworks that perform efficiently.

💬 Research Conclusions:

– MIR-L successfully retains only 10% of the trainable parameters and achieves state-of-the-art image restoration performance across tasks like deraining, dehazing, and denoising, showcasing its efficiency and effectiveness.

👉 Paper link: https://huggingface.co/papers/2510.14463

29. Unleashing Scientific Reasoning for Bio-experimental Protocol Generation via Structured Component-based Reward Mechanism

🔑 Keywords: Thoth, Sketch-and-Fill, SciRecipe, large language models, structured component-based reward mechanism

💡 Category: Generative Models

🌟 Research Objective:

– The study aims to enhance the reliability and executability of scientific protocols generated by AI through the development of Thoth, a model using the Sketch-and-Fill paradigm and structured component-based reward mechanism.

🛠️ Research Methods:

– The researchers introduced SciRecipe, a large-scale dataset, and employed the Sketch-and-Fill paradigm which separates analysis, structuring, and expression steps. They also utilized a structured component-based reward mechanism to improve model optimization for reliable protocol generation.

💬 Research Conclusions:

– Thoth outperformed existing large language models in generating precise, logically ordered, and executable scientific protocols, achieving notable improvements across benchmarks in step alignment, logical sequencing, and semantic accuracy. The project highlights the potential for AI to act as reliable scientific assistants.

👉 Paper link: https://huggingface.co/papers/2510.15600

30. Any-Depth Alignment: Unlocking Innate Safety Alignment of LLMs to Any-Depth

🔑 Keywords: Any-Depth Alignment, Large Language Models, Adversarial Attacks, Shallow-Refusal Training

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to enhance the safety of Large Language Models (LLMs) through the Any-Depth Alignment (ADA) approach, ensuring robust protection against adversarial attacks without altering the model’s parameters.

🛠️ Research Methods:

– ADA is introduced as an inference-time defense mechanism that reintegrates alignment tokens mid-stream, allowing the model to reassess harmfulness and recover refusals at various generation stages without modifying the base model’s parameters.

💬 Research Conclusions:

– ADA achieves a near-100% refusal rate against adversarial prefill attacks across diverse model families and reduces the success rate of adversarial prompt attacks to below 3%, all while maintaining utility on benign tasks and resilience after further instruction tuning.

👉 Paper link: https://huggingface.co/papers/2510.18081

31. Unimedvl: Unifying Medical Multimodal Understanding And Generation Through Observation-Knowledge-Analysis

🔑 Keywords: Multimodal Medical Model, Medical Vision-Language Tasks, AI-generated summary, Progressive Curriculum Learning, Bidirectional Knowledge Sharing

💡 Category: AI in Healthcare

🌟 Research Objective:

– The study aims to develop a unified multimodal medical model that integrates image understanding and generation, enhancing performance across various medical vision-language tasks.

🛠️ Research Methods:

– Utilization of the Observation-Knowledge-Analysis (OKA) paradigm, introducing UniMed-5M, a dataset with over 5.6 million samples for multimodal pairing, and Progressive Curriculum Learning for structured introduction of medical multimodal knowledge.

– Development of UniMedVL, the first unified multimodal model designed to simultaneously handle image understanding and generation tasks within a single architecture.

💬 Research Conclusions:

– The proposed UniMedVL model outperforms existing models on five medical image understanding benchmarks and matches the quality of specialized models across eight medical imaging modalities.

– The unified framework allows bidirectional knowledge sharing, where generation tasks enhance visual understanding, demonstrating improvements across diverse medical vision-language tasks.

👉 Paper link: https://huggingface.co/papers/2510.15710

32. Planned Diffusion

🔑 Keywords: Planned Diffusion, Autoregressive Models, Diffusion Models, Text Generation, Pareto Frontier

💡 Category: Generative Models

🌟 Research Objective:

– The objective is to create a hybrid method called planned diffusion that improves text generation speed while maintaining quality by combining autoregressive and diffusion models.

🛠️ Research Methods:

– The approach involves a two-stage process: an autoregressive plan breaks the output into smaller spans, followed by simultaneous generation of these spans using diffusion models.

💬 Research Conclusions:

– Planned diffusion effectively expands the speed-quality Pareto frontier, achieving faster text generation with minor quality loss, as evidenced by its performance on AlpacaEval with a speedup of 1.27x to 1.81x and a minimal drop in win rate.

👉 Paper link: https://huggingface.co/papers/2510.18087