AI Native Daily Paper Digest – 20251104

1. Every Activation Boosted: Scaling General Reasoner to 1 Trillion Open Language Foundation

🔑 Keywords: Ling 2.0, Mixture-of-Experts, sparse activation, reasoning accuracy, computational efficiency

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– Present Ling 2.0, a reasoning-oriented language model that scales from billions to a trillion parameters, emphasizing high sparsity and computational efficiency.

🛠️ Research Methods:

– Utilize the Mixture-of-Experts paradigm along with innovative training techniques, including high-sparsity MoE, reinforcement-based fine-tuning, and full-scale FP8 training.

💬 Research Conclusions:

– Ling 2.0 establishes a new Pareto frontier by aligning sparse activation with reasoning objectives, thus providing an efficient foundation for future reasoning and thinking models.

👉 Paper link: https://huggingface.co/papers/2510.22115

2. Generalizing Test-time Compute-optimal Scaling as an Optimizable Graph

🔑 Keywords: Agent-REINFORCE, multi-LLM collaboration graph, Test-Time Scaling, probabilistic graph optimization, textual gradient

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Optimize multi-LLM collaboration graphs for better sample efficiency and search performance under accuracy and latency constraints.

🛠️ Research Methods:

– Reformulated the problem as probabilistic graph optimization and developed the Agent-REINFORCE framework that mirrors the REINFORCE pipeline by using sampling-feedback-update method.

💬 Research Conclusions:

– Agent-REINFORCE outperforms traditional and LLM-based baselines in sample efficiency and search performance, successfully identifying optimal graphs for accuracy and inference latency objectives.

👉 Paper link: https://huggingface.co/papers/2511.00086

3. The Underappreciated Power of Vision Models for Graph Structural Understanding

🔑 Keywords: Vision models, Graph Neural Networks, GraphAbstract, scale-invariant reasoning, global structural understanding

💡 Category: Foundations of AI

🌟 Research Objective:

– To explore the potential of vision models for understanding global graph structures compared to Graph Neural Networks (GNNs).

🛠️ Research Methods:

– Introduction of the GraphAbstract benchmark to evaluate models’ ability to perceive global graph properties like humans.

💬 Research Conclusions:

– Vision models outperform GNNs on tasks requiring holistic structural understanding and maintain generalizability across varying graph scales.

– GNNs struggle with global pattern abstraction and degrade with increasing graph size, highlighting the underutilized capabilities of vision models for tasks that involve global topological awareness.

👉 Paper link: https://huggingface.co/papers/2510.24788

4. UniLumos: Fast and Unified Image and Video Relighting with Physics-Plausible Feedback

🔑 Keywords: AI Native, RGB-space, flow matching, physical consistency, relighting quality

💡 Category: Computer Vision

🌟 Research Objective:

– The paper introduces UniLumos, a unified relighting framework that aims to enhance physical plausibility in image and video relighting by integrating RGB-space geometry feedback into a flow matching backbone.

🛠️ Research Methods:

– Utilizes depth and normal maps for supervising the model to align lighting effects with scene structure while employing path consistency learning to reduce computational costs.

– Develops a six-dimensional annotation protocol for capturing illumination attributes and proposes LumosBench for evaluating lighting controllability via large vision-language models.

💬 Research Conclusions:

– UniLumos demonstrates state-of-the-art relighting quality with improved physical consistency and achieves a 20x speedup in both image and video relighting processes.

👉 Paper link: https://huggingface.co/papers/2511.01678

5. ROVER: Benchmarking Reciprocal Cross-Modal Reasoning for Omnimodal Generation

🔑 Keywords: Reciprocal cross-modal reasoning, Unified multimodal models, Visual generation quality, Symbolic reasoning, Verbal prompts

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To evaluate reciprocal cross-modal reasoning in unified multimodal models using a new benchmark called ROVER, which assesses how effectively these models integrate text and image understanding.

🛠️ Research Methods:

– The study employs ROVER, a human-annotated benchmark with 1312 tasks and 1876 images, targeting reciprocal cross-modal reasoning through verbally and visually augmented tasks for image and verbal generation.

💬 Research Conclusions:

– Cross-modal reasoning plays a crucial role in determining visual generation quality, with interleaved models outperforming non-interleaved ones. Additionally, models struggle with symbolic reasoning, showing a gap between interpreting perceptual concepts and generating visual abstractions for symbolic tasks.

👉 Paper link: https://huggingface.co/papers/2511.01163

6. PHUMA: Physically-Grounded Humanoid Locomotion Dataset

🔑 Keywords: Motion Imitation, Humanoid Locomotion, Motion Capture Datasets, Physical Artifacts, Data Curation

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The research aims to improve motion imitation for humanoid robotics by creating a large-scale and physically reliable locomotion dataset, called PHUMA, which addresses issues found in traditional human video data such as physical artifacts.

🛠️ Research Methods:

– The methods involve using large-scale human video data with physics-constrained retargeting and careful data curation to eliminate physical artifacts. PHUMA ensures joint limits, ground contact, and eliminates foot skating to ensure physically reliable motions.

💬 Research Conclusions:

– The PHUMA dataset significantly outperforms existing datasets like Humanoid-X and AMASS in imitating a variety of humanlike behaviors. The policies trained using PHUMA demonstrated substantial improvements in tests such as imitation of unseen motion and path following with pelvis-only guidance.

👉 Paper link: https://huggingface.co/papers/2510.26236

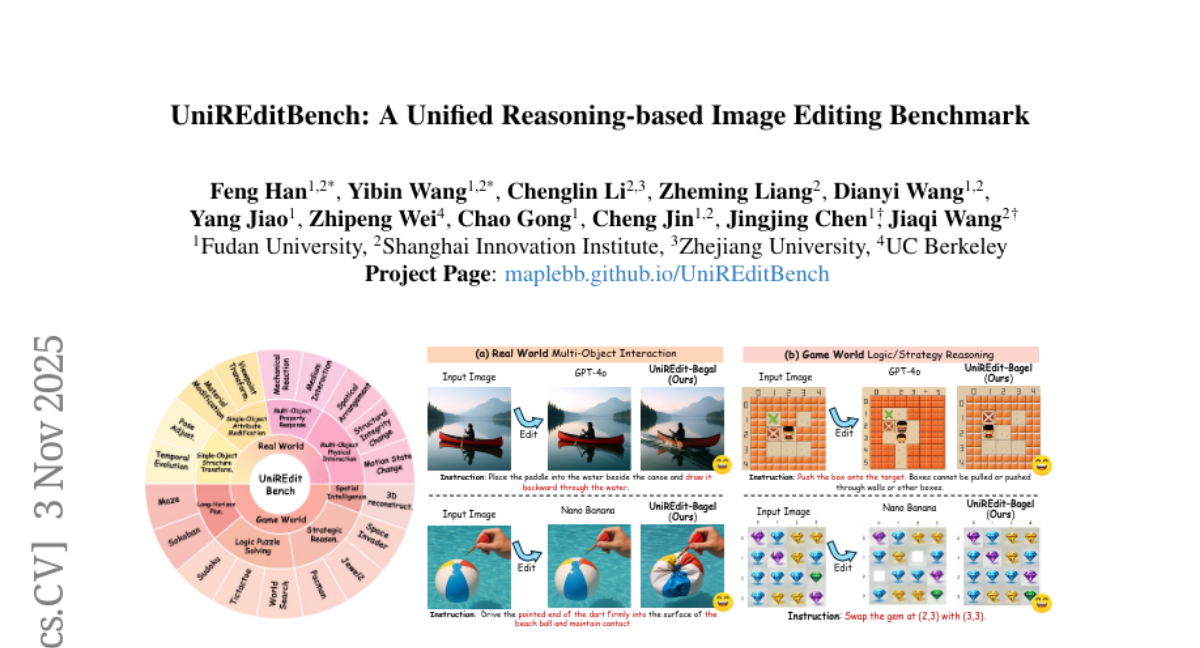

7. UniREditBench: A Unified Reasoning-based Image Editing Benchmark

🔑 Keywords: reasoning-based image editing, multi-object interactions, game-world scenarios, multimodal dual-reference evaluation, synthetic dataset

💡 Category: Computer Vision

🌟 Research Objective:

– To address limitations of existing benchmarks in image editing by proposing UniREditBench, focusing on reasoning-based evaluation in both real-life and game-world scenarios.

🛠️ Research Methods:

– Development of a comprehensive benchmark with 2,700 curated samples covering diverse scenarios and dimensions; introduction of multimodal dual-reference evaluation for improved reliability; construction of a large-scale synthetic dataset, UniREdit-Data-100K, for fine-tuning and evaluating models.

💬 Research Conclusions:

– UniREditBench demonstrates improvements in handling complex image editing tasks requiring implicit reasoning, providing robust assessment across existing and new benchmarks through open-source and closed-source model evaluation.

👉 Paper link: https://huggingface.co/papers/2511.01295

8. World Simulation with Video Foundation Models for Physical AI

🔑 Keywords: Physical AI, Cosmos-Predict2.5, Cosmos-Transfer2.5, Sim2Real, Text2World

💡 Category: Generative Models

🌟 Research Objective:

– Introduce advanced Physical AI models, Cosmos-Predict2.5 and Cosmos-Transfer2.5, that unify text, image, and video generation and enhance video quality and instruction alignment.

🛠️ Research Methods:

– Used a flow-based architecture and a Physical AI vision-language model, trained on 200 million curated video clips with reinforcement learning-based post-training.

💬 Research Conclusions:

– Cosmos-Predict2.5 and Cosmos-Transfer2.5 establish themselves as versatile tools for scaling embodied intelligence, with open resources provided to lower adoption barriers and foster innovation.

👉 Paper link: https://huggingface.co/papers/2511.00062

9. ToolScope: An Agentic Framework for Vision-Guided and Long-Horizon Tool Use

🔑 Keywords: ToolScope, Multimodal Large Language Models, Visual Question Answering, Perceive tool, AI-generated summary

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to enhance multimodal large language models (MLLMs) for visual question answering by integrating external tools through the ToolScope framework.

🛠️ Research Methods:

– ToolScope incorporates three primary components: Global Navigator for strategic guidance, Agentic Executor for integrating external tools like Search, Code, and Perceive for local perception, and Response Synthesizer for organizing reasoning outputs.

💬 Research Conclusions:

– ToolScope exhibits strong generalization capabilities, with up to +6.69% performance improvement across four VQA benchmarks in diverse domains.

👉 Paper link: https://huggingface.co/papers/2510.27363

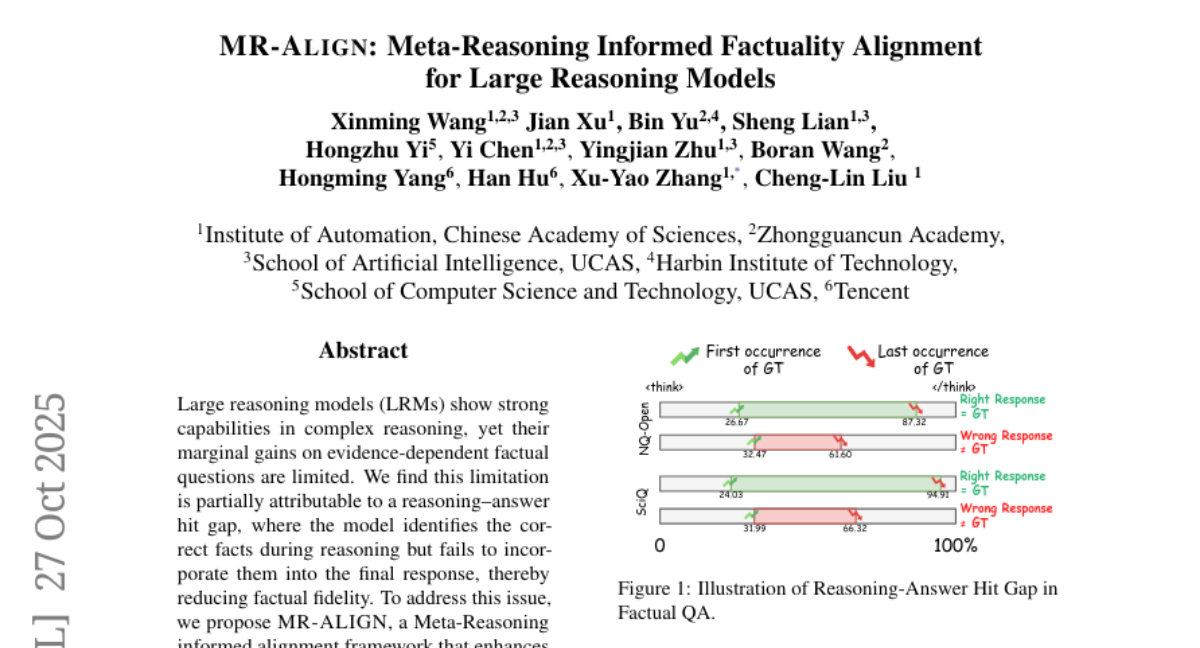

10. MR-Align: Meta-Reasoning Informed Factuality Alignment for Large Reasoning Models

🔑 Keywords: MR-ALIGN, Meta-Reasoning, Large reasoning models, factuality, coherent reasoning trajectories

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The objective is to enhance the factuality of Large reasoning models by aligning their reasoning process with MR-ALIGN, improving accuracy and reducing misleading outcomes.

🛠️ Research Methods:

– MR-ALIGN utilizes a Meta-Reasoning informed alignment framework to quantify state transition probabilities and construct a transition-aware implicit reward that promotes beneficial reasoning patterns while suppressing defective ones.

💬 Research Conclusions:

– Empirical evaluations show that MR-ALIGN consistently improves accuracy and truthfulness across factual QA datasets and benchmarks, highlighting the importance of aligning the reasoning process itself in advancing factuality in Large reasoning models.

👉 Paper link: https://huggingface.co/papers/2510.24794

11. OpenSIR: Open-Ended Self-Improving Reasoner

🔑 Keywords: OpenSIR, Self-Play, Large Language Models, Mathematical Discovery, Open-Ended Learning

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To enhance reasoning abilities in Large Language Models through a self-play framework that allows for open-ended problem generation and solving without external supervision.

🛠️ Research Methods:

– Implementing OpenSIR, a self-play framework where LLMs alternate between teacher and student roles to generate and solve novel problems by focusing on difficulty and diversity.

💬 Research Conclusions:

– OpenSIR significantly improves instruction models by advancing mathematical discovery and learning autonomously from basic to advanced mathematics.

– It demonstrated notable performance improvements in models like Llama-3.2-3B-Instruct and Gemma-2-2B-Instruct on benchmarks such as GSM8K and College Math.

👉 Paper link: https://huggingface.co/papers/2511.00602

12. Towards Universal Video Retrieval: Generalizing Video Embedding via Synthesized Multimodal Pyramid Curriculum

🔑 Keywords: Video Retrieval, Zero-Shot Generalization, Universal Video Retrieval Benchmark, Modality Pyramid

💡 Category: Computer Vision

🌟 Research Objective:

– To develop a framework with diagnostic benchmarks and data synthesis for achieving state-of-the-art zero-shot generalization in video retrieval.

🛠️ Research Methods:

– Introduction of the Universal Video Retrieval Benchmark (UVRB) to diagnose capability gaps.

– Development of a scalable synthesis workflow generating 1.55 million high-quality data pairs.

– Creation of the Modality Pyramid curriculum for training the General Video Embedder.

💬 Research Conclusions:

– The framework enables superior zero-shot generalization in video retrieval.

– Existing benchmarks inadequately predict general ability, with overlooked scenarios in retrieval being highlighted.

👉 Paper link: https://huggingface.co/papers/2510.27571

13. LongCat-Flash-Omni Technical Report

🔑 Keywords: LongCat-Flash-Omni, Omni-modal Model, AI-generated summary, Audio-visual Interaction, Multimodal Perception

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To introduce and evaluate LongCat-Flash-Omni, a 560 billion parameter omni-modal model designed for real-time audio-visual interactions.

🛠️ Research Methods:

– Curriculum-inspired progressive training strategy and modality-decoupled parallelism scheme to enhance multimodal capabilities.

💬 Research Conclusions:

– LongCat-Flash-Omni demonstrates state-of-the-art performance on omni-modal benchmarks and excels in various modality-specific tasks, achieving low-latency real-time audio-visual interaction.

👉 Paper link: https://huggingface.co/papers/2511.00279

14. TIR-Bench: A Comprehensive Benchmark for Agentic Thinking-with-Images Reasoning

🔑 Keywords: Visual Reasoning, Multimodal Models, TIR-Bench, Chain-of-Thought, Thinking-with-Images

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To develop and introduce TIR-Bench, a benchmark for assessing advanced visual reasoning in multimodal models, focusing on tasks that require tool use and thought processes involving images.

🛠️ Research Methods:

– Evaluation of 22 multimodal large language models using the TIR-Bench benchmark across 13 diverse tasks, focusing on the integration of novel tool use in visual processing.

💬 Research Conclusions:

– Demonstrates the universal challenge presented by TIR-Bench, indicating that strong performance necessitates genuine visual reasoning and thinking-with-images capabilities.

– A pilot study compares the effectiveness of direct versus agentic fine-tuning in enhancing model performance.

👉 Paper link: https://huggingface.co/papers/2511.01833

15. EBT-Policy: Energy Unlocks Emergent Physical Reasoning Capabilities

🔑 Keywords: EBT-Policy, Energy-Based Models, Robotics, Zero-Shot Recovery, Uncertainty-Aware Inference

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The research introduces EBT-Policy, an energy-based architecture, aiming to solve core challenges in robotic and real-world settings by improving robustness and reducing computational cost.

🛠️ Research Methods:

– EBT-Policy leverages energy landscapes to enhance robustness and reduce exposure bias, with the model demonstrating effectiveness both in high-dimensional spaces and real-world tasks.

💬 Research Conclusions:

– EBT-Policy consistently outperforms diffusion-based policies in robotic tasks, requiring less training and computation, and shows emergent capabilities such as zero-shot recovery and dynamic compute allocation.

👉 Paper link: https://huggingface.co/papers/2510.27545

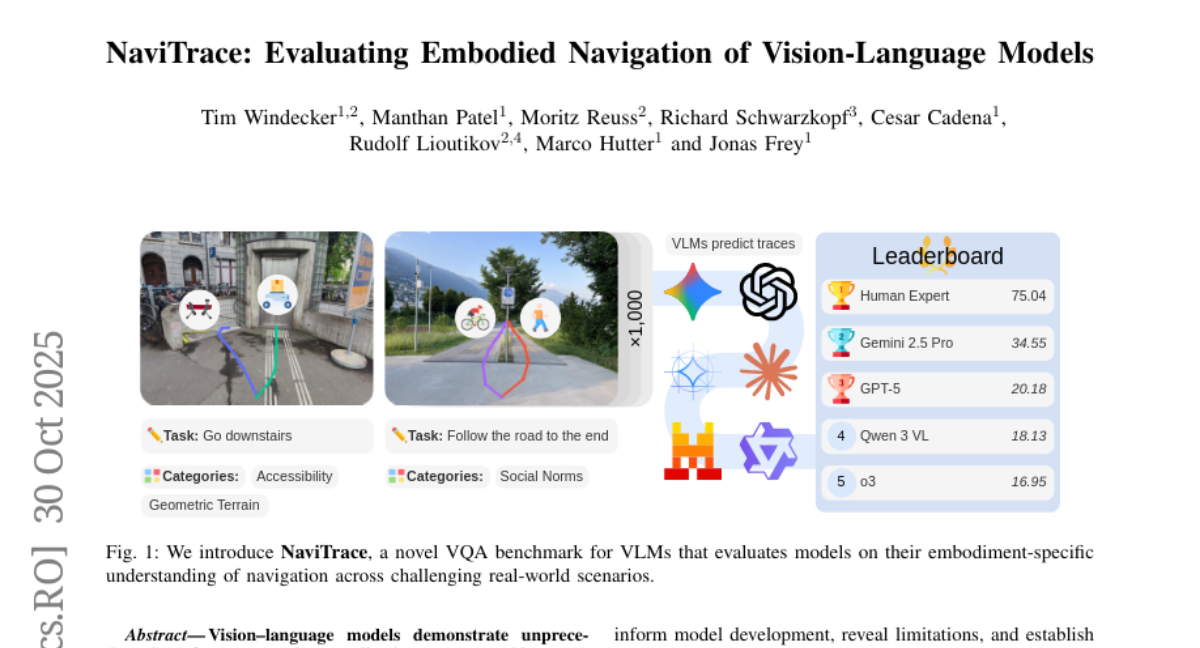

16. NaviTrace: Evaluating Embodied Navigation of Vision-Language Models

🔑 Keywords: NaviTrace, Visual Question Answering, robotic navigation, Vision-language models, semantic-aware trace score

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The paper introduces NaviTrace, a benchmark designed to evaluate robotic navigation capabilities through a semantic-aware trace score.

🛠️ Research Methods:

– Evaluation of eight state-of-the-art Vision-language models using a newly introduced semantic-aware trace score across 1000 scenarios and more than 3000 expert traces.

💬 Research Conclusions:

– The study finds a significant gap between model and human performance due to poor spatial grounding and goal localization, establishing NaviTrace as a scalable benchmark for real-world robotic navigation.

👉 Paper link: https://huggingface.co/papers/2510.26909

17. Do Vision-Language Models Measure Up? Benchmarking Visual Measurement Reading with MeasureBench

🔑 Keywords: MeasureBench, Vision-Language Models, Indicator Localization, Reinforcement Learning, Fine-Grained Spatial Grounding

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper introduces MeasureBench to evaluate the ability of vision-language models to read measurements from images, both real-world and synthesized, aiming to identify challenges in this domain.

🛠️ Research Methods:

– They utilize a data synthesis pipeline to procedurally generate various types of gauges with controllable visual aspects and conduct evaluations on popular proprietary and open-weight vision-language models.

💬 Research Conclusions:

– Vision-language models struggle with indicator localization, leading to significant errors despite correct textual reasoning. Preliminary experiments with reinforcement learning yielded positive results on synthetic data, but not on real-world images, highlighting a limitation in fine-grained spatial grounding.

👉 Paper link: https://huggingface.co/papers/2510.26865

18. left|,circlearrowright,text{BUS},right|: A Large and Diverse Multimodal Benchmark for evaluating the ability of Vision-Language Models to understand

Rebus Puzzles

🔑 Keywords: Rebus Puzzles, Vision-Language Models, structured reasoning, in-context example selection

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance the performance of Vision-Language Models in solving Rebus Puzzles through structured reasoning and optimal example selection.

🛠️ Research Methods:

– Development of a benchmark with 1,333 diverse Rebus Puzzles covering 18 categories.

– Proposal of RebusDescProgICE, a model-agnostic framework incorporating unstructured descriptions and code-based structured reasoning.

💬 Research Conclusions:

– The proposed framework improves performance by 2.1-4.1% for closed-source and 20-30% for open-source Vision-Language Models compared to existing methods.

👉 Paper link: https://huggingface.co/papers/2511.01340

19. Actial: Activate Spatial Reasoning Ability of Multimodal Large Language Models

🔑 Keywords: Viewpoint Learning, Multimodal Large Language Models, 3D reasoning, Supervised Fine-Tuning, Reinforcement Learning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper aims to enhance the spatial reasoning capabilities of Multimodal Large Language Models (MLLMs) for robust 3D reasoning tasks.

🛠️ Research Methods:

– Introduces Viewpoint Learning with a two-stage fine-tuning strategy and a hybrid cold-start initialization method.

– Utilizes a dataset, Viewpoint-100K, comprising diverse object-centric image pairs and question-answer sets.

– Employs Supervised Fine-Tuning (SFT) for foundational knowledge injection followed by generalization through Reinforcement Learning with the Group Relative Policy Optimization (GRPO) algorithm.

💬 Research Conclusions:

– The methods substantially improve the spatial reasoning ability of MLLMs, benefiting both in-domain and out-of-domain tasks.

– Suggests the development of foundational spatial skills in MLLMs to support advancements in robotics, autonomous systems, and 3D scene understanding.

👉 Paper link: https://huggingface.co/papers/2511.01618

20. Trove: A Flexible Toolkit for Dense Retrieval

🔑 Keywords: Trove, retrieval toolkit, data management, multi-node execution, inference pipeline

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The objective of the research is to introduce Trove, a novel open-source retrieval toolkit designed to streamline data management and improve research experimentation with customizable and efficient on-the-fly processing.

🛠️ Research Methods:

– Trove utilizes efficient data management techniques to handle and process retrieval datasets dynamically with minimal code. It supports customizable components and offers a low-code pipeline for evaluation and hard negative mining.

💬 Research Conclusions:

– Trove significantly reduces memory consumption and demonstrates increased inference speed aligned with the number of processing nodes. It facilitates exploratory research by simplifying retrieval experiments and supporting extensive customization.

👉 Paper link: https://huggingface.co/papers/2511.01857

21. Data-Efficient RLVR via Off-Policy Influence Guidance

🔑 Keywords: Reinforcement Learning, Verifiable Rewards, Influence Functions, Off-Policy Estimation, Sparse Random Projection

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To enhance data selection in Reinforcement Learning with Verifiable Rewards (RLVR) and improve the efficiency of training large language models.

🛠️ Research Methods:

– Proposed a theoretically-grounded approach leveraging influence functions to estimate data contribution and introduced off-policy influence estimation and sparse random projection for efficient computation.

💬 Research Conclusions:

– Developed Curriculum RL with Off-Policy Influence guidance (CROPI), which significantly accelerates training, reducing data usage and demonstrating the potential of influence-based data selection in RLVR.

👉 Paper link: https://huggingface.co/papers/2510.26491

22. Towards Robust Mathematical Reasoning

🔑 Keywords: IMO-Bench, Mathematical Reasoning, Foundation Models, Gemini Deep Think

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– The objective of the study is to advance the mathematical reasoning capabilities of foundation models using a specially designed suite of benchmarks called IMO-Bench, focused on IMO-level problems.

🛠️ Research Methods:

– IMO-Bench, including IMO-AnswerBench and IMO-Proof Bench, along with detailed grading guidelines, are used to evaluate models’ performance on mathematical reasoning tasks with both short answer and proof-writing capabilities.

💬 Research Conclusions:

– The study achieved gold-level performance with Gemini Deep Think at IMO 2025, with the model outperforming previous models significantly on both IMO-AnswerBench and IMO-Proof Bench. The results show strong correlation with human evaluations, utilizing IMO-GradingBench for further advancements.

👉 Paper link: https://huggingface.co/papers/2511.01846

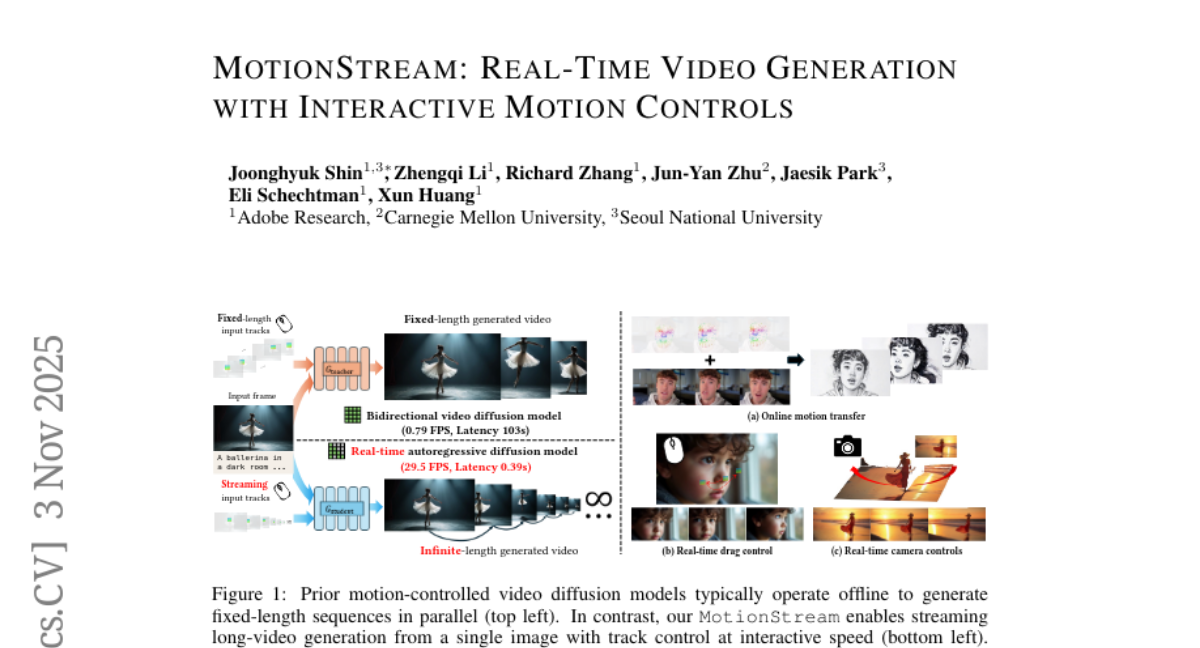

23. MotionStream: Real-Time Video Generation with Interactive Motion Controls

🔑 Keywords: MotionStream, text-to-video model, motion control, real-time streaming, sliding-window causal attention

💡 Category: Generative Models

🌟 Research Objective:

– The research seeks to enable real-time video generation with sub-second latency, reaching up to 29 frames per second (FPS) through MotionStream, incorporating motion control into text-to-video models.

🛠️ Research Methods:

– The approach involves distilling a bidirectional text-to-video model with motion control into a causal student using Self Forcing with Distribution Matching Distillation alongside sliding-window causal attention with attention sinks.

💬 Research Conclusions:

– MotionStream delivers state-of-the-art results in motion following and video quality with significantly reduced latency, uniquely supporting infinite-length streaming. It allows for an interactive experience where users can control video elements in real-time.

👉 Paper link: https://huggingface.co/papers/2511.01266

24. How Far Are Surgeons from Surgical World Models? A Pilot Study on Zero-shot Surgical Video Generation with Expert Assessment

🔑 Keywords: Video Generation, Surgical AI, Surgical Plausibility Pyramid, Zero-shot Prediction, Visual Perceptual Plausibility

💡 Category: AI in Healthcare

🌟 Research Objective:

– To address the gap between visual plausibility and causal understanding in surgical AI models by introducing the SurgVeo benchmark and the Surgical Plausibility Pyramid.

🛠️ Research Methods:

– The study presented SurgVeo, an expert-curated benchmark, and evaluated the Veo-3 model on a zero-shot prediction task using surgical clips assessed by surgeons through the four-tiered SPP framework.

💬 Research Conclusions:

– The analysis underscores a “plausibility gap” where the Veo-3 model excels in visual mimicry but fails in demonstrating causal understanding, particularly at higher tiers of the SPP, highlighting directions for future AI model improvements in healthcare.

👉 Paper link: https://huggingface.co/papers/2511.01775

25. UME-R1: Exploring Reasoning-Driven Generative Multimodal Embeddings

🔑 Keywords: generative multimodal embedding, enhancement, reasoning-driven generation, reinforcement learning, inference-time scalability

💡 Category: Generative Models

🌟 Research Objective:

– To pioneer the exploration of generative embeddings by unifying embedding tasks within a generative paradigm, enhancing performance through reasoning-driven generation and reinforcement learning.

🛠️ Research Methods:

– Introduces UME-R1, a framework with a two-stage training strategy: cold-start supervised fine-tuning to equip with reasoning capabilities, and reinforcement learning to optimize generative embedding quality.

💬 Research Conclusions:

– Generative embeddings achieve substantial performance gains over discriminative models.

– The combination of discriminative and generative embeddings surpasses the performance of either type alone.

– Reinforcement learning effectively enhances generative embeddings establishing a scalable optimization paradigm.

– Repeated sampling at inference boosts task coverage, highlighting scalability potential.

👉 Paper link: https://huggingface.co/papers/2511.00405

26. Unified Diffusion VLA: Vision-Language-Action Model via Joint Discrete Denoising Diffusion Process

🔑 Keywords: Unified Diffusion VLA, Joint Discrete Denoising Diffusion Process, vision-language-action, faster inference, hybrid attention mechanism

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The research aims to enhance vision-language-action tasks through a synchronous denoising process that integrates multiple modalities for joint understanding, generation, and action.

🛠️ Research Methods:

– The study introduces the Unified Diffusion VLA model and JD3P, employing a unified tokenized space and hybrid attention mechanism, along with a two-stage training pipeline to optimize performance and efficiency.

💬 Research Conclusions:

– The proposed approach achieves state-of-the-art results on several benchmarks with significantly faster inference compared to autoregressive methods, demonstrating effectiveness in both theoretical and real-world evaluations.

👉 Paper link: https://huggingface.co/papers/2511.01718

27. GUI-AIMA: Aligning Intrinsic Multimodal Attention with a Context Anchor for GUI Grounding

🔑 Keywords: GUI Grounding, MLLMs, Attention-based, Coordinate-free, Data Efficiency

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Develop an attention-based and coordinate-free framework, GUI-AIMA, to enhance GUI grounding with minimal training data.

🛠️ Research Methods:

– Utilize alignment of MLLM attention with patch-wise signals and employ multi-head aggregation on query-visual attention matrices for adaptive signal calculation.

💬 Research Conclusions:

– GUI-AIMA achieves state-of-the-art performance with exceptional data efficiency, demonstrating an average accuracy of 58.6% on ScreenSpot-Pro and 62.2% on OSWorld-G using only 85k screenshots.

👉 Paper link: https://huggingface.co/papers/2511.00810

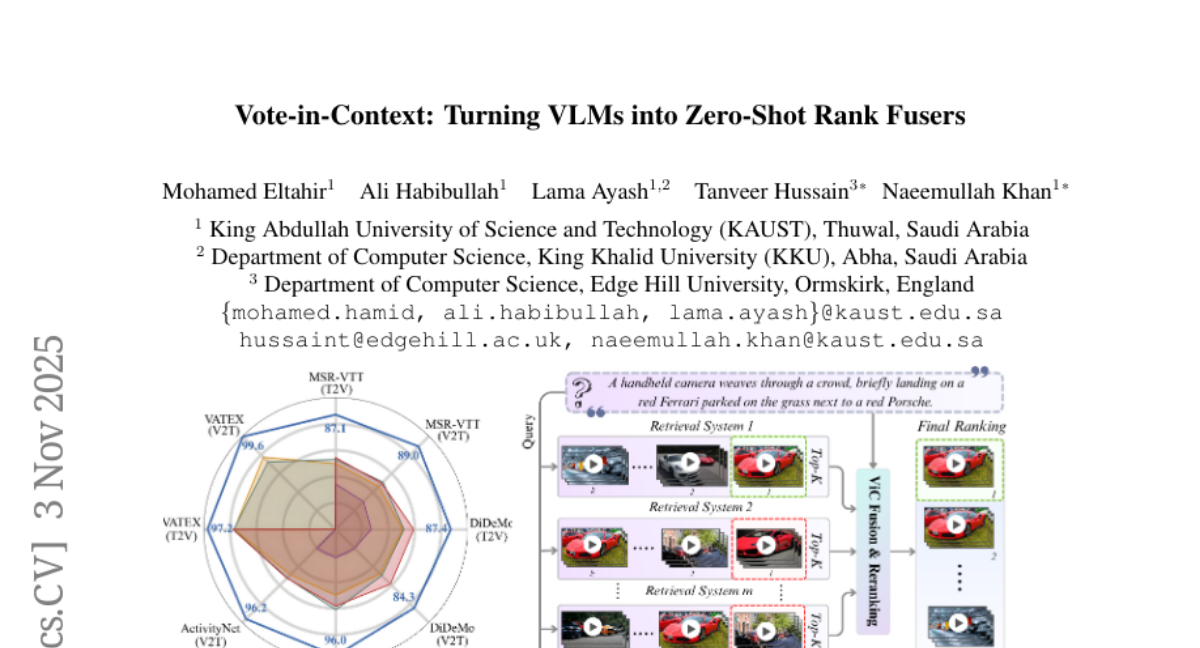

28. Vote-in-Context: Turning VLMs into Zero-Shot Rank Fusers

🔑 Keywords: Vote-in-Context, Vision-Language Models, zero-shot retrieval, fusion, list-wise reranking

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce Vote-in-Context, a training-free framework for zero-shot reranking and fusion in cross-modal video retrieval using Vision-Language Models.

🛠️ Research Methods:

– Serialize content evidence and retriever metadata within Vision-Language Model prompts.

– Utilize a compact serialization map called S-Grid, representing each video as an image grid, optionally paired with subtitles.

– Evaluate ViC as both a single-list reranker and an ensemble fuser across benchmarks such as ActivityNet and VATEX.

💬 Research Conclusions:

– ViC outperforms previous baselines in zero-shot retrieval scenarios, notably improving Recall@1 scores and establishing new state-of-the-art performance.

– The framework effectively handles complex visual, temporal, and textual signals, demonstrating its adaptability and robustness.

👉 Paper link: https://huggingface.co/papers/2511.01617

29. AthenaBench: A Dynamic Benchmark for Evaluating LLMs in Cyber Threat Intelligence

🔑 Keywords: Large Language Models, Cyber Threat Intelligence, AthenaBench, Risk Mitigation, Reasoning Capabilities

💡 Category: Natural Language Processing

🌟 Research Objective:

– The research aims to enhance the benchmarking of Large Language Models in the context of Cyber Threat Intelligence by developing AthenaBench, which improves dataset quality and evaluation metrics.

🛠️ Research Methods:

– The study involves extending CTIBench with AthenaBench, evaluating twelve LLMs, including high-end proprietary and open-source models, across various CTI tasks, focusing on reasoning-intensive tasks like threat actor attribution and risk mitigation.

💬 Research Conclusions:

– Proprietary LLMs showcase stronger capabilities yet fall short in reasoning-intensive tasks, while open-source models perform even worse, highlighting the need for models specifically designed for CTI automation.

👉 Paper link: https://huggingface.co/papers/2511.01144

30. Multi-Step Knowledge Interaction Analysis via Rank-2 Subspace Disentanglement

🔑 Keywords: Parametric Knowledge, Context Knowledge, Natural Language Explanations, Large Language Models, knowledge interactions

💡 Category: Natural Language Processing

🌟 Research Objective:

– This study proposes a novel rank-2 projection subspace to analyze the multi-step interactions between Parametric Knowledge and Context Knowledge in Large Language Models.

🛠️ Research Methods:

– The research utilizes a rank-2 subspace approach for the first time to disentangle and assess the contributions of PK and CK across longer NLE sequences. Experiments were conducted on four QA datasets using three instruction-tuned Large Language Models.

💬 Research Conclusions:

– The findings reveal that diverse knowledge interactions are inadequately represented in a rank-1 subspace but are effectively captured using the rank-2 projection. Hallucinated NLEs tend to align with PK, while context-faithful NLEs balance both PK and CK. Additionally, Chain-of-Thought prompting shifts NLEs towards CK by reducing reliance on PK.

👉 Paper link: https://huggingface.co/papers/2511.01706