AI Native Daily Paper Digest – 20251107

1. Thinking with Video: Video Generation as a Promising Multimodal Reasoning Paradigm

🔑 Keywords: Thinking with Video, Video Generation Models, Multimodal Reasoning, Video Thinking Benchmark, Sora-2

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective is to enhance multimodal reasoning by integrating video generation models, bridging visual and textual reasoning within a unified temporal framework.

🛠️ Research Methods:

– The methods include developing the Video Thinking Benchmark (VideoThinkBench), which encompasses vision-centric and text-centric tasks to test the capabilities of the Sora-2 video generation model.

💬 Research Conclusions:

– The findings suggest that Sora-2 is comparable or superior to current state-of-the-art Vision Language Models on certain vision-centric tasks and shows high accuracy on text-centric tasks, demonstrating its potential as a unified model for multimodal reasoning.

👉 Paper link: https://huggingface.co/papers/2511.04570

2. V-Thinker: Interactive Thinking with Images

🔑 Keywords: Multimodal Reasoning, Reinforcement Learning, Image-Interactive Thinking, Vision-Centric Tasks, VTBench

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The aim is to develop V-Thinker, a multimodal reasoning assistant that improves image-interactive thinking using reinforcement learning for enhanced performance in vision-centric tasks.

🛠️ Research Methods:

– Introduced V-Thinker with two key components: a Data Evolution Flywheel for dataset synthesis across diversity, quality, and difficulty, and a Visual Progressive Training Curriculum for aligning perception and integrating reasoning via a two-stage reinforcement learning framework.

💬 Research Conclusions:

– V-Thinker outperforms strong LMM-based baselines in general and interactive reasoning scenarios, showing significant advances in image-interactive reasoning applications.

👉 Paper link: https://huggingface.co/papers/2511.04460

3. Scaling Agent Learning via Experience Synthesis

🔑 Keywords: DreamGym, Reinforcement Learning, AI Native, Experience Model, Curriculum Learning

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Introduce DreamGym, a unified framework to synthesize diverse experiences for scalable online reinforcement learning (RL) training, enhancing agent performance and minimizing real-world interactions.

🛠️ Research Methods:

– Use a reasoning-based experience model to produce consistent state transitions and feedback signals, coupled with an experience replay buffer initialized with offline data.

– Implement adaptive task generation to better challenge and optimize the agent’s policy through curriculum learning.

💬 Research Conclusions:

– DreamGym significantly improves RL training across synthetic settings and real-world simulations, outperforming baselines and reducing reliance on costly real-world interactions.

👉 Paper link: https://huggingface.co/papers/2511.03773

4. Cambrian-S: Towards Spatial Supersensing in Video

🔑 Keywords: Supersensing, Semantic Perception, Spatial Cognition, Predictive Modeling, Predictive Sensing

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper aims to advance multimodal intelligence through the development of spatial supersensing, which goes beyond linguistic understanding to include semantic perception, event cognition, spatial cognition, and predictive modeling.

🛠️ Research Methods:

– The authors introduce VSI-SUPER benchmarks, consisting of VSR (Visual Spatial Recall) and VSC (Visual Spatial Counting), and explore data scaling with a self-supervised approach using the Cambrian-S model to test the limits of spatial cognition.

💬 Research Conclusions:

– The study finds that current benchmarks are insufficient for true world modeling and that simply scaling data does not improve spatial supersensing. Instead, a shift toward a predictive sensing approach is necessary, demonstrating superiority over existing models by using prediction error to drive memory and event segmentation.

👉 Paper link: https://huggingface.co/papers/2511.04670

5. GUI-360: A Comprehensive Dataset and Benchmark for Computer-Using Agents

🔑 Keywords: GUI-360°, Computer-Using Agents, GUI Grounding, Screen Parsing, Action Prediction

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To address gaps in real-world tasks, data collection, and evaluation for computer-using agents by introducing a comprehensive dataset and benchmark, GUI-360°.

🛠️ Research Methods:

– Developed an LLM-augmented pipeline for query sourcing, task instantiation, and LLM-driven quality filtering to automate the dataset’s creation process.

💬 Research Conclusions:

– Benchmarking state-of-the-art vision-language models exposed shortcomings in grounding and action prediction, highlighting the need for supervised fine-tuning and reinforcement learning to enhance performance.

👉 Paper link: https://huggingface.co/papers/2511.04307

6. Contamination Detection for VLMs using Multi-Modal Semantic Perturbation

🔑 Keywords: Vision-Language Models, Test-set leakage, Multi-modal semantic perturbation, Detection methods

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Address the issue of inflated performance in Vision-Language Models due to test-set leakage and propose a new detection method.

🛠️ Research Methods:

– Propose a novel detection method based on multi-modal semantic perturbation to identify contaminated Vision-Language Models.

💬 Research Conclusions:

– The new detection method demonstrates robustness and effectiveness across various contamination strategies and will be publicly released for further validation.

👉 Paper link: https://huggingface.co/papers/2511.03774

7. NVIDIA Nemotron Nano V2 VL

🔑 Keywords: Nemotron Nano V2 VL, Mamba-Transformer, token reduction techniques, document understanding, video comprehension

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce Nemotron Nano V2 VL designed for enhanced document and video understanding, as well as reasoning tasks.

🛠️ Research Methods:

– Utilization of a hybrid Mamba-Transformer LLM and innovative token reduction techniques to improve inference throughput.

💬 Research Conclusions:

– Significant improvements over previous models achieved through enhancements in architecture, datasets, and training recipes.

– Model checkpoints available in multiple formats, with datasets, recipes, and training code shared publicly.

👉 Paper link: https://huggingface.co/papers/2511.03929

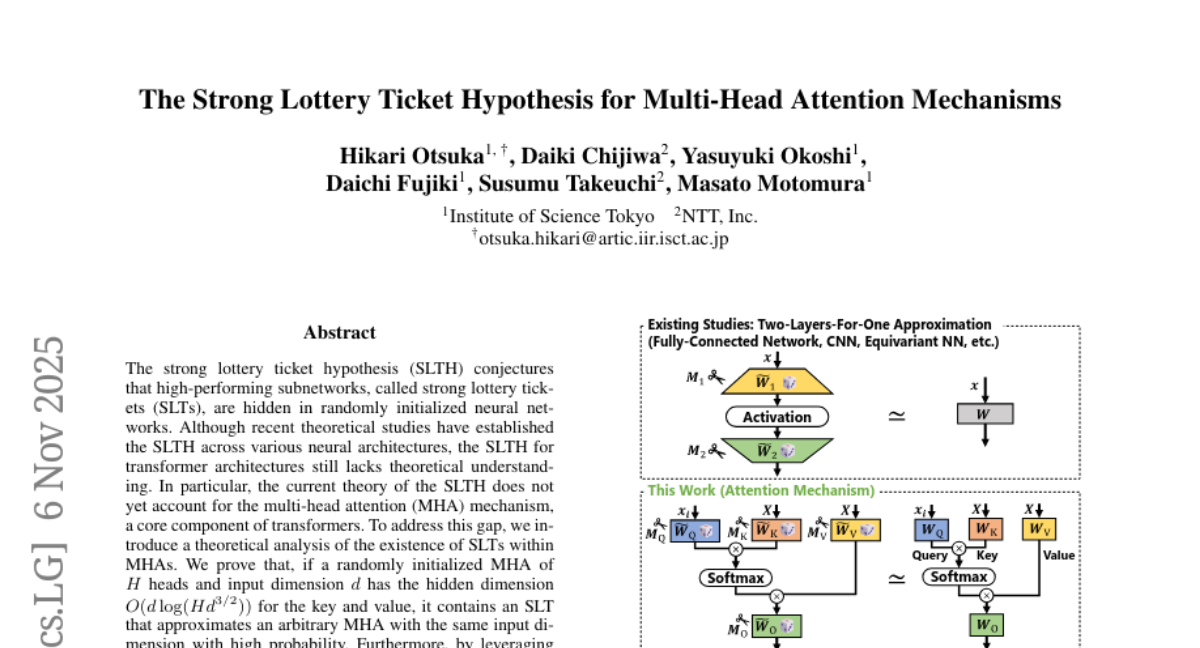

8. The Strong Lottery Ticket Hypothesis for Multi-Head Attention Mechanisms

🔑 Keywords: Strong lottery tickets, Multi-head attention, Transformers, Neural networks

💡 Category: Foundations of AI

🌟 Research Objective:

– To theoretically analyze the existence of strong lottery tickets within multi-head attention mechanisms and extend the strong lottery ticket hypothesis to transformers without normalization layers.

🛠️ Research Methods:

– Theoretical analysis and empirical validation of the approximation error between the strong lottery ticket within a source model and its approximate target counterpart.

💬 Research Conclusions:

– Proven existence of high-performing subnetworks (strong lottery tickets) in randomly initialized multi-head attention models.

– Extension of the strong lottery ticket hypothesis to transformers without normalization layers, demonstrating exponentially decreasing approximation error with increased hidden dimensions.

👉 Paper link: https://huggingface.co/papers/2511.04217

9. Benchmark Designers Should “Train on the Test Set” to Expose Exploitable Non-Visual Shortcuts

🔑 Keywords: Multimodal Large Language Models, bias score, Test-set Stress-Test, Iterative Bias Pruning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective is to create a framework for diagnosing and debiasing multimodal benchmarks to improve the robustness of Multimodal Large Language Models by mitigating non-visual biases.

🛠️ Research Methods:

– A diagnostic principle was adopted for benchmark design with two main components: Test-set Stress-Test (TsT) methodology and Iterative Bias Pruning (IBP) procedure.

– TsT involves fine-tuning using k-fold cross-validation on non-visual textual inputs, assigning a bias score to each sample.

– Light Random Forest-based diagnostic facilitates fast, interpretable auditing.

💬 Research Conclusions:

– The application of this framework uncovered significant non-visual biases in various benchmarks.

– The case study on VSI-Bench-Debiased demonstrated reduced non-visual solvability and increased vision-blind performance gap compared to the original.

👉 Paper link: https://huggingface.co/papers/2511.04655

10. How to Evaluate Speech Translation with Source-Aware Neural MT Metrics

🔑 Keywords: Source-aware metrics, ASR transcripts, back-translations, cross-lingual re-segmentation

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to improve speech-to-text evaluation by incorporating source information and addressing alignment issues through the use of source-aware metrics.

🛠️ Research Methods:

– Two strategies were explored: utilizing automatic speech recognition (ASR) transcripts and back-translations as textual proxies to address alignment mismatches in translation evaluation.

💬 Research Conclusions:

– Experiments on language pairs and ST systems showed that when word error rate is below 20%, ASR transcripts are more reliable than back-translations. A novel cross-lingual re-segmentation algorithm was introduced to facilitate the use of source-aware metrics, enhancing the accuracy of evaluation methodologies for speech translation.

👉 Paper link: https://huggingface.co/papers/2511.03295

11. Learning Vision-Driven Reactive Soccer Skills for Humanoid Robots

🔑 Keywords: Unified reinforcement learning, Adversarial Motion Priors, Encoder-decoder architecture, Motion imitation, Visually grounded dynamic control

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To develop a unified reinforcement learning-based controller for humanoid robots in soccer, integrating visual perception and motion control.

🛠️ Research Methods:

– Utilizes Adversarial Motion Priors and an encoder-decoder architecture with a virtual perception system to model real-world visual characteristics, enabling coherent and reactive behaviors.

💬 Research Conclusions:

– The proposed controller effectively executes coherent and robust soccer behaviors, demonstrating strong reactivity in dynamic environments like real RoboCup matches.

👉 Paper link: https://huggingface.co/papers/2511.03996

12. RDMA Point-to-Point Communication for LLM Systems

🔑 Keywords: TransferEngine, Large Language Models, disaggregated inference, Mixture-of-Experts, Network Interface Controllers

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To introduce TransferEngine, which provides a uniform interface for flexible point-to-point communication to enable large language model integration across different hardware.

🛠️ Research Methods:

– Utilized TransferEngine to manage common NICs through a uniform interface that supports disaggregated inference, reinforcement learning, and Mixture-of-Experts routing.

💬 Research Conclusions:

– Proven ability of TransferEngine to achieve peak throughput of 400 Gbps on NVIDIA ConnectX-7 and AWS Elastic Fabric Adapter through three production systems, enhancing integration and avoiding hardware lock-in.

👉 Paper link: https://huggingface.co/papers/2510.27656

13. SIMS-V: Simulated Instruction-Tuning for Spatial Video Understanding

🔑 Keywords: Spatial Reasoning, Multimodal Language Models, 3D Simulators, Video Training Data, Systematic Ablations

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to enhance spatial reasoning in multimodal language models using a novel data-generation framework, SIMS-V, which leverages 3D simulators to refine video training data.

🛠️ Research Methods:

– Implementation of the SIMS-V framework to systematically explore the impact of simulated data properties through ablations, focusing on question types, mixes, and scales.

💬 Research Conclusions:

– The research identifies three key question categories—metric measurement, perspective-dependent reasoning, and temporal tracking—as most effective for transferable spatial intelligence.

– The approach allows efficient training, as demonstrated by a 7B-parameter video LLM that surpasses a 72B baseline using only 25K simulated examples.

– Demonstrates significant advancements in embodied and real-world spatial tasks, while maintaining general video understanding capabilities.

👉 Paper link: https://huggingface.co/papers/2511.04668

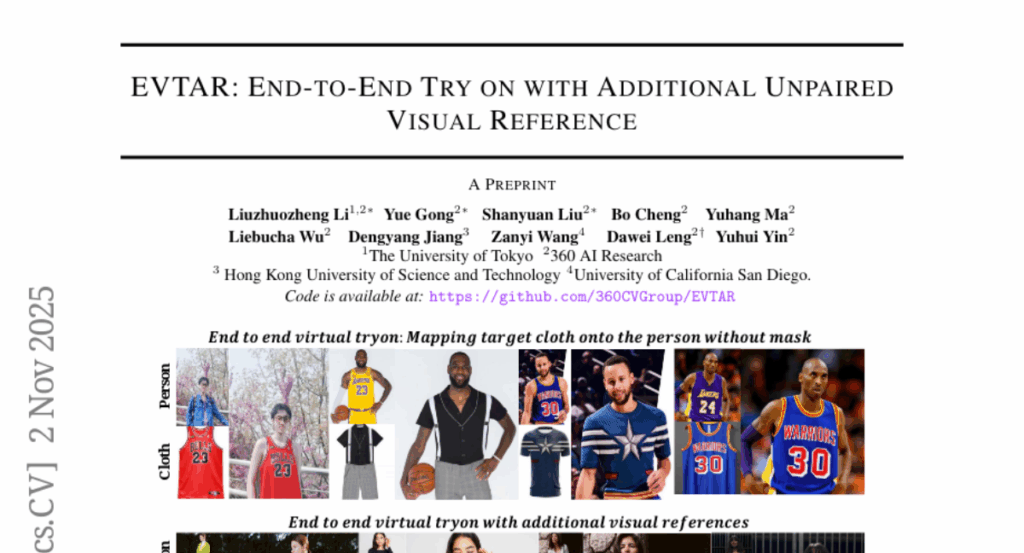

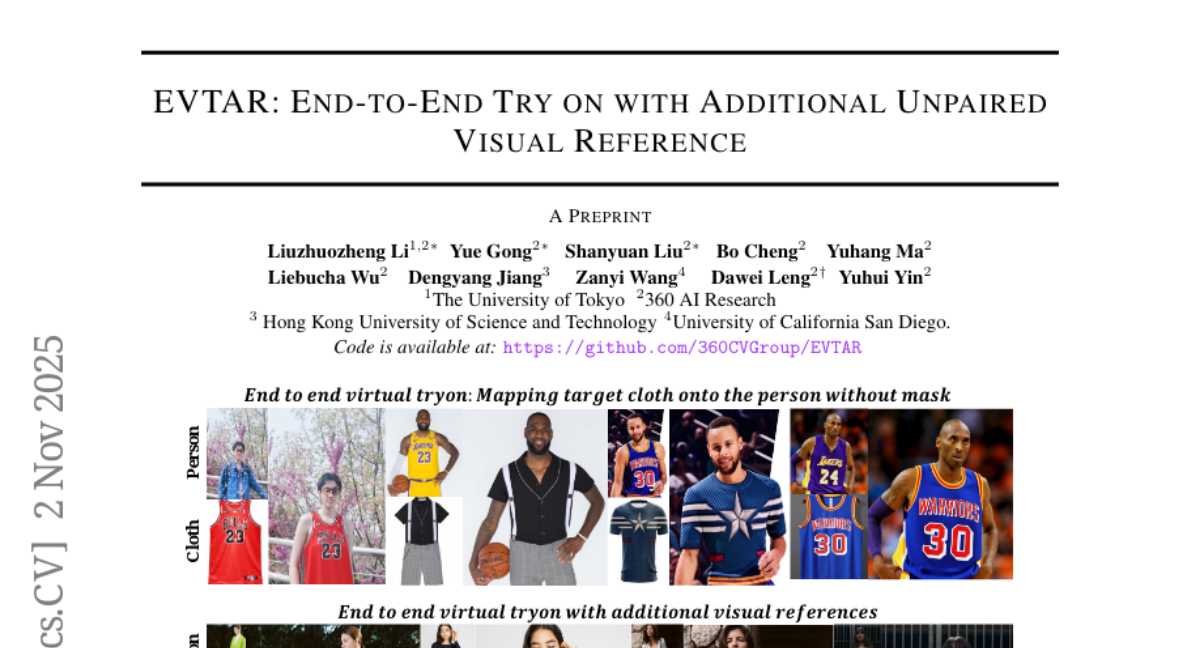

14. EVTAR: End-to-End Try on with Additional Unpaired Visual Reference

🔑 Keywords: EVTAR, End-to-End Virtual Try-on, garment texture, reference images, two-stage training strategy

💡 Category: Computer Vision

🌟 Research Objective:

– To propose EVTAR, an End-to-End Virtual Try-on model that enhances try-on accuracy by incorporating reference images and simplifying the inference process.

🛠️ Research Methods:

– Uses a two-stage training strategy with only a source image and target garment, without relying on masks, densepose, or segmentation maps.

– Leverages additional reference images and unpaired person images to preserve garment texture and fine-grained details.

💬 Research Conclusions:

– Evaluations on two benchmarks demonstrate the model’s effectiveness in improving realistic dressing effects and accuracy.

👉 Paper link: https://huggingface.co/papers/2511.00956

15. SAIL-RL: Guiding MLLMs in When and How to Think via Dual-Reward RL Tuning

🔑 Keywords: SAIL-RL, large language models, reinforcement learning, dual reward system, hallucinations

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The research introduces SAIL-RL, a post-training reinforcement learning framework aimed at enhancing the reasoning abilities of multimodal large language models (MLLMs).

🛠️ Research Methods:

– SAIL-RL uses a dual reward system comprising the Thinking Reward, which evaluates reasoning quality, and the Judging Reward, which determines the need for deep reasoning.

💬 Research Conclusions:

– SAIL-RL improves reasoning and multimodal understanding benchmarks, reduces hallucinations, and competes with commercial models like GPT-4o.

👉 Paper link: https://huggingface.co/papers/2511.02280

16.