AI Native Daily Paper Digest – 20251112

1. Grounding Computer Use Agents on Human Demonstrations

🔑 Keywords: GroundCUA, GroundNext, desktop environments, natural language instructions, human-verified annotations

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce GroundCUA, a large-scale desktop grounding dataset to enhance model training for mapping instructions to UI elements.

🛠️ Research Methods:

– Utilize expert human demonstrations across 87 applications to build GroundCUA, consisting of 56K screenshots and over 3.56M annotations. Develop GroundNext models using supervised fine-tuning and reinforcement learning to optimize performance.

💬 Research Conclusions:

– GroundNext models achieve state-of-the-art results with significantly less training data than previous models and demonstrate superiority on the OSWorld benchmark when evaluated in an agentic setting.

👉 Paper link: https://huggingface.co/papers/2511.07332

2. Tiny Model, Big Logic: Diversity-Driven Optimization Elicits Large-Model Reasoning Ability in VibeThinker-1.5B

🔑 Keywords: VibeThinker-1.5B, Spectrum-to-Signal Principle, small models, reasoning capabilities

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To demonstrate that smaller models like VibeThinker-1.5B can achieve superior reasoning capabilities at a lower cost compared to larger models.

🛠️ Research Methods:

– Developed the VibeThinker-1.5B model using the Spectrum-to-Signal Principle, which includes a Two-Stage Diversity-Exploring Distillation and MaxEnt-Guided Policy Optimization.

💬 Research Conclusions:

– VibeThinker-1.5B, with 1.5B parameters, shows superior reasoning and performance on mathematical benchmarks compared to much larger models, while significantly reducing costs in AI research and development.

👉 Paper link: https://huggingface.co/papers/2511.06221

3. Adaptive Multi-Agent Response Refinement in Conversational Systems

🔑 Keywords: Large Language Models, conversational systems, personalization, multi-agent framework, coherence

💡 Category: Natural Language Processing

🌟 Research Objective:

– To refine conversational responses by addressing factuality, personalization, and coherence using a multi-agent framework.

🛠️ Research Methods:

– Implementing a multi-agent framework where each agent addresses a specific aspect of conversation quality, using a dynamic communication strategy for adaptive collaboration.

💬 Research Conclusions:

– The multi-agent framework significantly outperforms single-agent methods on challenging datasets, particularly in tasks requiring knowledge or user persona.

👉 Paper link: https://huggingface.co/papers/2511.08319

4. Wasm: A Pipeline for Constructing Structured Arabic Interleaved Multimodal Corpora

🔑 Keywords: Arabic multimodal dataset, pre-training datasets, semantic alignment, Common Crawl

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Develop a pipeline, Wasm, that processes the Common Crawl dataset to create an Arabic multimodal dataset, preserving document structure for effective pre-training.

🛠️ Research Methods:

– Utilize a comprehensive comparative analysis of data processing pipelines, focusing on structural integrity while maintaining flexibility for both text-only and multimodal scenarios.

💬 Research Conclusions:

– The new pipeline provides markdown output and comparative insights on filtering strategies, and a publicly available dataset dump supports future Arabic multimodal research.

👉 Paper link: https://huggingface.co/papers/2511.07080

5. KLASS: KL-Guided Fast Inference in Masked Diffusion Models

🔑 Keywords: KL-Adaptive Stability Sampling, diffusion-based generation, token-level KL divergence, Greedy Decoding, AI-generated

💡 Category: Generative Models

🌟 Research Objective:

– To accelerate diffusion-based generation via KL-Adaptive Stability Sampling by improving speed and quality of predictions across various domains.

🛠️ Research Methods:

– Introduced KL-Adaptive Stability Sampling (KLASS), utilizing token-level KL divergence for faster, high-confidence predictions without additional model training.

💬 Research Conclusions:

– KLASS achieves up to 2.78 times speedup over traditional methods while enhancing performance on reasoning benchmarks, with applicability across text, image, and molecular generation.

👉 Paper link: https://huggingface.co/papers/2511.05664

6. VideoSSR: Video Self-Supervised Reinforcement Learning

🔑 Keywords: Video Self-Supervised Reinforcement Learning, Multimodal Large Language Models, Video Understanding, Self-Supervised Pretext Tasks, VideoSSR

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Leveraging intrinsic video information to enhance the performance of Multimodal Large Language Models (MLLMs) by developing a video self-supervised reinforcement learning framework called VideoSSR.

🛠️ Research Methods:

– The introduction of three self-supervised pretext tasks: Anomaly Grounding, Object Counting, and Temporal Jigsaw, and the construction of the Video Intrinsic Understanding Benchmark (VIUBench) to evaluate model capabilities.

💬 Research Conclusions:

– The VideoSSR framework shows over 5% performance improvement across 17 benchmarks in four major video domains, establishing it as a foundational framework for advanced video understanding in MLLMs.

👉 Paper link: https://huggingface.co/papers/2511.06281

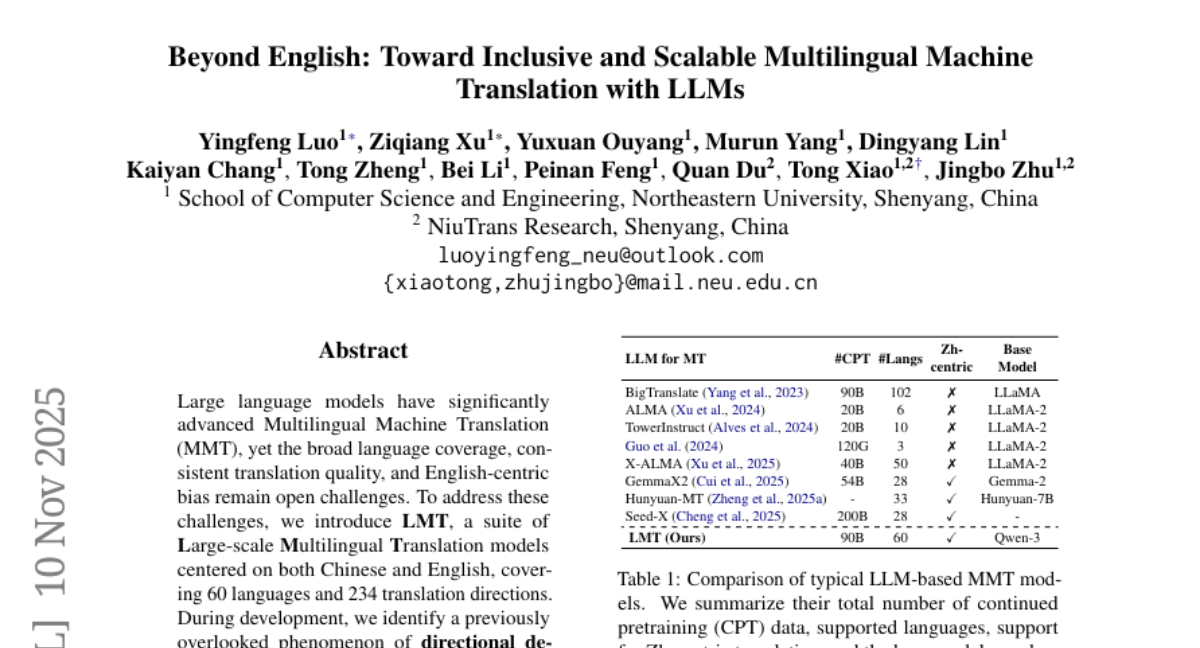

7. Beyond English: Toward Inclusive and Scalable Multilingual Machine Translation with LLMs

🔑 Keywords: Multilingual Machine Translation, LMT, Strategic Downsampling, Parallel Multilingual Prompting, Cross-lingual Transfer

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce LMT, a suite of large-scale multilingual translation models, designed to overcome challenges in multilingual machine translation, particularly focusing on broad language coverage and consistent translation quality.

🛠️ Research Methods:

– Utilize Strategic Downsampling to address directional degeneration, and implement Parallel Multilingual Prompting to enhance cross-lingual transfer through typologically related auxiliary languages.

💬 Research Conclusions:

– LMT achieves state-of-the-art performance across 60 languages and 234 translation directions, with the 4B model significantly outperforming larger models like Aya-101-13B and NLLB-54B, offering strong baselines for future multilingual machine translation research.

👉 Paper link: https://huggingface.co/papers/2511.07003

8. The Path Not Taken: RLVR Provably Learns Off the Principals

🔑 Keywords: Reinforcement Learning, Large Language Models, Verifiable Rewards, Parameter-level Characterization, Sparse Fine-Tuning

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To enhance the reasoning performance of large language models through Reinforcement Learning with Verifiable Rewards (RLVR).

🛠️ Research Methods:

– Implementing the Three-Gate Theory consisting of KL-constrained updates, steering into low-curvature subspaces, and hiding micro-updates in non-preferred regions.

💬 Research Conclusions:

– RLVR improves model reasoning reliably with minimal parameter modifications and provides a new understanding of training dynamics, differing from supervised fine-tuning methods.

– It suggests that directly adapting parameter-efficient methods from supervised fine-tuning can be problematic.

👉 Paper link: https://huggingface.co/papers/2511.08567

9. Beyond Fact Retrieval: Episodic Memory for RAG with Generative Semantic Workspaces

🔑 Keywords: Large Language Models, Generative Semantic Workspace, neuro-inspired generative memory, episodic memory, long-context reasoning

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To enhance Large Language Models with a neuro-inspired generative memory framework that improves performance on long-context reasoning and episodic memory tasks.

🛠️ Research Methods:

– Developed the Generative Semantic Workspace, comprising an Operator and a Reconciler, to create coherent, interpretable semantic structures and integrate them into a persistent workspace.

💬 Research Conclusions:

– The Generative Semantic Workspace significantly outperforms existing methods, with up to 20% improvement on Episodic Memory Benchmarks and reduces query-time context tokens by 51%.

– Provides a blueprint for LLMs with human-like episodic memory, enhancing their ability to reason over long horizons.

👉 Paper link: https://huggingface.co/papers/2511.07587

10. Intelligence per Watt: Measuring Intelligence Efficiency of Local AI

🔑 Keywords: Local inference, Small language models, Intelligence per watt, Local accelerators, Task accuracy

💡 Category: Natural Language Processing

🌟 Research Objective:

– To evaluate whether local inference using small language models can redistribute demand from centralized cloud infrastructure by measuring their efficiency and accuracy on power-constrained devices.

🛠️ Research Methods:

– Conducted a large-scale empirical study using over 20 state-of-the-art local language models and 8 accelerators across 1 million real-world queries, measuring key metrics such as accuracy, energy, latency, and power.

💬 Research Conclusions:

– Local language models accurately answered 88.7% of single-turn chat and reasoning queries, with accuracy varying by domain.

– The intelligence per watt (IPW) metric improved by 5.3x from 2023 to 2025, and local query coverage increased from 23.2% to 71.3%.

– Local accelerators achieved at least 1.4x lower IPW compared to cloud accelerators, indicating potential for optimization and effective demand redistribution.

👉 Paper link: https://huggingface.co/papers/2511.07885

11. DynaAct: Large Language Model Reasoning with Dynamic Action Spaces

🔑 Keywords: DynaAct, sequential decision-making, action space, large language models, efficient inference

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– Introduce a framework called DynaAct for constructing a compact action space to improve efficiency in sequential decision-making.

🛠️ Research Methods:

– Utilizes large language models to estimate a proxy for a complete action space.

– Employs a submodular function and a greedy algorithm for optimal action selection.

💬 Research Conclusions:

– The proposed framework significantly enhances performance across diverse benchmarks without adding substantial latency.

👉 Paper link: https://huggingface.co/papers/2511.08043

12. Optimizing Diversity and Quality through Base-Aligned Model Collaboration

🔑 Keywords: BACo, Alignment, Diversity, Quality, Token-Level Model Collaboration

💡 Category: Generative Models

🌟 Research Objective:

– Enhance the diversity and quality of outputs from large language models through token-level model collaboration between a base model and its aligned counterpart.

🛠️ Research Methods:

– Implement BACo, which uses dynamic routing strategies based on token predictions to optimize between the base LLM and aligned model, evaluated across three open-ended generation tasks and using 13 metrics.

💬 Research Conclusions:

– BACo outperforms state-of-the-art baselines in both diversity and quality with a 21.3% joint improvement, as supported by human evaluations, demonstrating the efficacy of collaboration between base and aligned models.

👉 Paper link: https://huggingface.co/papers/2511.05650

13. Walking the Tightrope of LLMs for Software Development: A Practitioners’ Perspective

🔑 Keywords: LLMs, AI-generated summary, socio-technical grounded theory, software development

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Investigate the impact of Large Language Models (LLMs) on software development and strategies to manage this impact from a developer’s perspective.

🛠️ Research Methods:

– Conducted 22 interviews with software practitioners over three rounds of data collection and analysis between October 2024 and September 2025. Used socio-technical grounded theory (STGT) for data analysis.

💬 Research Conclusions:

– Identified benefits such as improved workflow, enhanced developers’ mental models, and entrepreneurship encouragement, alongside disadvantages like negative effects on developers’ personalities and reputations. Provided best practices for adopting LLMs, useful for software team leaders and IT managers.

👉 Paper link: https://huggingface.co/papers/2511.06428

14. BiCA: Effective Biomedical Dense Retrieval with Citation-Aware Hard Negatives

🔑 Keywords: BiCA, hard negatives, citation links, domain adaptation, zero-shot dense retrieval

💡 Category: AI in Healthcare

🌟 Research Objective:

– Introduce BiCA, a method for biomedical dense retrieval using citation-aware hard negatives to enhance retrieval performance with minimal fine-tuning.

🛠️ Research Methods:

– Utilized citation links from 20,000 PubMed articles to perform hard-negative mining, fine-tuning the GTE_small and GTE_Base models.

💬 Research Conclusions:

– Achieved consistent improvements in zero-shot dense retrieval and demonstrated potential for data-efficient domain adaptation, outperforming baselines on long-tailed topics.

👉 Paper link: https://huggingface.co/papers/2511.08029

15.