AI Native Daily Paper Digest – 20251117

1. DoPE: Denoising Rotary Position Embedding

🔑 Keywords: Denoising Positional Encoding, Transformer models, retrieval accuracy, reasoning stability, positional embeddings

💡 Category: Natural Language Processing

🌟 Research Objective:

– The paper aims to enhance length generalization in Transformer models by introducing Denoising Positional Encoding (DoPE) to improve retrieval accuracy and reasoning stability.

🛠️ Research Methods:

– A training-free method based on truncated matrix entropy is proposed to detect noisy frequency bands in positional embeddings, which are then reparameterized using a parameter-free Gaussian distribution.

💬 Research Conclusions:

– Experimentation shows that DoPE can significantly improve retrieval accuracy and reasoning stability in extended contexts by mitigating attention sinks and restoring balanced attention patterns, offering an effective solution for length generalization.

👉 Paper link: https://huggingface.co/papers/2511.09146

2. WEAVE: Unleashing and Benchmarking the In-context Interleaved Comprehension and Generation

🔑 Keywords: WEAVE, unified multimodal models, multi-turn generation, image editing, visual-memory capabilities

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The primary goal of the paper is to tackle the limitations of existing datasets and benchmarks, which mainly focus on single-turn interactions, by introducing WEAVE, a suite aimed at enhancing multi-turn, context-dependent image generation and editing in unified multimodal models.

🛠️ Research Methods:

– The research introduces WEAVE-100k, a large-scale dataset with 100K interleaved samples and WEAVEBench, a human-annotated benchmark with 100 tasks, used to assess multi-turn generation, visual memory, and world-knowledge reasoning with a hybrid VLM judger evaluation framework.

💬 Research Conclusions:

– Training with WEAVE-100k enhances vision comprehension, image editing, and comprehension-generation collaboration, unlocking emergent visual-memory capabilities. However, evaluations on WEAVEBench reveal ongoing challenges in multi-turn, context-aware image generation and editing, suggesting areas for further research in the multi-modal community.

👉 Paper link: https://huggingface.co/papers/2511.11434

3. GGBench: A Geometric Generative Reasoning Benchmark for Unified Multimodal Models

🔑 Keywords: Unified Multimodal Models, generative reasoning, geometric construction, language comprehension, visual generation

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To introduce GGBench as a benchmark for evaluating geometric generative reasoning in multimodal models, addressing the gap in assessing integrated cognitive processes.

🛠️ Research Methods:

– Utilize geometric construction as a testbed to evaluate the fusion of language comprehension and precise visual generation.

💬 Research Conclusions:

– GGBench provides a comprehensive framework to diagnose and measure models’ abilities in understanding, reasoning, and constructing solutions, setting a rigorous standard for future intelligent systems.

👉 Paper link: https://huggingface.co/papers/2511.11134

4. UI2Code^N: A Visual Language Model for Test-Time Scalable Interactive UI-to-Code Generation

🔑 Keywords: AI-generated summary, visual language models, UI-to-code, reinforcement learning, multi-turn feedback

💡 Category: Generative Models

🌟 Research Objective:

– The objective is to enhance the performance of UI-to-code generation using a visual language model named UI2Code$^\text{N}$, which integrates multimodal coding capabilities.

🛠️ Research Methods:

– Utilized staged pretraining, fine-tuning, and reinforcement learning to train the visual language model.

– Implemented an interactive paradigm that employs systematic multi-turn feedback for UI generation and polishing.

💬 Research Conclusions:

– UI2Code$^\text{N}$ set a new benchmark in open-source models, rivalling leading closed-source models like Claude-4-Sonnet and GPT-5.

– The model successfully unifies and improves UI-to-code generation, editing, and polishing capabilities.

👉 Paper link: https://huggingface.co/papers/2511.08195

5. AIonopedia: an LLM agent orchestrating multimodal learning for ionic liquid discovery

🔑 Keywords: Ionic Liquids, AIonopedia, Large Language Models, multimodal domain foundation model, wet-lab validation

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance property prediction and molecular design for Ionic Liquids using AIonopedia, an AI agent powered by Large Language Models and a multimodal foundation model.

🛠️ Research Methods:

– Utilization of LLM-augmented multimodal domain foundation model for accurate property predictions, including hierarchical search architecture for molecular screening and design.

💬 Research Conclusions:

– AIonopedia demonstrated superior performance on newly curated datasets and literature-reported systems, with practical efficacy confirmed through real-world wet-lab validation, showcasing its ability to generalize effectively and accelerate real-world Ionic Liquids discovery.

👉 Paper link: https://huggingface.co/papers/2511.11257

6. LiteAttention: A Temporal Sparse Attention for Diffusion Transformers

🔑 Keywords: LiteAttention, diffusion attention, temporal coherence, video generation, FlashAttention

💡 Category: Generative Models

🌟 Research Objective:

– The primary goal is to accelerate video generation by leveraging the temporal coherence in diffusion attention without compromising quality.

🛠️ Research Methods:

– Introduction of LiteAttention, which utilizes temporal coherence to skip unnecessary computations in video diffusion models, combining the benefits of dynamic and static methods.

💬 Research Conclusions:

– LiteAttention significantly speeds up video generation processes in diffusion models while maintaining quality, demonstrating effective application over existing FlashAttention technology.

👉 Paper link: https://huggingface.co/papers/2511.11062

7. Virtual Width Networks

🔑 Keywords: Virtual Width Networks, Model Efficiency, Representational Width, Token-Efficient, Loss Reduction

💡 Category: Machine Learning

🌟 Research Objective:

– To introduce Virtual Width Networks (VWN) that improve model efficiency by expanding representational width without increasing computational cost.

🛠️ Research Methods:

– VWN decouples representational width from backbone width, enabling significant expansions in embedding space while maintaining constant backbone compute in large-scale experiments.

💬 Research Conclusions:

– An 8-times expansion in representational width accelerates optimization over 2 times for next-token prediction and 3 times for next-2-token prediction, demonstrating increasing effectiveness and token efficiency as scale increases.

– Identification of a log-linear scaling relation between virtual width and loss reduction suggests new possibilities for large-model efficiency improvements.

👉 Paper link: https://huggingface.co/papers/2511.11238

8. Simulating the Visual World with Artificial Intelligence: A Roadmap

🔑 Keywords: video foundation models, implicit world models, physical plausibility, agent-environment interactions, task planning

💡 Category: Generative Models

🌟 Research Objective:

– To provide a systematic overview of the evolution of video foundation models, integrating world simulation and rendering for creating physically plausible and interactive videos.

🛠️ Research Methods:

– Conceptualization of modern video foundation models as a combination of an implicit world model and a video renderer, tracing the progression through four generations.

💬 Research Conclusions:

– Video foundation models are advancing towards embodying intrinsic physical plausibility, enabling real-time multimodal interaction and comprehensive planning across multiple spatiotemporal scales, with applications in domains like robotics and autonomous driving.

👉 Paper link: https://huggingface.co/papers/2511.08585

9. SpatialThinker: Reinforcing 3D Reasoning in Multimodal LLMs via Spatial Rewards

🔑 Keywords: SpatialThinker, 3D-aware MLLM, Reinforcement Learning, spatial understanding, multi-step reasoning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To enhance 3D spatial understanding in multimodal large language models (MLLMs) by integrating structured spatial grounding and multi-step reasoning.

🛠️ Research Methods:

– Developed a data synthesis pipeline to create a high-quality spatial VQA dataset named STVQA-7K.

– Employed online reinforcement learning with multi-objective dense spatial reward to enforce spatial grounding.

💬 Research Conclusions:

– SpatialThinker-7B outperformed existing models and supervised fine-tuning in spatial understanding and real-world VQA benchmarks.

– The model showcased significant improvements in visual reasoning with limited data, nearly doubling performance compared to the sparse RL baseline.

👉 Paper link: https://huggingface.co/papers/2511.07403

10. HI-TransPA: Hearing Impairments Translation Personal Assistant

🔑 Keywords: HI-TransPA, Omni-Model, audio-visual personal assistant, hearing-impaired, high-frame-rate lip dynamics

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce the Omni-Model paradigm to assistive technology for hearing-impaired communication, presenting HI-TransPA as an instruction-driven audio-visual personal assistant.

🛠️ Research Methods:

– Develop a comprehensive preprocessing and curation pipeline to handle noisy data, detect facial landmarks, and stabilize the lip region.

– Implement curriculum learning and use a SigLIP encoder with a Unified 3D-Resampler to encode lip motion effectively.

💬 Research Conclusions:

– HI-TransPA achieves state-of-the-art performance in translating and dialogue, with improved literal accuracy and semantic fidelity, establishing a foundation for future research in assistive communication technology.

👉 Paper link: https://huggingface.co/papers/2511.09915

11. MarsRL: Advancing Multi-Agent Reasoning System via Reinforcement Learning with Agentic Pipeline Parallelism

🔑 Keywords: MarsRL, Multi-agent reasoning systems, Reinforcement Learning, Agent-specific reward mechanisms, Pipeline-inspired training

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To improve accuracy in complex reasoning tasks by jointly optimizing all agents in multi-agent reasoning systems using the MarsRL framework.

🛠️ Research Methods:

– MarsRL employs agentic pipeline parallelism and agent-specific reward mechanisms to mitigate reward noise and improve the efficiency of handling long trajectories.

💬 Research Conclusions:

– MarsRL significantly improves accuracy from 86.5% to 93.3% in AIME2025 and from 64.9% to 73.8% in BeyondAIME, showcasing its potential to advance multi-agent reasoning systems and expand their application across diverse reasoning tasks.

👉 Paper link: https://huggingface.co/papers/2511.11373

12. RF-DETR: Neural Architecture Search for Real-Time Detection Transformers

🔑 Keywords: RF-DETR, real-time detection, detection transformer, NAS, accuracy-latency tradeoff

💡 Category: Computer Vision

🌟 Research Objective:

– Introduce RF-DETR, a light-weight detection transformer, to optimize accuracy and latency for real-time detection across diverse datasets using weight-sharing neural architecture search (NAS).

🛠️ Research Methods:

– Implement fine-tuning of a pre-trained base network on target datasets to evaluate multiple network configurations with various accuracy-latency tradeoffs without the need for re-training.

💬 Research Conclusions:

– RF-DETR significantly enhances previous state-of-the-art real-time methods on datasets such as COCO and Roboflow100-VL, achieving notable accuracy improvements at comparable or faster speeds.

👉 Paper link: https://huggingface.co/papers/2511.09554

13. DiscoX: Benchmarking Discourse-Level Translation task in Expert Domains

🔑 Keywords: DiscoX, Metric-S, LLMs, professional-grade machine translation, reference-free system

💡 Category: Natural Language Processing

🌟 Research Objective:

– The objective is to develop a new benchmark, DiscoX, and an evaluation system, Metric-S, to enhance the assessment of discourse-level and expert-level Chinese-English translation.

🛠️ Research Methods:

– Introduced DiscoX consisting of 200 expert-curated texts from seven domains.

– Developed Metric-S, a reference-free system for evaluating accuracy, fluency, and appropriateness.

💬 Research Conclusions:

– Metric-S exhibits strong consistency with human judgments and surpasses existing evaluation metrics.

– Advanced LLMs still fall short compared to human translators, highlighting the challenges in achieving professional-grade machine translation.

👉 Paper link: https://huggingface.co/papers/2511.10984

14. Experience-Guided Adaptation of Inference-Time Reasoning Strategies

🔑 Keywords: Experience-Guided Reasoner, AI-generated summary, agentic AI systems, LLM, control logic

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Introduce the Experience-Guided Reasoner (EGuR) to dynamically generate and optimize computational strategies during inference based on accumulated experience, aiming to improve accuracy and efficiency.

🛠️ Research Methods:

– Develop an LLM-based meta-strategy to adapt all components of strategy generation (e.g., prompts, sampling parameters, tool configurations, and control logic).

– Utilize a Guide and a Consolidator to generate multiple candidate strategies and integrate execution feedback for optimized strategy generation.

💬 Research Conclusions:

– EGuR shows significant improvement in accuracy (up to 14%) across five challenging benchmarks and reduces computational costs by up to 111x compared to the best existing baselines.

👉 Paper link: https://huggingface.co/papers/2511.11519

15. EmoVid: A Multimodal Emotion Video Dataset for Emotion-Centric Video Understanding and Generation

🔑 Keywords: EmoVid, multimodal, emotion-annotated, video generation, affective video computing

💡 Category: Generative Models

🌟 Research Objective:

– Introduce EmoVid, a multimodal emotion-annotated video dataset designed to enhance emotional expression in generated videos.

🛠️ Research Methods:

– Systematic analysis of spatial and temporal patterns linking visual features to emotions.

– Development of an emotion-conditioned video generation technique by fine-tuning the Wan2.1 model.

💬 Research Conclusions:

– EmoVid significantly improves quantitative metrics and visual quality for text-to-video and image-to-video tasks, establishing a new benchmark in affective video computing.

👉 Paper link: https://huggingface.co/papers/2511.11002

16. Large Language Models for Scientific Idea Generation: A Creativity-Centered Survey

🔑 Keywords: Large language models, Scientific ideation, Creativity frameworks, Parameter-level adaptation, Multi-agent collaboration

💡 Category: Generative Models

🌟 Research Objective:

– The study investigates methods utilizing large language models for generating scientific ideas, focusing on creativity and scientific soundness.

🛠️ Research Methods:

– The survey categorizes approaches into five families: External knowledge augmentation, Prompt-based distributional steering, Inference-time scaling, Multi-agent collaboration, and Parameter-level adaptation, aligning these with creativity frameworks like Boden’s taxonomy and Rhodes’ 4Ps.

💬 Research Conclusions:

– The research clarifies the current field status and suggests directions for systematic and transformative large language model applications in scientific discovery.

👉 Paper link: https://huggingface.co/papers/2511.07448

17. Workload Schedulers — Genesis, Algorithms and Differences

🔑 Keywords: Workload Schedulers, Operating Systems, Cluster Systems, Big Data

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The paper aims to categorize modern workload schedulers into three classes and discusses their evolution and features.

🛠️ Research Methods:

– The approach involves describing and analyzing the evolution of operating systems process schedulers, cluster systems jobs schedulers, and big data schedulers.

💬 Research Conclusions:

– The paper highlights the differences and chronological development of the schedulers and identifies similarities in scheduling strategy design for both local and distributed systems.

👉 Paper link: https://huggingface.co/papers/2511.10258

18. Don’t Waste It: Guiding Generative Recommenders with Structured Human Priors via Multi-head Decoding

🔑 Keywords: Human Priors, Generative Recommenders, Prior-Conditioned Adapter Heads, Hierarchical Composition Strategy

💡 Category: Generative Models

🌟 Research Objective:

– Enhance accuracy and beyond-accuracy objectives (i.e., diversity, novelty, personalization) by integrating human priors into end-to-end generative recommenders.

🛠️ Research Methods:

– Employ lightweight, prior-conditioned adapter heads inspired by efficient LLM decoding strategies.

– Implement a backbone-agnostic framework and hierarchical composition strategy for modeling interactions.

💬 Research Conclusions:

– The framework significantly improves both accuracy and beyond-accuracy objectives.

– Human priors allow the model to effectively leverage longer context lengths and larger model sizes.

👉 Paper link: https://huggingface.co/papers/2511.10492

19. miniF2F-Lean Revisited: Reviewing Limitations and Charting a Path Forward

🔑 Keywords: miniF2F, autoformalization, theorem proving, formal reasoning, math Olympiad

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– Analyze the discrepancy between formal and informal statements in the miniF2F benchmark from an AI math Olympiad perspective.

🛠️ Research Methods:

– Evaluation of the AI pipeline involving natural language comprehension, formalization in Lean language, and proof generation using state-of-the-art models.

💬 Research Conclusions:

– Identified accuracy drop due to discrepancies in statement types, leading to 36% accuracy initially.

– Improved accuracy to 70% by correcting errors and discrepancies in miniF2F-v2, highlighting misalignments in autoformalization and theorem proving models.

👉 Paper link: https://huggingface.co/papers/2511.03108

20. From Proof to Program: Characterizing Tool-Induced Reasoning Hallucinations in Large Language Models

🔑 Keywords: Tool-augmented Language Models, Code Interpreter, Tool-Induced Myopia, reasoning, accuracy

💡 Category: Natural Language Processing

🌟 Research Objective:

– Investigate the reliability and reasoning capabilities of Tool-augmented Language Models (TaLMs) when utilizing external tools, specifically focusing on their effectiveness with a Code Interpreter.

🛠️ Research Methods:

– Analyzed the performance of TaLMs using PYMATH, a benchmark dataset of 1,679 competition-level math problems, and developed a multi-dimensional evaluation suite to measure reasoning degradation compared to non-tool models.

💬 Research Conclusions:

– TaLMs demonstrate a significant increase in final-answer accuracy but encounter a reduction in reasoning coherence, especially with frequent tool usage, showing a tendency toward global reasoning errors such as logic and creativity issues. Proposed a framework for better alignment in tool assistance, enhancing both accuracy and reasoning.

👉 Paper link: https://huggingface.co/papers/2511.10899

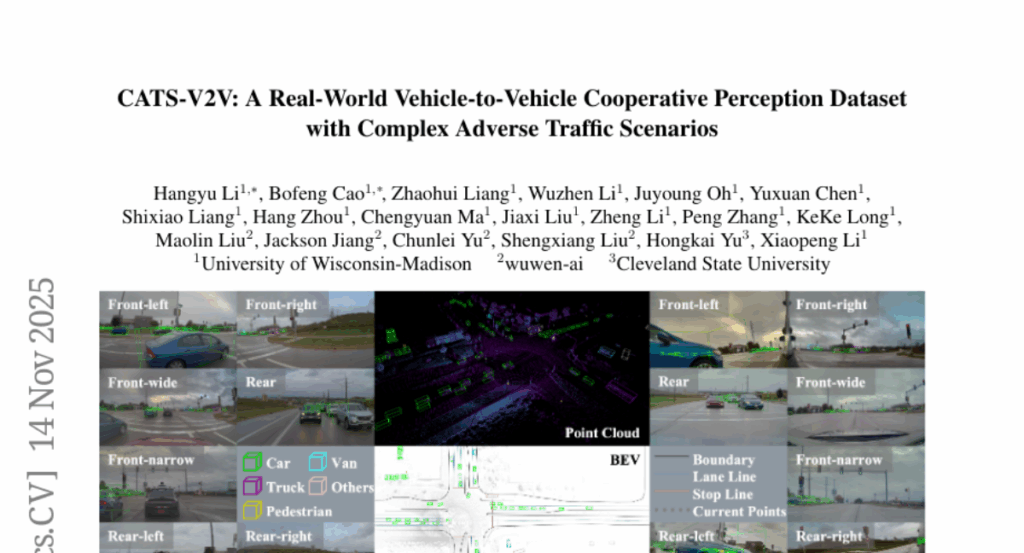

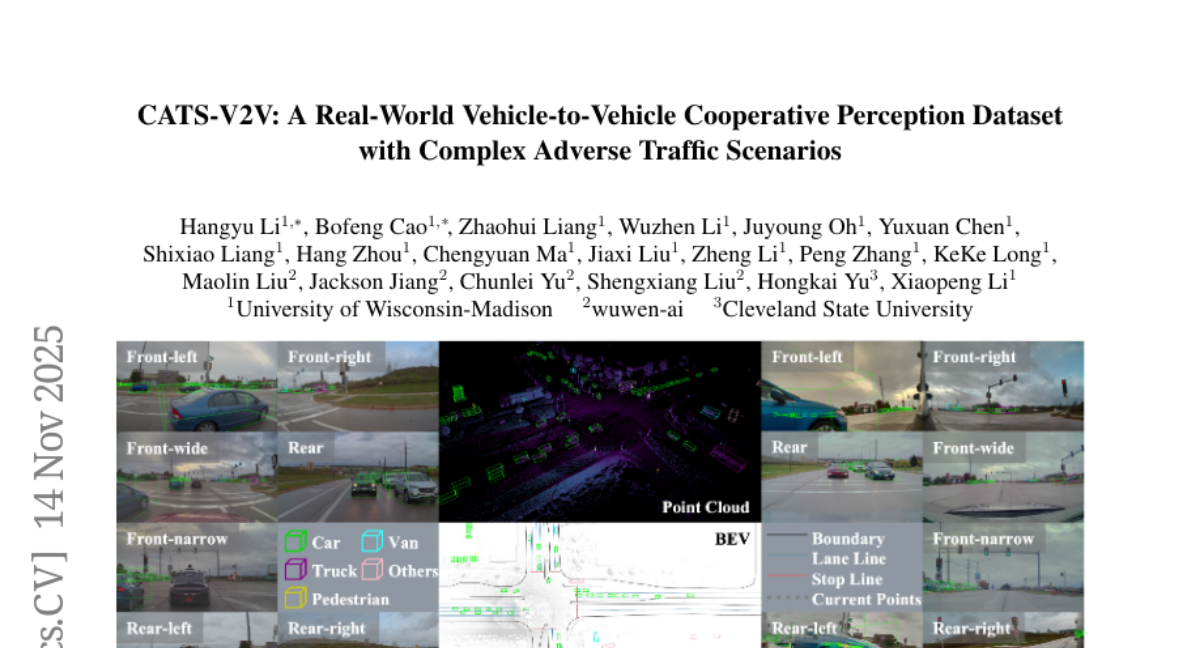

21. CATS-V2V: A Real-World Vehicle-to-Vehicle Cooperative Perception Dataset with Complex Adverse Traffic Scenarios

🔑 Keywords: V2V cooperative perception, Autonomous driving, Complex traffic scenarios, LiDAR point clouds, 4D BEV representation

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– The introduction of CATS-V2V, a real-world dataset specifically designed for V2V cooperative perception in complex adverse traffic scenarios.

🛠️ Research Methods:

– Data collection using two hardware time-synchronized vehicles covering diverse conditions, including 10 weather and lighting environments across 10 locations. The dataset consists of 60K frames of LiDAR point clouds and 1.26M multi-view camera images, along with GNSS and IMU records.

– Proposal of a target-based temporal alignment method to ensure precise alignment across sensor modalities.

💬 Research Conclusions:

– CATS-V2V offers the largest-scale and highest-quality dataset for its kind, intended to advance cooperative perception research in autonomous driving.

👉 Paper link: https://huggingface.co/papers/2511.11168

22. Building the Web for Agents: A Declarative Framework for Agent-Web Interaction

🔑 Keywords: VOIX, AI Native, Privacy-preserving, Agentic Web, Human-AI Collaboration

💡 Category: Human-AI Interaction

🌟 Research Objective:

– To introduce VOIX, a web-native framework that facilitates reliable and privacy-preserving interactions between AI agents and human-oriented user interfaces through declarative HTML elements.

🛠️ Research Methods:

– Conducted a three-day hackathon study with 16 developers to evaluate the framework’s practicality, learnability, and expressiveness.

💬 Research Conclusions:

– Participants were able to rapidly build diverse and functional agent-enabled web applications, demonstrating VOIX’s potential to enable seamless and secure human-AI collaboration on the web.

👉 Paper link: https://huggingface.co/papers/2511.11287

23.