AI Native Daily Paper Digest – 20251118

1. P1: Mastering Physics Olympiads with Reinforcement Learning

🔑 Keywords: Large Language Models, Physics Reasoning, Reinforcement Learning, International Physics Olympiad, Generalibility

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to advance physics research by developing large language models with outstanding physics reasoning capabilities.

🛠️ Research Methods:

– The P1 family models are trained entirely through reinforcement learning.

💬 Research Conclusions:

– The model P1-235B-A22B achieved Gold-medal performance in the International Physics Olympiad 2025 and overall No.1 ranking with the PhysicsMinions framework.

– P1 models also exhibit great performance on other reasoning tasks like math and coding, demonstrating their generalibility.

👉 Paper link: https://huggingface.co/papers/2511.13612

2. Uni-MoE-2.0-Omni: Scaling Language-Centric Omnimodal Large Model with Advanced MoE, Training and Data

🔑 Keywords: Uni-MoE 2.0, Omnimodal Large Model, Mixture-of-Experts, Multimodal Understanding, Reinforcement Learning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective was to advance language-centric multimodal understanding, reasoning, and generating by developing Uni-MoE 2.0, a fully open-source omnimodal large model.

🛠️ Research Methods:

– The methodology involved the creation of Uni-MoE-2.0-Omni using a dynamic-capacity Mixture-of-Experts design, a progressive training strategy with iterative reinforcement, and a multimodal data matching technique.

💬 Research Conclusions:

– Extensive evaluation across 85 benchmarks demonstrated that the model achieves state-of-the-art or highly competitive performance, notably surpassing Qwen2.5-Omni on over 50 of 76 benchmarks, with particular strengths in video understanding, omnimodality understanding, audiovisual reasoning, and long-form speech processing.

👉 Paper link: https://huggingface.co/papers/2511.12609

3. MiroThinker: Pushing the Performance Boundaries of Open-Source Research Agents via Model, Context, and Interactive Scaling

🔑 Keywords: MiroThinker, Tool-Augmented Reasoning, Interactive Scaling, Reinforcement Learning, Context Window

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To advance tool-augmented reasoning and information-seeking capabilities by introducing a new dimension of performance improvement through interaction scaling at the model level.

🛠️ Research Methods:

– Development and systematic training of MiroThinker v1.0, leveraging interactive scaling via reinforcement learning to enable multi-turn reasoning and complex research workflows.

💬 Research Conclusions:

– MiroThinker achieves superior performance on various benchmarks compared to previous open-source agents and is competitive with commercial counterparts. The findings highlight interaction scaling as a critical factor for enhancing research agents, alongside model capacity and context length.

👉 Paper link: https://huggingface.co/papers/2511.11793

4. Souper-Model: How Simple Arithmetic Unlocks State-of-the-Art LLM Performance

🔑 Keywords: Large Language Models, Model Souping, SoCE, Multilingual Capabilities, Benchmark Composition

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce a principled approach, Soup Of Category Experts (SoCE), for model souping to enhance Large Language Models’ performance.

🛠️ Research Methods:

– Utilization of benchmark composition to identify optimal model candidates.

– Application of non-uniform weighted averaging for combining expert models.

💬 Research Conclusions:

– The proposed SoCE method improves performance and robustness in various domains, achieving state-of-the-art results on the Berkeley Function Calling Leaderboard.

👉 Paper link: https://huggingface.co/papers/2511.13254

5. Part-X-MLLM: Part-aware 3D Multimodal Large Language Model

🔑 Keywords: native 3D multimodal large language model, structured executable grammar, geometry-aware modules, dual-encoder architecture

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To create a native 3D multimodal large language model, Part-X-MLLM, that unifies various 3D tasks through a single structured format.

🛠️ Research Methods:

– The model formulates tasks as programs in a structured, executable grammar and uses a dual-encoder architecture to disentangle structure from semantics, pre-training on a extensive part-centric dataset.

💬 Research Conclusions:

– Part-X-MLLM excels at producing high-quality structured plans, achieving state-of-the-art performance in grounded Q&A, compositional generation, and localized editing.

👉 Paper link: https://huggingface.co/papers/2511.13647

6. MMaDA-Parallel: Multimodal Large Diffusion Language Models for Thinking-Aware Editing and Generation

🔑 Keywords: MMaDA-Parallel, cross-modal alignment, thinking-aware image synthesis, Parallel Reinforcement Learning, semantic consistency

💡 Category: Generative Models

🌟 Research Objective:

– To address error propagation issues in sequential thinking-aware image synthesis approaches that degrade performance.

🛠️ Research Methods:

– Development of a parallel multimodal diffusion framework, MMaDA-Parallel, for continuous, bidirectional interaction between text and images.

– Introduction of ParaBench, a benchmark designed to evaluate text and image output modalities.

– Use of supervised finetuning and Parallel Reinforcement Learning for optimizing semantic alignment.

💬 Research Conclusions:

– MMaDA-Parallel significantly improves cross-modal alignment and semantic consistency, achieving a 6.9% improvement in Output Alignment on ParaBench compared to the state-of-the-art model, Bagel.

– Establishes a robust paradigm for thinking-aware image synthesis.

👉 Paper link: https://huggingface.co/papers/2511.09611

7. GroupRank: A Groupwise Reranking Paradigm Driven by Reinforcement Learning

🔑 Keywords: Large Language Models, RAG systems, Groupwise, GRPO, high quality retrieval and ranking data

💡 Category: Natural Language Processing

🌟 Research Objective:

– The authors aim to overcome limitations of current reranking paradigms in RAG systems by introducing a new method that combines the strengths of both Pointwise and Listwise approaches.

🛠️ Research Methods:

– A novel reranking paradigm called Groupwise is proposed, where queries and groups of candidate documents are jointly processed to determine relevance scores.

– Adoption of GRPO for model training, using a heterogeneous reward function integrating ranking metrics and distributional rewards.

– Implementation of an innovative pipeline for synthesizing high-quality retrieval and ranking data due to scarcity of labeled data.

💬 Research Conclusions:

– The proposed method effectively addresses scalability and flexibility issues, validated by experiments on the BRIGHT and R2MED benchmarks, showing improved performance.

👉 Paper link: https://huggingface.co/papers/2511.11653

8. TiViBench: Benchmarking Think-in-Video Reasoning for Video Generative Models

🔑 Keywords: Video Generative Models, Physical Plausibility, Logical Consistency, AI Native, Reasoning Capabilities

💡 Category: Generative Models

🌟 Research Objective:

– To evaluate and enhance the reasoning capabilities of image-to-video generation models through the introduction of TiViBench and VideoTPO.

🛠️ Research Methods:

– Introduction of TiViBench benchmark, assessing reasoning across structural, spatial, symbolic, and practical dimensions in video generation models.

– Implementation of VideoTPO, a test-time strategy inspired by preference optimization for improving reasoning performance.

💬 Research Conclusions:

– Video generative models show potential for reasoning similar to LLMs, with commercial models outperforming open-source models due to training scale limitations.

– VideoTPO significantly boosts reasoning performance without additional resources, offering a pathway for future advancements in video model reasoning.

👉 Paper link: https://huggingface.co/papers/2511.13704

9. PhysX-Anything: Simulation-Ready Physical 3D Assets from Single Image

🔑 Keywords: PhysX-Anything, Embodied AI, Simulation-ready, VLM-based model

💡 Category: Generative Models

🌟 Research Objective:

– Introduce PhysX-Anything, a 3D generative framework producing high-quality simulation-ready 3D assets with physical attributes from single images.

🛠️ Research Methods:

– Developed the first VLM-based physical 3D model with a new efficient 3D representation reducing tokens by 193x.

– Constructed a new dataset, PhysX-Mobility, enhancing diversity and expanding object categories over 2x from existing datasets.

💬 Research Conclusions:

– PhysX-Anything demonstrates strong generative performance and is useful in contact-rich robotic policy learning.

– The framework can empower a broad range of applications in embodied AI and physics-based simulations.

👉 Paper link: https://huggingface.co/papers/2511.13648

10. UFO^3: Weaving the Digital Agent Galaxy

🔑 Keywords: AI Native, Agent Interaction Protocol, TaskConstellation, adaptive computing fabric, ubiquitous computing

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To develop UFO^3, a system that unifies heterogeneous devices for seamless task collaboration and dynamic optimization.

🛠️ Research Methods:

– Utilizing a single orchestration fabric that models tasks as distributed DAGs and employs a Constellation Orchestrator for dynamic execution.

💬 Research Conclusions:

– UFO^3 achieved significant metrics in cross-device task orchestration, demonstrating efficient, accurate, and resilient task management while reducing latency by 31%.

👉 Paper link: https://huggingface.co/papers/2511.11332

11. Evolve the Method, Not the Prompts: Evolutionary Synthesis of Jailbreak Attacks on LLMs

🔑 Keywords: Automated red teaming, Large Language Models, EvoSynth, Attack Success Rate

💡 Category: AI Systems and Tools

🌟 Research Objective:

– Introducing EvoSynth, an autonomous framework for the evolutionary synthesis of jailbreak methods for Large Language Models.

🛠️ Research Methods:

– EvoSynth employs a multi-agent system to autonomously engineer, evolve, and execute novel, code-based attack algorithms with a self-correction loop.

💬 Research Conclusions:

– EvoSynth achieves an 85.5% Attack Success Rate against robust models and generates more diverse attack strategies than existing methods.

👉 Paper link: https://huggingface.co/papers/2511.12710

12. OlmoEarth: Stable Latent Image Modeling for Multimodal Earth Observation

🔑 Keywords: OlmoEarth, Multimodal, Spatio-Temporal, Self-Supervised Learning, Foundation Model

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Develop OlmoEarth, a spatio-temporal foundation model tailored for the Earth observation domain utilizing a novel self-supervised learning approach.

🛠️ Research Methods:

– Implement a state-of-the-art multimodal model with a unique masking strategy and loss function to outperform existing foundation models.

💬 Research Conclusions:

– OlmoEarth outperformed 12 foundation models, achieving best performance on multiple tasks, and serves as the backbone of a platform aiding non-profits and NGOs.

👉 Paper link: https://huggingface.co/papers/2511.13655

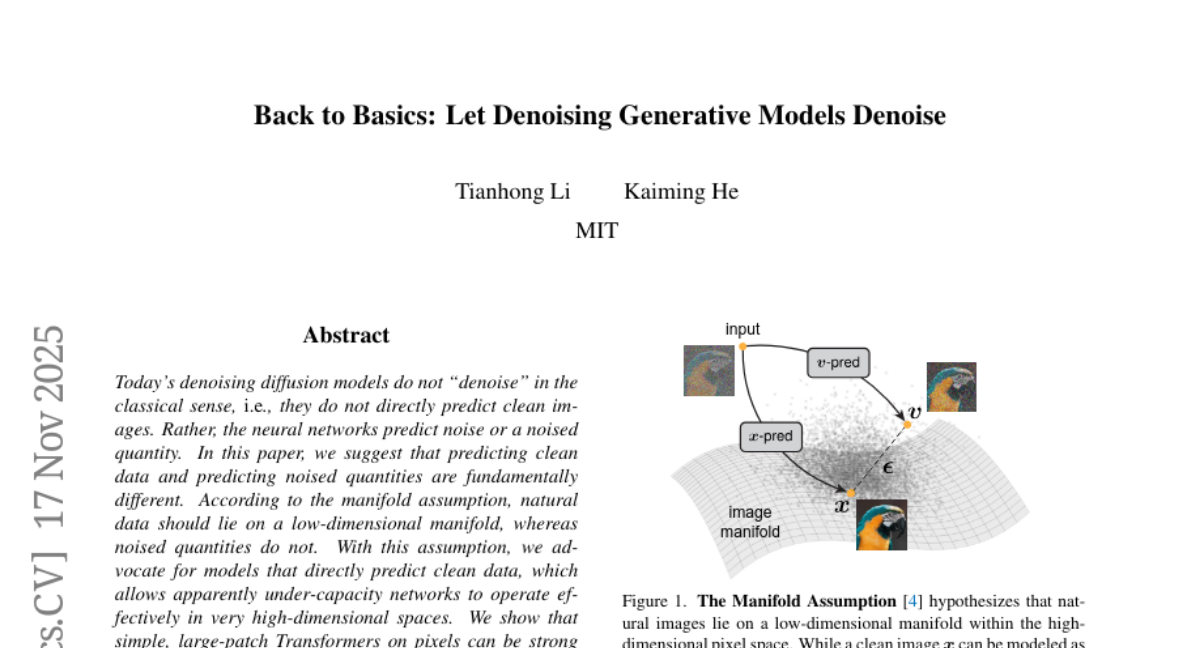

13. Back to Basics: Let Denoising Generative Models Denoise

🔑 Keywords: Denoising Diffusion Models, Clean Data Prediction, Transformers, High-Dimensional Spaces

💡 Category: Generative Models

🌟 Research Objective:

– To advocate for models that directly predict clean data instead of noised quantities, supporting effective operations in high-dimensional spaces.

🛠️ Research Methods:

– Implementation of simple, large-patch Transformers on pixels without the use of tokenizers, pre-training, or extra loss functions.

💬 Research Conclusions:

– “Just image Transformers” (JiT) demonstrates competitive results, showing efficacy when predicting high-dimensional data on ImageNet, highlighting a self-contained paradigm for Transformer-based diffusion models.

👉 Paper link: https://huggingface.co/papers/2511.13720

14. Genomic Next-Token Predictors are In-Context Learners

🔑 Keywords: In-context learning, Genomic sequences, Emergent behavior, Predictive modeling

💡 Category: Foundations of AI

🌟 Research Objective:

– To investigate whether in-context learning (ICL) can arise in genomic sequences through large-scale predictive training.

🛠️ Research Methods:

– Developed a controlled experimental framework to compare ICL in both linguistic and genomic models, specifically focusing on the Evo2 genomic model.

💬 Research Conclusions:

– The study presents the first evidence of organically emergent ICL in genomic sequences, suggesting that ICL is a consequence of large-scale predictive modeling over rich data, extending beyond linguistic domains.

👉 Paper link: https://huggingface.co/papers/2511.12797

15. Live-SWE-agent: Can Software Engineering Agents Self-Evolve on the Fly?

🔑 Keywords: Large Language Models, software agents, self-improving, Live-SWE-agent

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The paper proposes Live-SWE-agent, a revolutionary live software agent capable of real-time autonomous and continuous evolution, specifically designed for solving real-world software problems.

🛠️ Research Methods:

– The study begins with a basic agent scaffold that autonomously evolves during runtime using only simple tools like bash, specifically evaluated on SWE-bench Verified and SWE-Bench Pro benchmarks.

💬 Research Conclusions:

– Live-SWE-agent demonstrates a significant solve rate of 75.4% on SWE-bench Verified, surpassing other open-source agents, and achieves a 45.8% solve rate on SWE-Bench Pro, outperforming current state-of-the-art manually crafted agents.

👉 Paper link: https://huggingface.co/papers/2511.13646

16. Test-Time Spectrum-Aware Latent Steering for Zero-Shot Generalization in Vision-Language Models

🔑 Keywords: Vision-Language Models, Test-Time Adaptation, Spectrum-Aware Test-Time Steering, Latent Representation, AI Native

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The research aims to improve the adaptation of Vision-Language Models under domain shifts at test time using a lightweight framework, Spectrum-Aware Test-Time Steering (STS).

🛠️ Research Methods:

– STS extracts a spectral subspace from textual embeddings, allowing the model to steer latent representations without backpropagation or modifying frozen encoders, optimizing entropy minimization across augmented views.

💬 Research Conclusions:

– STS significantly outperforms or matches state-of-the-art test-time adaptation methods, achieving up to 8x faster inference and a 12x smaller memory footprint compared to traditional test-time prompt tuning.

👉 Paper link: https://huggingface.co/papers/2511.09809

17. Assessing LLMs for Serendipity Discovery in Knowledge Graphs: A Case for Drug Repurposing

🔑 Keywords: Large Language Models, Serendipity, Knowledge Graph Question Answering, Drug Repurposing, AI-generated Summary

💡 Category: AI in Healthcare

🌟 Research Objective:

– Evaluate the ability of Large Language Models to generate surprising and valuable answers in scientific knowledge graph question answering, specifically in the context of drug repurposing.

🛠️ Research Methods:

– Introduced the SerenQA framework to assess serendipity-aware KGQA, incorporating a serendipity metric and a structured evaluation pipeline with tasks such as knowledge retrieval, subgraph reasoning, and serendipity exploration.

💬 Research Conclusions:

– State-of-the-art Large Language Models perform well in knowledge retrieval but struggle with identifying surprising and valuable insights, highlighting a significant opportunity for future improvements.

👉 Paper link: https://huggingface.co/papers/2511.12472

18. WebCoach: Self-Evolving Web Agents with Cross-Session Memory Guidance

🔑 Keywords: Multimodal LLM-powered agents, WebCoach, Cross-session memory, Long-term planning, Continual learning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to enhance the long-term robustness and sample efficiency of multimodal LLM-powered agents in web navigation by introducing a model-agnostic self-evolving framework called WebCoach.

🛠️ Research Methods:

– WebCoach includes three key components: the WebCondenser for summarizing navigation logs, an External Memory Store for organizing episodic experiences, and a Coach for retrieving and injecting relevant advice to improve performance in web browsing tasks.

💬 Research Conclusions:

– Evaluations on the WebVoyager benchmark show that WebCoach significantly improves the performance of browser-use agents across different LLM backbones, increasing task success rates and enhancing the capabilities of smaller models to perform comparably to larger ones like GPT-4o.

👉 Paper link: https://huggingface.co/papers/2511.12997

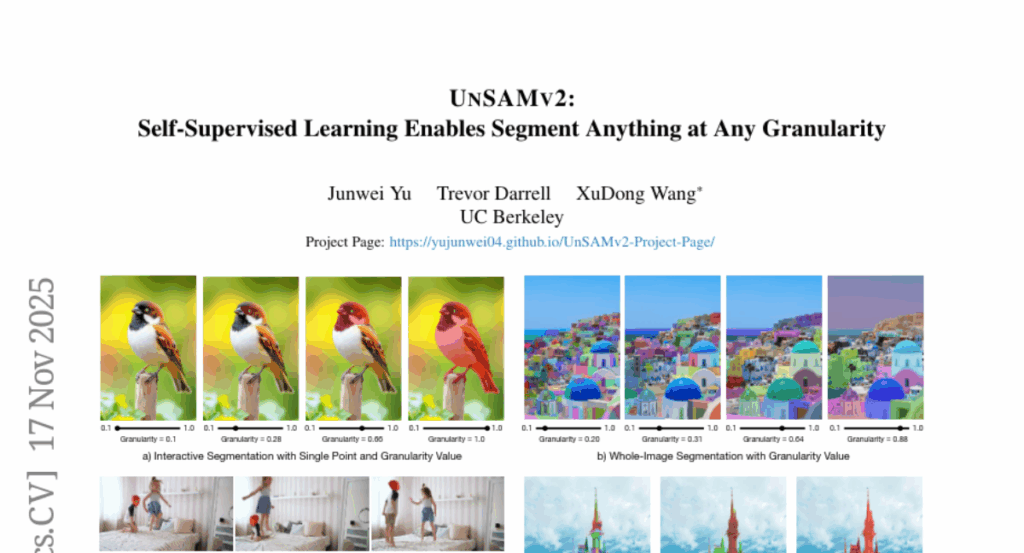

19. UnSAMv2: Self-Supervised Learning Enables Segment Anything at Any Granularity

🔑 Keywords: UnSAMv2, Vision Foundation Model, Granularity Control, Self-Supervised Learning

💡 Category: Computer Vision

🌟 Research Objective:

– To enhance the SAM family by introducing UnSAMv2, allowing segmentation at any granularity without human annotations.

🛠️ Research Methods:

– Utilizes a divide-and-conquer strategy with abundant mask-granularity pairs and a novel granularity control embedding, leveraging only 6K unlabeled images and minimal additional parameters.

💬 Research Conclusions:

– UnSAMv2 significantly improves segmentation metrics across various tasks, demonstrating that small amounts of unlabeled data with granularity-aware self-supervised learning can enhance vision foundation models.

👉 Paper link: https://huggingface.co/papers/2511.13714