AI Native Daily Paper Digest – 20251128

1. Video Generation Models Are Good Latent Reward Models

🔑 Keywords: PRFL, latent space, video generation, human preferences, gradient backpropagation

💡 Category: Generative Models

🌟 Research Objective:

– The objective is to optimize video generation preferences in latent space to enhance alignment with human preferences while reducing memory consumption and training time.

🛠️ Research Methods:

– Introduction of Process Reward Feedback Learning (PRFL), a framework for optimizing preferences entirely in latent space, allowing for efficient gradient backpropagation through the full denoising chain without VAE decoding.

💬 Research Conclusions:

– PRFL significantly improves alignment with human preferences and substantially reduces memory consumption and training time compared to traditional RGB ReFL approaches.

👉 Paper link: https://huggingface.co/papers/2511.21541

2. Canvas-to-Image: Compositional Image Generation with Multimodal Controls

🔑 Keywords: Canvas-to-Image, multimodal control, unified framework, composite canvas image, text-to-image generation

💡 Category: Generative Models

🌟 Research Objective:

– The introduction of a unified framework, Canvas-to-Image, to enhance high-fidelity multimodal image generation by encoding diverse control signals into a composite canvas image.

🛠️ Research Methods:

– Development of a Multi-Task Canvas Training strategy that optimizes diffusion models for integrated visual-spatial reasoning and multiple control modalities.

💬 Research Conclusions:

– Canvas-to-Image significantly surpasses existing methods in identity preservation and control adherence across several challenging benchmarks, including multi-person and pose-controlled compositions.

👉 Paper link: https://huggingface.co/papers/2511.21691

3. MIRA: Multimodal Iterative Reasoning Agent for Image Editing

🔑 Keywords: MIRA, multimodal reasoning agent, diffusion-based image editing, semantic consistency, perceptual quality

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to enhance diffusion-based image editing by introducing MIRA, a multimodal reasoning agent, to improve the semantic consistency and perceptual quality of edits based on complex instructions.

🛠️ Research Methods:

– The study introduces MIRA which utilizes an iterative perception-reasoning-action loop for editing, making use of visual feedback, and is trained with a multimodal tool-use dataset, MIRA-Editing, combined with a two-stage SFT + GRPO training pipeline.

💬 Research Conclusions:

– MIRA significantly improves image editing when paired with models like Flux.1-Kontext, Step1X-Edit, and Qwen-Image-Edit, achieving results comparable to or exceeding proprietary systems in terms of semantic consistency and perceptual quality.

👉 Paper link: https://huggingface.co/papers/2511.21087

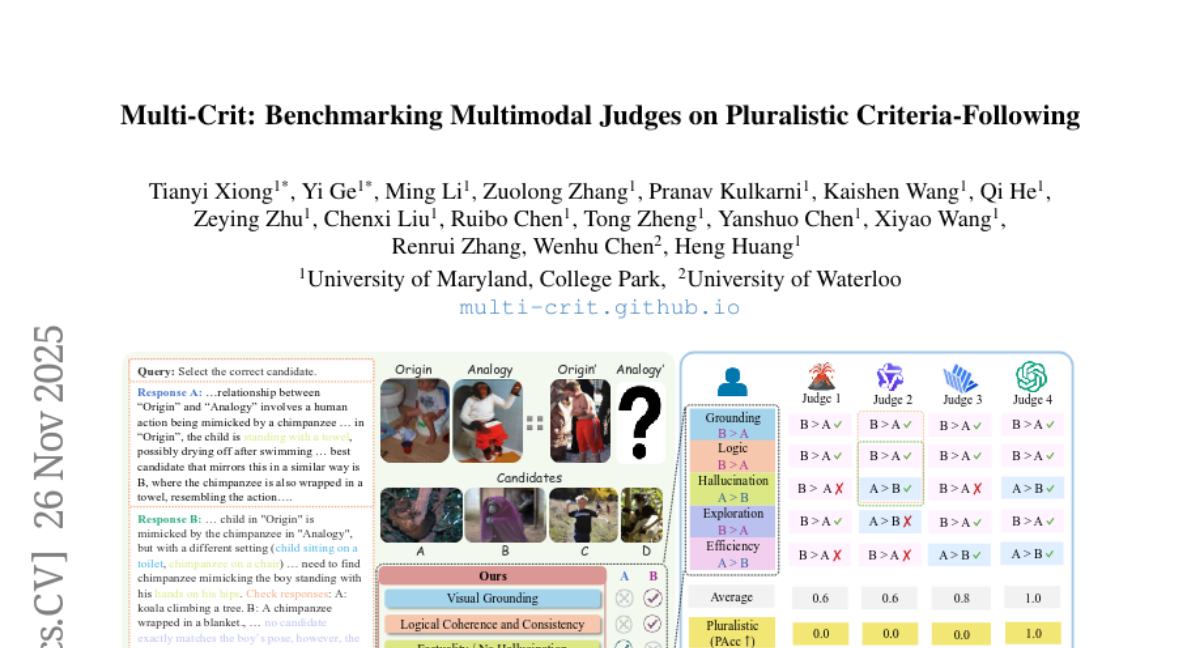

4. Multi-Crit: Benchmarking Multimodal Judges on Pluralistic Criteria-Following

🔑 Keywords: Large multimodal models, Multimodal evaluation systems, Pluralistic criteria, Criterion-level judgments, Proprietary and open-source models

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The study aims to evaluate multimodal models on their ability to follow diverse and fine-grained evaluation criteria, by developing a benchmark called Multi-Crit.

🛠️ Research Methods:

– Multi-Crit was created through a rigorous data curation pipeline for gathering response pairs annotated with multi-criterion human feedback. It introduces novel metrics for assessing pluralistic adherence, flexibility, and criterion-level preference conflicts.

💬 Research Conclusions:

– Proprietary models struggle with pluralistic criteria adherence, especially in open-ended evaluations, while open-source models further lag in flexibility. Critic fine-tuning improves visual grounding but not criterion-level judgment, thus highlighting limitations in current multimodal judges.

👉 Paper link: https://huggingface.co/papers/2511.21662

5. What does it mean to understand language?

🔑 Keywords: Language understanding, Mental models, Cognitive neuroscience, Brain regions

💡 Category: Natural Language Processing

🌟 Research Objective:

– To explore how deep language understanding requires interaction between the brain’s core language system and other brain regions for constructing perceptual and motor representations, mental models, and the use of world knowledge and memories.

🛠️ Research Methods:

– Review of existing evidence in cognitive neuroscience to support the hypothesis and suggestion of methods to directly test it.

💬 Research Conclusions:

– Establishes a new approach toward understanding language cognitively and neurally, by highlighting the limitations of the brain’s core language system and the need for broader integration with other cognitive processes.

👉 Paper link: https://huggingface.co/papers/2511.19757

6. ENACT: Evaluating Embodied Cognition with World Modeling of Egocentric Interaction

🔑 Keywords: Embodied cognition, Vision-language models, World modeling, Visual question answering, Anthropocentric biases

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The research introduces ENACT, a benchmark to evaluate embodied cognition in vision-language models through world modeling from egocentric interaction in a VQA format.

🛠️ Research Methods:

– Tasks are framed as a partially observable Markov decision process with scene graph changes and comprise forward and inverse world modeling tasks using QA pairs generated from robotics simulation.

💬 Research Conclusions:

– Models perform better on inverse tasks than forward ones, reveal a performance gap with humans that widens over interaction horizons, and exhibit anthropocentric biases.

👉 Paper link: https://huggingface.co/papers/2511.20937

7. Agentic Learner with Grow-and-Refine Multimodal Semantic Memory

🔑 Keywords: ViLoMem, Semantic Memory, Dual-Stream Memory, Multimodal Semantic Knowledge, Logical Reasoning

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective of the research is to enhance MLLMs by preserving multimodal semantic knowledge, reducing errors, and improving accuracy across benchmarks using a dual-stream memory framework.

🛠️ Research Methods:

– Introduction of ViLoMem, a framework that constructs compact, schema-based memory by separately encoding visual distraction patterns and logical reasoning errors.

– Follows a grow-and-refine principle for accumulating and updating multimodal semantic knowledge while avoiding catastrophic forgetting.

💬 Research Conclusions:

– ViLoMem consistently improves pass@1 accuracy and reduces repeated visual and logical errors across six multimodal benchmarks.

– The necessity of dual-stream memory with explicit distraction-hallucination separation is confirmed, demonstrating its value for lifelong and cross-domain agentic learning.

👉 Paper link: https://huggingface.co/papers/2511.21678

8.