AI Native Daily Paper Digest – 20251212

1. T-pro 2.0: An Efficient Russian Hybrid-Reasoning Model and Playground

🔑 Keywords: T-pro 2.0, hybrid reasoning, efficient inference, Cyrillic-dense tokenizer, EAGLE speculative-decoding

💡 Category: Natural Language Processing

🌟 Research Objective:

– The goal is to introduce T-pro 2.0, a Russian LLM optimized for hybrid reasoning and efficient inference.

🛠️ Research Methods:

– The model was developed using a Cyrillic-dense tokenizer and an EAGLE speculative-decoding pipeline; resources such as model weights and instruction corpora were released on Hugging Face.

💬 Research Conclusions:

– T-pro 2.0 provides an accessible system for extending and evaluating Russian language models, with demonstrated speed improvements in both reasoning and non-reasoning modes.

👉 Paper link: https://huggingface.co/papers/2512.10430

2. Long-horizon Reasoning Agent for Olympiad-Level Mathematical Problem Solving

🔑 Keywords: Reinforcement Learning, Verification, Iterative Active Learning, Rejection Fine-Tuning, Large Language Models

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To enhance the verification of long reasoning chains in large language models using the proposed Outcome-based Process Verifier (OPV).

🛠️ Research Methods:

– Utilization of an iterative active learning framework with expert annotations and Rejection Fine-Tuning to improve OPV’s verification capability efficiently.

💬 Research Conclusions:

– OPV achieves state-of-the-art results, improving accuracy and effectively detecting false positives, with demonstrated superior performance over larger models.

👉 Paper link: https://huggingface.co/papers/2512.10739

3. Are We Ready for RL in Text-to-3D Generation? A Progressive Investigation

🔑 Keywords: Reinforcement Learning, Text-to-3D Generation, Reward Designs, RL Algorithms, Hierarchical 3D Generation

💡 Category: Reinforcement Learning

🌟 Research Objective:

– Investigate the application of reinforcement learning (RL) for text-to-3D generation, addressing challenges related to spatial complexity and reward sensitivity.

🛠️ Research Methods:

– The study evaluates multiple aspects such as reward designs, RL algorithms (including GRPO variants), and benchmarks (introducing MME-3DR) to enhance the 3D generation process. Proposed Hi-GRPO for hierarchical optimization.

💬 Research Conclusions:

– Developed AR3D-R1, the first RL-enhanced text-to-3D model, demonstrating expert performance in refining shapes and textures. The research provides insights into RL-driven reasoning for 3D generation, with release of the source code for broader application.

👉 Paper link: https://huggingface.co/papers/2512.10949

4. OPV: Outcome-based Process Verifier for Efficient Long Chain-of-Thought Verification

🔑 Keywords: Outcome-based Process Verifier, iterative active learning, Rejection Fine-Tuning, state-of-the-art performance, Reinforcement Learning with Verifiable Rewards

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To enhance the verification of complex reasoning chains in large language models by developing an Outcome-based Process Verifier (OPV).

🛠️ Research Methods:

– Employing an iterative active learning framework combined with expert annotations and Rejection Fine-Tuning to improve OPV performance while reducing annotation costs.

💬 Research Conclusions:

– OPV significantly outperforms existing models, demonstrating an F1 score of 83.1 on OPV-Bench, and effectively detects false positives. It collaborates well with policy models, increasing accuracy as compute budget scales.

👉 Paper link: https://huggingface.co/papers/2512.10756

5. Achieving Olympia-Level Geometry Large Language Model Agent via Complexity Boosting Reinforcement Learning

🔑 Keywords: LLM agents, AI for geometry, InternGeometry, Complexity-Boosting Reinforcement Learning, symbolic engine

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– Develop a medalist-level LLM agent for geometry that surpasses human performance on International Mathematical Olympiad (IMO) geometry problems using heuristic-driven propositions and verification.

🛠️ Research Methods:

– Implement iterative proposition verification with a symbolic engine and dynamic memory mechanism.

– Introduce Complexity-Boosting Reinforcement Learning to enhance problem complexity across training stages.

💬 Research Conclusions:

– InternGeometry, based on InternThinker-32B, solves 44 out of 50 IMO geometry problems, outperforming AlphaGeometry 2 with minimal training data.

– Demonstrates the potential of LLM agents on expert-level geometry tasks, proposing novel auxiliary constructions unavailable in human solutions.

👉 Paper link: https://huggingface.co/papers/2512.10534

6. MoCapAnything: Unified 3D Motion Capture for Arbitrary Skeletons from Monocular Videos

🔑 Keywords: MoCapAnything, Category-Agnostic Motion Capture (CAMoCap), 3D joint trajectories, cross-species retargeting, skeletal animations

💡 Category: Computer Vision

🌟 Research Objective:

– To develop MoCapAnything, a reference-guided framework for reconstructing rotation-based animations from monocular videos for arbitrary rigged 3D assets, facilitating Category-Agnostic Motion Capture.

🛠️ Research Methods:

– MoCapAnything utilizes a factorized framework with three learnable modules and a lightweight inverse kinematics stage, including a Reference Prompt Encoder, a Video Feature Extractor, and a Unified Motion Decoder.

💬 Research Conclusions:

– MoCapAnything showcases high-quality skeletal animations and meaningful cross-species retargeting, enabling scalable, prompt-driven 3D motion capture across diverse assets.

👉 Paper link: https://huggingface.co/papers/2512.10881

7. BEAVER: An Efficient Deterministic LLM Verifier

🔑 Keywords: BEAVER, Large Language Models, Sound Probability Bounds, Constraint Verification, AI-generated summary

💡 Category: Natural Language Processing

🌟 Research Objective:

– To provide a deterministic and sound framework for verifying constraints in large language models using BEAVER.

🛠️ Research Methods:

– BEAVER systematically explores the generation space with novel data structures, maintaining provably sound bounds.

💬 Research Conclusions:

– BEAVER achieves 6 to 8 times tighter probability bounds and identifies 3 to 4 times more high-risk instances compared to baseline methods, facilitating precise risk assessment.

👉 Paper link: https://huggingface.co/papers/2512.05439

8. From Macro to Micro: Benchmarking Microscopic Spatial Intelligence on Molecules via Vision-Language Models

🔑 Keywords: Vision-Language Models, Microscopic Spatial Intelligence, AI-Generated Summary, scientific AGI

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The paper introduces Microscopic Spatial Intelligence (MiSI) as a new concept to evaluate Vision-Language Models’ abilities to understand spatial relationships of microscopic entities.

🛠️ Research Methods:

– A systematic benchmark framework, MiSI-Bench, was created featuring over 163,000 question-answer pairs and 587,000 images from approximately 4,000 molecular structures to assess the domain.

💬 Research Conclusions:

– Current state-of-the-art VLMs perform below human level in most tasks, but a fine-tuned 7B model shows promise, outperforming humans in spatial transformation tasks, despite struggling with scientifically-grounded tasks.

👉 Paper link: https://huggingface.co/papers/2512.10867

9. VQRAE: Representation Quantization Autoencoders for Multimodal Understanding, Generation and Reconstruction

🔑 Keywords: VQRAE, Vector Quantization, Unified Tokenizer, Multimodal Understanding, Discrete Tokens

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– To develop VQRAE, a Vector Quantization Representation AutoEncoder, unifying multimodal understanding, generation, and reconstruction.

🛠️ Research Methods:

– Utilization of a unified tokenizer with continuous semantic features and discrete tokens, employing a symmetric ViT decoder and a two-stage training strategy involving a high-dimensional semantic VQ codebook.

💬 Research Conclusions:

– VQRAE demonstrates competitive performance in visual understanding, generation, and reconstruction benchmarks, achieving 100% utilization ratio in the semantic VQ codebook and promising scaling property in the autoregressive paradigm.

👉 Paper link: https://huggingface.co/papers/2511.23386

10. Thinking with Images via Self-Calling Agent

🔑 Keywords: Self-Calling Chain-of-Thought, visual reasoning, language-only CoT, group-relative policy optimization, parameter-sharing subagents

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– Introduce Self-Calling Chain-of-Thought (sCoT), a novel visual reasoning paradigm, to enhance performance and efficiency in visual reasoning through language-only CoT with self-calling subagents.

🛠️ Research Methods:

– Employ sCoT by decomposing complex tasks into atomic subtasks with self-calling subagents in an isolated context, utilizing group-relative policy optimization to reinforce reasoning behaviors.

💬 Research Conclusions:

– sCoT improves the overall reasoning performance by up to 1.9% and reduces GPU hours by approximately 75% compared to existing strong baseline approaches.

👉 Paper link: https://huggingface.co/papers/2512.08511

11. Evaluating Gemini Robotics Policies in a Veo World Simulator

🔑 Keywords: Generative Evaluation System, Frontier Video Model, Out-of-Distribution Generalization, Safety Checks, AI Native

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To demonstrate the comprehensive application of frontier video models (Veo) in evaluating robotics policies across nominal performance, out-of-distribution generalization, and safety concerns.

🛠️ Research Methods:

– Development of a generative evaluation system built on a frontier video foundation model to enable realistic scene simulations and evaluate robot policy conditions, supporting multi-view consistency and generative image-editing.

💬 Research Conclusions:

– The system effectively predicts the performance of robotic policies in varied conditions and validates safety, through 1600+ real-world evaluations, demonstrating its potential to enhance policy assessment in both safe and novel scenarios.

👉 Paper link: https://huggingface.co/papers/2512.10675

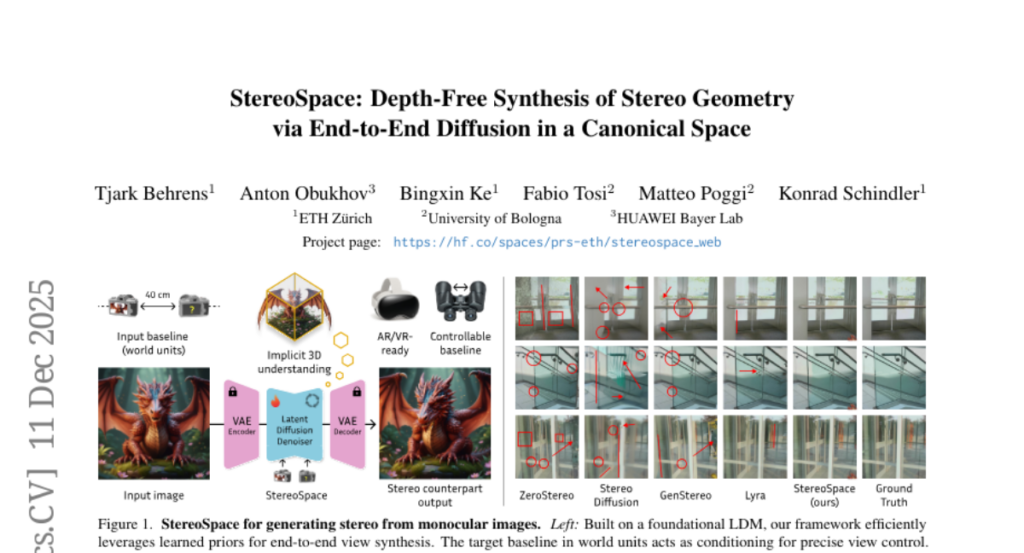

12. StereoSpace: Depth-Free Synthesis of Stereo Geometry via End-to-End Diffusion in a Canonical Space

🔑 Keywords: StereoSpace, viewpoint-conditioned diffusion, perceptual comfort, geometric consistency

💡 Category: Generative Models

🌟 Research Objective:

– Introduce StereoSpace, a diffusion-based framework for generating stereo images without explicit depth or warping.

🛠️ Research Methods:

– Utilize viewpoint conditioning to model geometry and infer correspondences with a canonical rectified space.

– Implement an end-to-end evaluation protocol devoid of ground truth geometry to ensure fair testing.

💬 Research Conclusions:

– StereoSpace achieves superior performance in generating sharp parallax and robust stereo images, surpassing existing warp & inpaint and latent-warping methods.

– The framework establishes viewpoint-conditioned diffusion as a scalable, depth-free solution for stereo generation.

👉 Paper link: https://huggingface.co/papers/2512.10959

13. Stronger Normalization-Free Transformers

🔑 Keywords: Derf, Dynamic Tanh, normalization, AI-generated summary

💡 Category: Machine Learning

🌟 Research Objective:

– To explore the design of point-wise normalization functions, with a focus on surpassing the performance of Dynamic Tanh (DyT) while improving generalization.

🛠️ Research Methods:

– Study intrinsic properties of point-wise functions and perform a large-scale search to discover effective function designs.

– Introduce Derf based on the rescaled Gaussian cumulative distribution function as an optimized point-wise normalization function.

💬 Research Conclusions:

– Derf outperforms existing normalization functions like LayerNorm, RMSNorm, and DyT across various domains including vision, speech representation, and DNA sequence modeling.

– Notable performance gains are primarily due to improved generalization, making Derf a practical option for normalization-free Transformer architectures.

👉 Paper link: https://huggingface.co/papers/2512.10938

14. MoRel: Long-Range Flicker-Free 4D Motion Modeling via Anchor Relay-based Bidirectional Blending with Hierarchical Densification

🔑 Keywords: 4D Gaussian Splatting, MoRel, Anchor Relay-based Bidirectional Blending, Memory-efficient, Temporal coherence

💡 Category: Computer Vision

🌟 Research Objective:

– The study introduces MoRel, a novel 4D Gaussian Splatting framework, aimed at improving dynamic video rendering through efficient memory usage and handling long-range motion.

🛠️ Research Methods:

– The research employs an Anchor Relay-based Bidirectional Blending mechanism to ensure temporally consistent modeling and a Feature-variance-guided Hierarchical Densification scheme to enhance rendering quality and manage feature-variance levels.

💬 Research Conclusions:

– MoRel successfully achieves temporally coherent and flicker-free long-range 4D reconstruction with bounded memory usage, demonstrating improved scalability and efficiency in Gaussian-based dynamic scene representations.

👉 Paper link: https://huggingface.co/papers/2512.09270

15. H2R-Grounder: A Paired-Data-Free Paradigm for Translating Human Interaction Videos into Physically Grounded Robot Videos

🔑 Keywords: video-to-video translation, generative model, unpaired robot videos, inpainting, temporal coherence

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– To develop a framework that translates human-object interaction videos into realistic robot manipulation videos using a generative model and unpaired data.

🛠️ Research Methods:

– Implements a transferable representation with inpainting and visual cues to generate motion-consistent robot videos.

– Fine-tunes a SOTA video diffusion model using in-context learning for temporal coherence and leveraging rich prior knowledge.

💬 Research Conclusions:

– The proposed framework achieves significantly more realistic and physically grounded robot motions compared to baselines, offering a promising direction for advancing robot learning from unlabeled human videos.

👉 Paper link: https://huggingface.co/papers/2512.09406

16. Tool-Augmented Spatiotemporal Reasoning for Streamlining Video Question Answering Task

🔑 Keywords: Spatiotemporal Reasoning, Multimodal Large Language Models, Video Question Answering, Video Toolkit, STAR Framework

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– The objective is to enhance multimodal large language models with a spatiotemporal reasoning framework for improved video question answering capabilities.

🛠️ Research Methods:

– Implementation of a Spatiotemporal Reasoning Framework (STAR) to strategically schedule tools for spatial and temporal understanding.

– Integration of a comprehensive Video Toolkit to strengthen the reasoning capabilities of foundation models within the video domain.

💬 Research Conclusions:

– The STAR framework significantly enhances the performance of GPT-4o on VideoMME by 8.2% and LongVideoBench by 4.6%.

– The approach marks a critical step towards developing autonomous and intelligent video analysis assistants.

👉 Paper link: https://huggingface.co/papers/2512.10359

17. The FACTS Leaderboard: A Comprehensive Benchmark for Large Language Model Factuality

🔑 Keywords: FACTS Leaderboard, factual accuracy, language models, automated judge models, AI-generated summary

💡 Category: Natural Language Processing

🌟 Research Objective:

– Introduce The FACTS Leaderboard suite to evaluate the factual accuracy of language models across diverse scenarios.

🛠️ Research Methods:

– Utilizes four sub-leaderboards to assess different aspects: image-based questions, closed-book factoid questions, information-seeking with search API, and document-grounded long-form responses.

💬 Research Conclusions:

– Provides a comprehensive and robust measure of a model’s factuality by using automated judge models and maintaining both public and private leaderboard splits for integrity and external participation.

👉 Paper link: https://huggingface.co/papers/2512.10791

18. Fed-SE: Federated Self-Evolution for Privacy-Constrained Multi-Environment LLM Agents

🔑 Keywords: Federated Self-Evolution, Privacy Constraints, Low-Rank Subspace, Parameter-Efficient Fine-Tuning

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The objective is to enhance LLM agents in privacy-constrained environments using a Federated Self-Evolution framework.

🛠️ Research Methods:

– Introduces a local evolution-global aggregation paradigm with parameter-efficient fine-tuning locally and global aggregation within a low-rank subspace to overcome federated learning challenges.

💬 Research Conclusions:

– Experiments demonstrate approximately 18% improvement in task success rates over federated baselines, validating the framework’s effectiveness in cross-environment knowledge transfer.

👉 Paper link: https://huggingface.co/papers/2512.08870

19. ReViSE: Towards Reason-Informed Video Editing in Unified Models with Self-Reflective Learning

🔑 Keywords: ReViSE, reasoning-informed video editing, visual fidelity, vision-language models, intrinsic feedback

💡 Category: Computer Vision

🌟 Research Objective:

– The study aims to bridge the gap between video model reasoning and visual editing by integrating reasoning capabilities with visual editing to enhance accuracy and fidelity.

🛠️ Research Methods:

– The methods involve developing the ReViSE framework, using a self-reflective reasoning mechanism, and introducing the Reason-Informed Video Editing (RVE) task supported by the comprehensive RVE-Bench benchmark.

💬 Research Conclusions:

– Extensive experiments show that ReViSE achieves a 32% improvement in editing accuracy and visual fidelity compared to state-of-the-art methods, demonstrating significant advancements in reasoning-informed video editing.

👉 Paper link: https://huggingface.co/papers/2512.09924

20. Confucius Code Agent: An Open-sourced AI Software Engineer at Industrial Scale

🔑 Keywords: Confucius Code Agent, AI software engineering, coding agents, Confucius SDK, industrial scale

💡 Category: AI Systems and Tools

🌟 Research Objective:

– The paper aims to develop the Confucius Code Agent (CCA), an open-source AI software engineer designed to operate at an industrial scale, addressing transparency, extensibility, and robust performance requirements.

🛠️ Research Methods:

– CCA is built using the Confucius SDK, which integrates Agent Experience (AX), User Experience (UX), and Developer Experience (DX). The SDK utilizes a unified orchestrator with hierarchical working memory and a meta-agent for continuous improvement through a build-test-improve loop.

💬 Research Conclusions:

– The Confucius Code Agent demonstrates significant performance improvements on real-world software engineering tasks, achieving a Resolve@1 performance of 54.3% on SWE-Bench-Pro, thereby bridging the gap between research and production-grade AI systems.

👉 Paper link: https://huggingface.co/papers/2512.10398

21. Omni-Attribute: Open-vocabulary Attribute Encoder for Visual Concept Personalization

🔑 Keywords: Omni-Attribute, image attribute encoder, attribute-specific representations, high-fidelity, compositional generation

💡 Category: Computer Vision

🌟 Research Objective:

– To introduce Omni-Attribute, an open-vocabulary image attribute encoder for precise visual concept personalization and compositional generation.

🛠️ Research Methods:

– Curating semantically linked image pairs annotated with attributes.

– Utilizing a dual-objective training paradigm balancing generative fidelity with contrastive disentanglement.

💬 Research Conclusions:

– The resulting embeddings are effective for open-vocabulary attribute retrieval, personalization, and compositional generation, achieving state-of-the-art performance across multiple benchmarks.

👉 Paper link: https://huggingface.co/papers/2512.10955

22. X-Humanoid: Robotize Human Videos to Generate Humanoid Videos at Scale

🔑 Keywords: Embodied AI, Generative Video Editing, Human-to-Humanoid Translation, Video-to-Video Structure, Unreal Engine

💡 Category: Robotics and Autonomous Systems

🌟 Research Objective:

– Develop X-Humanoid to create extensive datasets for training embodied AI models via generative video editing, translating human actions into humanoid robot actions.

🛠️ Research Methods:

– Utilize the Wan 2.2 model adapted to a video-to-video structure and finetune it for human-to-humanoid translation. Implement a data creation pipeline with Unreal Engine to generate paired synthetic videos.

💬 Research Conclusions:

– Created over 3.6 million “robotized” humanoid video frames, with 69% of users rating the method superior in motion consistency and 62.1% in embodiment correctness compared to existing baselines.

👉 Paper link: https://huggingface.co/papers/2512.04537

23. MOA: Multi-Objective Alignment for Role-Playing Agents

🔑 Keywords: MOA, Reinforcement Learning, Multi-Objective Alignment, Thought-Augmented Rollout

💡 Category: Reinforcement Learning

🌟 Research Objective:

– The study aims to optimize multiple dimensions of role-playing agents (RPAs) using a novel reinforcement-learning framework called MOA (Multi-Objective Alignment), enhancing performance across diverse scenarios and complex conversations.

🛠️ Research Methods:

– Implementation of a multi-objective optimization strategy that simultaneously trains on fine-grained rubrics.

– Integration of thought-augmented rollout with off-policy guidance to address diversity and quality issues in model outputs.

💬 Research Conclusions:

– MOA allows an 8B model to match or surpass strong baselines such as GPT-4o and Claude in various dimensions, proving its potential in building RPAs that effectively handle role knowledge, persona style, diverse scenarios, and complex conversations.

👉 Paper link: https://huggingface.co/papers/2512.09756

24. DuetSVG: Unified Multimodal SVG Generation with Internal Visual Guidance

🔑 Keywords: DuetSVG, multimodal model, SVG generation, image tokens, test-time scaling strategy

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Introduce DuetSVG, a unified multimodal model that generates both image and SVG tokens to enhance SVG quality.

🛠️ Research Methods:

– DuetSVG is trained on image and SVG datasets and utilizes a novel test-time scaling strategy for improved SVG decoding.

💬 Research Conclusions:

– DuetSVG outperforms existing methods by producing visually faithful, semantically aligned, and syntactically clean SVGs.

👉 Paper link: https://huggingface.co/papers/2512.10894

25.