AI Native Daily Paper Digest – 20251226

1. Latent Implicit Visual Reasoning

🔑 Keywords: Large Multimodal Models, visual reasoning, task-agnostic, supervised learning, state-of-the-art

💡 Category: Multi-Modal Learning

🌟 Research Objective:

– Address the limitations of Large Multimodal Models in handling predominantly visual reasoning tasks by introducing a task-agnostic mechanism.

🛠️ Research Methods:

– Develop a method to train LMMs using visual reasoning tokens without explicit supervision to enable task-adaptive image re-encoding.

💬 Research Conclusions:

– The proposed approach outperforms direct fine-tuning and achieves state-of-the-art results across a range of vision-centric tasks, enhancing generalization to multi-task instruction tuning.

👉 Paper link: https://huggingface.co/papers/2512.21218

2. Spatia: Video Generation with Updatable Spatial Memory

🔑 Keywords: Spatia, Spatial Memory, 3D Scene Point Cloud, Visual SLAM, Video Generation

💡 Category: Generative Models

🌟 Research Objective:

– To introduce Spatia, a spatial memory-aware video generation framework that maintains long-term spatial and temporal consistency in video generation.

🛠️ Research Methods:

– Utilizes a 3D scene point cloud as persistent spatial memory and employs visual SLAM and dynamic-static disentanglement for maintaining spatial consistency.

💬 Research Conclusions:

– The framework successfully enables realistic video generation, allowing for explicit camera control and 3D-aware interactive editing, providing a geometrically grounded approach for scalable video production.

👉 Paper link: https://huggingface.co/papers/2512.15716

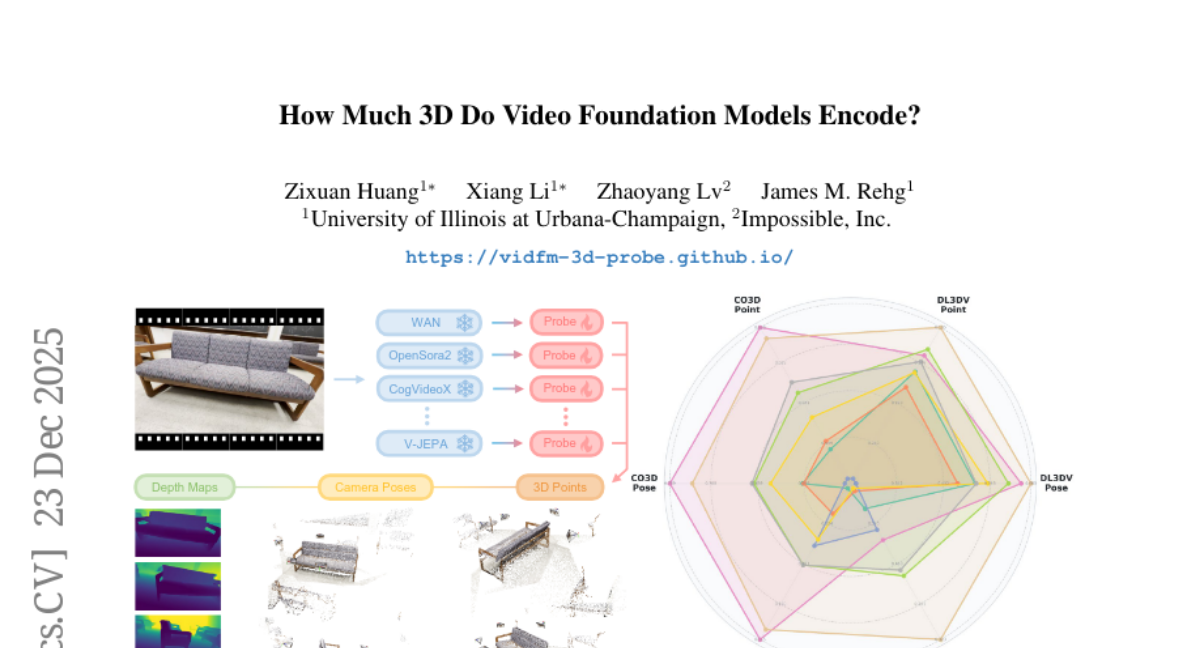

3. How Much 3D Do Video Foundation Models Encode?

🔑 Keywords: Video Foundation Models, 3D awareness, state-of-the-art video generation models, 3D objects and scenes, scalable 3D models

💡 Category: Computer Vision

🌟 Research Objective:

– To quantify the 3D understanding of existing Video Foundation Models (VidFMs) pretrained on vast video data.

🛠️ Research Methods:

– A model-agnostic framework was proposed to measure the 3D awareness of various VidFMs by estimating multiple 3D properties from their features via shallow read-outs.

💬 Research Conclusions:

– State-of-the-art video generation models demonstrate a strong 3D understanding of objects and scenes, even surpassing expert models specifically trained for 3D tasks, despite not being trained on any 3D data.

👉 Paper link: https://huggingface.co/papers/2512.19949

4. GTR-Turbo: Merged Checkpoint is Secretly a Free Teacher for Agentic VLM Training

🔑 Keywords: Multi-turn Reinforcement Learning, Vision-Language Models, GTR-Turbo

💡 Category: Reinforcement Learning

🌟 Research Objective:

– To develop GTR-Turbo, an efficient reinforcement learning method that improves upon Guided Thought Reinforcement without relying on expensive teacher models.

🛠️ Research Methods:

– GTR-Turbo merges weights from checkpoints during RL training and uses this merged model to guide training via supervised fine-tuning or soft logit distillation.

💬 Research Conclusions:

– GTR-Turbo enhances baseline model accuracy by 10-30%, while cutting training time by 50% and compute cost by 60% compared to GTR, all without using privileged models.

👉 Paper link: https://huggingface.co/papers/2512.13043

5.

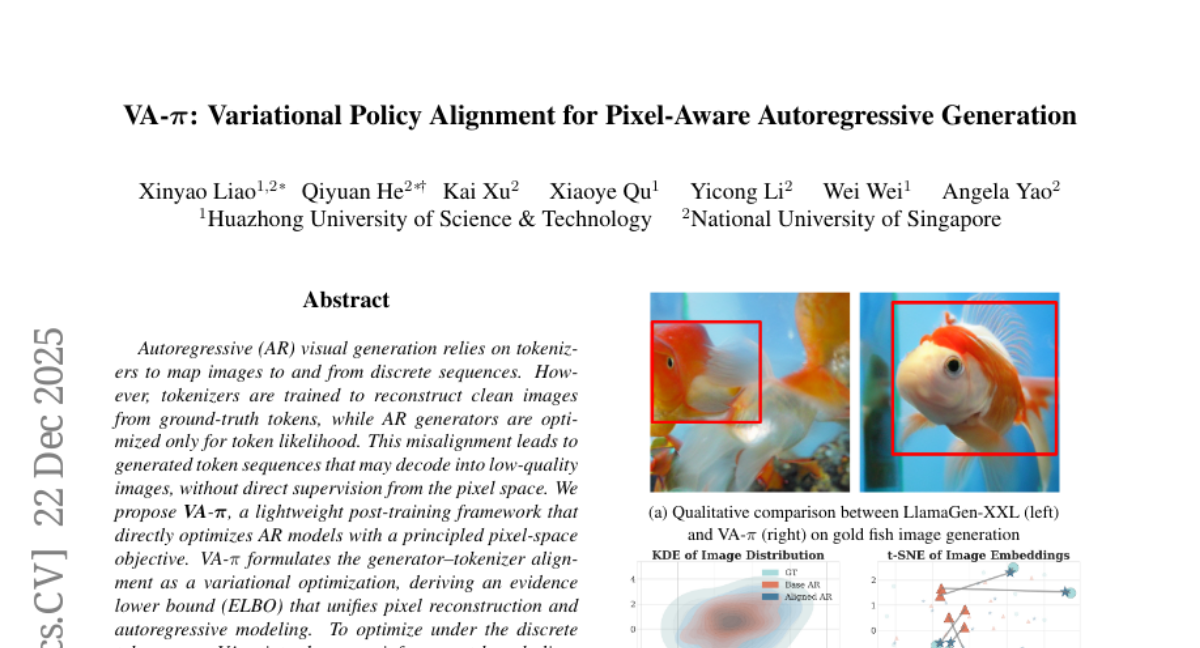

6. VA-π: Variational Policy Alignment for Pixel-Aware Autoregressive Generation

🔑 Keywords: VA-π, Autoregressive (AR) visual generation, tokenizers, intrinsic reward, reinforcement-based alignment

💡 Category: Generative Models

🌟 Research Objective:

– To improve image quality and performance of autoregressive visual generators using VA-π, without retraining tokenizers or employing external rewards.

🛠️ Research Methods:

– Implementation of a post-training framework that directly optimizes AR models with a pixel-space objective, employing a reinforcement-based alignment strategy as intrinsic reward.

💬 Research Conclusions:

– VA-π enables significant improvement in image generation quality, as evidenced by reduced FID and improved IS scores on LlamaGen-XXL, and achieves notable gains in text-to-image tasks.

👉 Paper link: https://huggingface.co/papers/2512.19680

7. Schoenfeld’s Anatomy of Mathematical Reasoning by Language Models

🔑 Keywords: Large language models, Reasoning traces, ThinkARM, Schoenfeld’s Episode Theory

💡 Category: Knowledge Representation and Reasoning

🌟 Research Objective:

– To identify and analyze the cognitive structure and steps of reasoning in large language models beyond surface-level statistics through the introduction of the ThinkARM framework.

🛠️ Research Methods:

– Utilization of Schoenfeld’s Episode Theory as a lens to abstract reasoning traces into functional reasoning steps like Analysis, Explore, Implement, Verify, etc., and the application of this abstraction to mathematical problem solving in various models.

💬 Research Conclusions:

– The abstraction reveals structural differences between reasoning and non-reasoning models, highlights exploration as a critical branching step for correctness, and shows that efficiency-oriented methods selectively suppress evaluative feedback rather than uniformly shortening responses.

👉 Paper link: https://huggingface.co/papers/2512.19995

8. Emergent temporal abstractions in autoregressive models enable hierarchical reinforcement learning

🔑 Keywords: Autoregressive models, Reinforcement Learning, Hierarchical structure, Internal RL

💡 Category: Reinforcement Learning

🌟 Research Objective:

– This study investigates how to improve efficiency in reinforcement learning by exploring within the internal representations of large-scale autoregressive models, focusing on overcoming inefficiencies when rewards are sparse.

🛠️ Research Methods:

– The approach introduces a higher-order, non-causal sequence model that controls the residual stream activations of a base autoregressive model, allowing for the discovery of temporally-abstract actions and latent action generation.

💬 Research Conclusions:

– The research demonstrates that using internal reinforcement learning (“internal RL”) enables efficient learning from sparse rewards and shows potential for implementing hierarchical reinforcement learning within foundational models.

👉 Paper link: https://huggingface.co/papers/2512.20605