AI Native Daily Paper Digest – 20251231

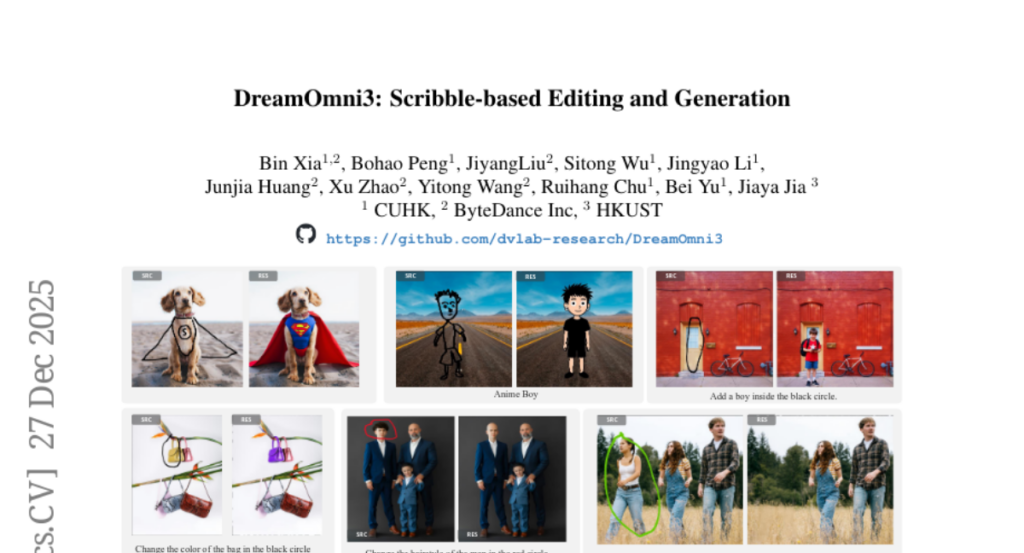

1. UltraShape 1.0: High-Fidelity 3D Shape Generation via Scalable Geometric Refinement

🔑 Keywords: 3D geometry generation, diffusion framework, high-fidelity, spatial localization

💡 Category: Generative Models

🌟 Research Objective:

– Introduce UltraShape 1.0, a scalable framework for generating high-fidelity 3D geometry.

🛠️ Research Methods:

– Implement a two-stage generation pipeline with coarse global structure synthesis followed by detailed refinement.

– Develop a comprehensive data processing pipeline featuring a novel watertight processing method and high-quality data filtering.

– Decouple spatial localization from geometric detail synthesis during the diffusion process using voxel-based refinement.

💬 Research Conclusions:

– UltraShape 1.0 demonstrates competitive performance in both data processing quality and geometry generation.

– The framework is trained on publicly available 3D datasets, achieving strong geometric quality with limited resources.

– Future research is supported through the release of all code and trained models.

👉 Paper link: https://huggingface.co/papers/2512.21185

2. End-to-End Test-Time Training for Long Context

🔑 Keywords: Continual Learning, Transformer, Test-Time Training, Meta-Learning

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study reframes long-context language modeling as a continual learning problem rather than an architecture design problem.

🛠️ Research Methods:

– Use a standard Transformer with sliding-window attention, improve its initialization through meta-learning, and apply End-to-End Test-Time Training (TTT-E2E).
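The core idea of test-time training, continuing to learn from the context while reading it, can be illustrated with a toy sketch. This is not the paper's implementation: the bigram logit table `W`, the learning rate, and the plain SGD update on next-token cross-entropy are all assumptions made for illustration, and the sliding-window attention and meta-learned initialization are not modeled.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    s = sum(exps)
    return [e / s for e in exps]

def ttt_pass(tokens, vocab_size, lr=0.5, update=True):
    """Read `tokens` left to right and return the mean next-token loss.

    W[a][b] is the logit for token b following token a. This toy bigram
    table is a hypothetical stand-in for the weights a real model would
    adapt at test time.
    """
    W = [[0.0] * vocab_size for _ in range(vocab_size)]
    total = 0.0
    for prev, nxt in zip(tokens, tokens[1:]):
        probs = softmax(W[prev])
        total += -math.log(probs[nxt])  # loss is measured before the update
        if update:
            # Cross-entropy gradient w.r.t. the logits is (probs - one_hot).
            for b in range(vocab_size):
                grad = probs[b] - (1.0 if b == nxt else 0.0)
                W[prev][b] -= lr * grad
    return total / (len(tokens) - 1)

# A repetitive context: the pattern can only be learned by reading it.
context = [0, 1, 2, 3] * 50
frozen = ttt_pass(context, vocab_size=4, update=False)
adapted = ttt_pass(context, vocab_size=4, update=True)
print(f"frozen weights:  mean loss {frozen:.3f}")
print(f"test-time SGD:   mean loss {adapted:.3f}")
```

With frozen weights the toy model stays at the uniform-guess loss, while the version that takes a gradient step per token compresses the repeating pattern into `W` and its loss drops. The per-token work is the same at every position, which is the intuition behind constant inference latency.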

💬 Research Conclusions:

– TTT-E2E scales with context length similarly to full-attention Transformers, yet provides constant inference latency akin to RNNs, making inference significantly faster than full attention at long context lengths.

👉 Paper link: https://huggingface.co/papers/2512.23675

3. GraphLocator: Graph-guided Causal Reasoning for Issue Localization

🔑 Keywords: Issue Localization, Automated Software Engineering, Causal Issue Graph, GraphLocator

💡 Category: AI Systems and Tools

🌟 Research Objective:

– To address the semantic gap between natural language issue descriptions and their corresponding source code in software repositories.

🛠️ Research Methods:

– Proposed GraphLocator, which uses causal structure discovery and dynamic issue disentangling to handle symptom-to-cause and one-to-many mismatches in issue localization.

💬 Research Conclusions:

– GraphLocator demonstrates superior performance over baselines with significant improvements in localization accuracy, recall, and precision, effectively tackling both symptom-to-cause and one-to-many mismatch scenarios.

👉 Paper link: https://huggingface.co/papers/2512.22469

4.

5. GateBreaker: Gate-Guided Attacks on Mixture-of-Expert LLMs

🔑 Keywords: Mixture-of-Experts (MoE), Large Language Models (LLMs), safety alignment, GateBreaker, attack framework

💡 Category: Natural Language Processing

🌟 Research Objective:

– The study aims to understand and enhance the safety alignment mechanisms in Mixture-of-Experts architectures within Large Language Models, which have not been extensively examined compared to dense architectures.

🛠️ Research Methods:

– Introduction of GateBreaker, an attack framework that evaluates MoE LLMs’ safety by identifying and selectively disabling neurons critical for safety, through gate-level profiling, expert-level localization, and targeted safety removal.
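As a rough illustration of what gate-level profiling could look like, the sketch below counts how often each expert is selected by top-k routing for two sets of inputs and compares the frequencies. The gate logits, the choice of k = 2, and the function names are hypothetical; the digest does not describe the paper's actual profiling procedure, and the later stages (expert-level localization, targeted removal) are not shown.

```python
from collections import Counter

def top_k_experts(gate_logits, k):
    """Indices of the k largest gate logits, i.e. the experts a token is routed to."""
    order = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)
    return order[:k]

def routing_profile(batch_gate_logits, k=2):
    """Fraction of tokens routed to each expert under top-k routing."""
    counts = Counter()
    for logits in batch_gate_logits:
        counts.update(top_k_experts(logits, k))
    total = len(batch_gate_logits)
    return {expert: c / total for expert, c in counts.items()}

# Hypothetical gate logits for 4 experts; one row per token.
set_a = [[2.0, 0.1, 0.3, 1.5], [1.8, 0.2, 0.1, 1.1], [2.2, 0.0, 0.4, 0.9]]
set_b = [[0.1, 1.9, 1.2, 0.2], [0.3, 1.5, 0.2, 1.4], [0.0, 2.1, 1.7, 0.1]]

profile_a = routing_profile(set_a)
profile_b = routing_profile(set_b)

# Positive shift: the expert is selected more often for set A than for set B.
shift = {e: profile_a.get(e, 0.0) - profile_b.get(e, 0.0) for e in range(4)}
most_shifted = max(shift, key=shift.get)
print(shift, most_shifted)
```

Experts whose routing frequency differs sharply between input sets are natural candidates for closer inspection, which is the kind of signal a gate-level profile provides.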

💬 Research Conclusions:

– The research identifies that safety is concentrated in a small subset of neurons within MoE models, and disabling approximately 3% of these neurons markedly increases the attack success rate with minimal compromise on utility. Additionally, this safety vulnerability extends to related models and vision language models.

👉 Paper link: https://huggingface.co/papers/2512.21008

6. Evaluating Parameter Efficient Methods for RLVR

🔑 Keywords: Parameter-Efficient Fine-Tuning, Reinforcement Learning, Verifiable Feedback, LoRA, Mathematical Reasoning

💡 Category: Reinforcement Learning

🌟 Research Objective:

– This study evaluates Parameter-Efficient Fine-Tuning (PEFT) methods within the context of Reinforcement Learning with Verifiable Rewards (RLVR) to enhance language models’ reasoning capabilities.

🛠️ Research Methods:

– Conducted the first comprehensive evaluation of over 12 PEFT methods across the DeepSeek-R1-Distill model families on mathematical reasoning benchmarks.

💬 Research Conclusions:

– Structural variants such as DoRA, AdaLoRA, and MiSS outperform the commonly used LoRA.

– Identified a spectral collapse phenomenon in SVD-informed strategies affecting RL optimization.

– Extreme parameter reduction, as in VeRA, compromises reasoning capacity.

– The study provides a guide advocating for more exploration of parameter-efficient RL methods.
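To make the parameter budgets behind these conclusions concrete, here is a back-of-the-envelope count of trainable parameters for a single weight matrix under LoRA, DoRA, and VeRA. The layer size (4096 × 4096) and the ranks are illustrative assumptions, not settings from the study, and the DoRA count assumes one magnitude entry per input column, a convention that varies between implementations.

```python
def full_params(d_in, d_out):
    """Trainable parameters when the whole weight matrix is fine-tuned."""
    return d_in * d_out

def lora_params(d_in, d_out, r):
    """LoRA trains two low-rank factors: A (r x d_in) and B (d_out x r)."""
    return r * (d_in + d_out)

def dora_params(d_in, d_out, r):
    """DoRA adds a learned magnitude vector on top of a LoRA update.

    Assumption: one magnitude entry per input column (d_in entries).
    """
    return lora_params(d_in, d_out, r) + d_in

def vera_params(d_in, d_out, r):
    """VeRA freezes shared random A and B and trains only two scaling vectors
    (one of length r, one of length d_out)."""
    return r + d_out

d_in = d_out = 4096  # illustrative layer size
print("full:", full_params(d_in, d_out))        # 16777216
print("LoRA r=16:", lora_params(d_in, d_out, 16))   # 131072
print("DoRA r=16:", dora_params(d_in, d_out, 16))   # 135168
print("VeRA r=256:", vera_params(d_in, d_out, 256)) # 4352
```

Under these assumptions DoRA costs only about 3% more trainable parameters than LoRA, while VeRA trains roughly 30 times fewer, which gives a sense of how extreme its parameter reduction is.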

👉 Paper link: https://huggingface.co/papers/2512.23165

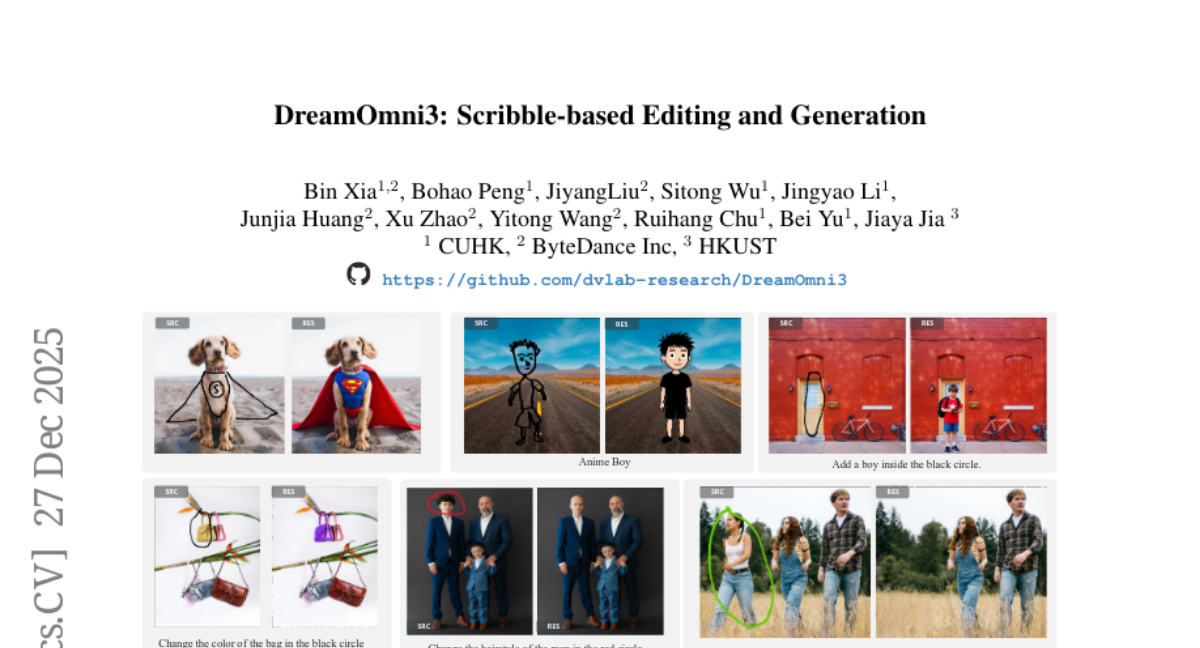

7. DreamOmni3: Scribble-based Editing and Generation

🔑 Keywords: unified generation and editing models, text prompts, scribble-based editing, graphical user interface, DreamOmni3

💡 Category: Generative Models

🌟 Research Objective:

– To propose scribble-based editing and generation tasks that enable flexible creation in a GUI by combining text, images, and freehand sketches.

🛠️ Research Methods:

– Developed a data synthesis pipeline and framework design for scribble-based editing and generation, defining key tasks and employing a joint input scheme to handle complex edits.

💬 Research Conclusions:

– DreamOmni3 has demonstrated outstanding performance in the proposed tasks. Comprehensive benchmarks are established, and models and code will be publicly released to promote further research.

👉 Paper link: https://huggingface.co/papers/2512.22525