AI Native Foundation Weekly Newsletter: 11 April 2025

Contents

- Meta Llama 4: Multimodal AI with Revolutionary 10M Context Window
- Musk’s xAI Acquires X in $113B Combined Valuation Deal
- Midjourney V7 Alpha: Smarter, Faster, With Built-in Personalization
- Google’s TxGemma: Open AI Models Accelerating Drug Development
- Runway Gen-4: AI Revolution in Consistent Filmmaking

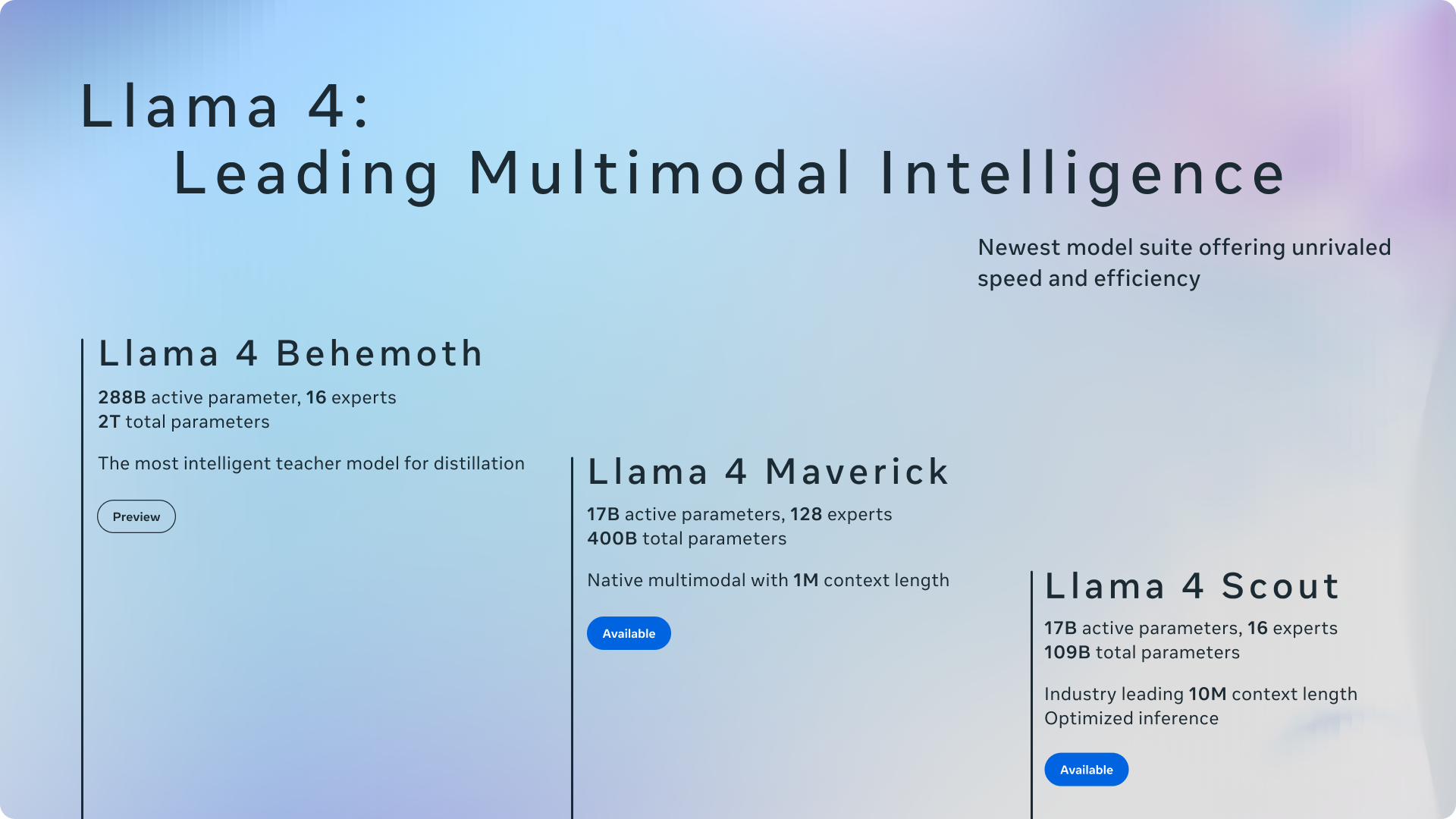

Meta Llama 4: Multimodal AI with Revolutionary 10M Context Window

Meta introduces the Llama 4 family with two models available now: Scout (17B active parameters, 16 experts) offers a 10M-token context window and fits on a single H100 GPU, while Maverick (17B active parameters, 128 experts) outperforms GPT-4o on key benchmarks. Both are natively multimodal, supporting text, images, and video. Meta also previews Behemoth, its teacher model with 288B active parameters, which it reports outperforms GPT-4.5 on STEM benchmarks. According to Meta, the models show reduced political bias and more balanced viewpoints than Llama 3, with refusal rates below 2%.

Musk’s xAI Acquires X in $113B Combined Valuation Deal

Elon Musk has merged his AI startup xAI with social platform X in an all-stock transaction valuing xAI at $80B and X at $33B. The strategic combination consolidates X’s 600M users and valuable training data under xAI’s umbrella, strengthening Grok’s competitive position against rivals like OpenAI. The deal creates a new holding company, xAI Holdings Corp, making X’s massive user content directly available to power Musk’s AI ambitions while potentially simplifying fundraising efforts for both entities.

Midjourney V7 Alpha: Smarter, Faster, With Built-in Personalization

V7 Alpha is now available for community testing. The upgraded model interprets text prompts more accurately, produces better results from image prompts, renders higher-quality textures, and improves the coherence of all elements, including hands and bodies. Personalization is enabled by default; it requires a roughly 5-minute unlock and can be toggled on or off at any time. The new Draft Mode renders 10x faster at half the cost, which suits rapid iteration. V7 launches in Turbo and Relax modes, with the standard speed mode still being optimized. Upscaling and editing features currently use V6 models, with V7 upgrades coming soon.

Google’s TxGemma: Open AI Models Accelerating Drug Development

Google introduces TxGemma, a collection of open models fine-tuned from Gemma 2 to improve therapeutic development efficiency. Available in three sizes (2B, 9B, 27B) with specialized ‘predict’ and ‘chat’ versions, these models can classify molecule properties, predict binding affinity, and generate chemical reactions. The 27B model outperforms previous systems on 45 of 66 tasks. Researchers can access TxGemma on Vertex AI and Hugging Face, with Colab notebooks for inference, fine-tuning, and implementing Agentic-Tx workflows to tackle complex research problems.

Runway Gen-4: AI Revolution in Consistent Filmmaking

Runway’s Gen-4 keeps subjects consistent across scenes. Using just one reference image, filmmakers can maintain characters, objects, and environments while changing angles, lighting, and settings, which suits storyboarding, VFX integration, and production design. Gen-4 joins Runway’s ecosystem of AI tools, including Act-One for character performance animation and Frames for image generation, and lets creators generate production-ready video with realistic physics and stronger prompt understanding. No fine-tuning is required.