AI Native Weekly Newsletter: 27 June 2025

This week’s case study looks at how Replit’s “vibe coding” agent took the company from $10M to $100M ARR in 5.5 months. Elsewhere, Claude opens artifact-based app creation to all users, Google releases its open-source Gemini CLI for terminal-native workflows, ChatGPT adds file search to its connectors, and ElevenLabs introduces a voice-first AI assistant that can take real actions. From agents to applets, this week’s updates show AI Native tools becoming more integrated, personal, and autonomous than ever.

Contents

- AI Native Case Study | Replit’s ‘Vibe Coding’ Rocketship: From $10M to $100M ARR in 5.5 Months
- Claude Artifacts: Build AI Apps Without Coding – All Users
- Google launches open-source Gemini CLI for developers
- ChatGPT Expands Connectors with New Search Capabilities
- 11.ai Combines Voice + MCP for Action-Taking AI Assistant
- Google Unveils On-Device AI for Autonomous Robots
- FLUX.1 Kontext [dev] Sets a New Standard for Open Image Editing
- Kimi-Researcher Agent Achieves 26.9% on HLE via Reinforcement Learning

AI Native Case Study | Replit’s ‘Vibe Coding’ Rocketship: From $10M to $100M ARR in 5.5 Months

Replit’s 10x revenue growth in under six months is more than a milestone: it signals the opening chapter of the AI Native personal application era. With the launch of Replit Agent in September 2024, the company evolved from a freemium coding tool into a full-stack AI development environment. Its “vibe coding” paradigm lets users build production-grade apps through natural language, shrinking the gap between idea and deployment. With 34 million users and 100,000 apps in production, Replit shows how usage-based pricing, generative agents, and open collaboration can converge into a self-sustaining ecosystem. This trajectory reflects a broader shift in the software industry: AI Native creation is expanding beyond professional developers and increasingly enables individuals to turn ideas into working applications.
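As a back-of-the-envelope check on the headline numbers, the snippet below computes the constant compound monthly growth rate that would take ARR from $10M to $100M in 5.5 months. It assumes smooth monthly compounding, which real revenue curves rarely follow, and the function names are illustrative rather than taken from any Replit source.

```python
import math


def implied_monthly_growth(start_arr: float, end_arr: float, months: float) -> float:
    """Constant compound monthly rate that grows start_arr into end_arr over `months`."""
    return (end_arr / start_arr) ** (1.0 / months) - 1.0


def months_to_multiply(factor: float, monthly_rate: float) -> float:
    """Months needed to grow by `factor` at a constant compound monthly rate."""
    return math.log(factor) / math.log(1.0 + monthly_rate)


# Headline figures from the case study: $10M -> $100M ARR in 5.5 months.
rate = implied_monthly_growth(10e6, 100e6, 5.5)
print(f"Implied monthly growth: {rate:.1%}")
```

Under that simplifying assumption, the implied rate is roughly 52% month over month.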

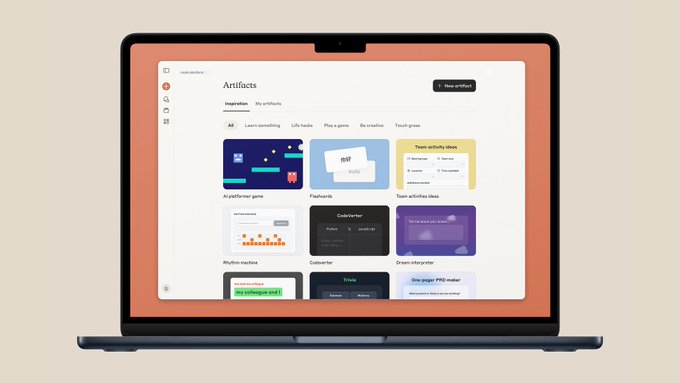

Claude Artifacts: Build AI Apps Without Coding – All Users

Anthropic has launched an update to the Claude app that turns AI-generated artifacts into fully interactive, shareable apps, with no coding required. Users can now create tools such as flashcard generators, storytelling assistants, or educational games simply by describing their ideas in conversation. A redesigned artifacts space lets users browse, customize, and organize their creations in one place. Available to all Claude users (Free, Pro, and Max), the update is a step toward AI Native app creation for everyday users.

Google launches open-source Gemini CLI for developers

Google has released Gemini CLI, an open-source AI assistant that brings Gemini 2.5 Pro directly into the terminal. Designed for developers, it supports natural-language coding, debugging, task automation, and search integration. With what Google calls industry-leading free usage limits (60 requests per minute and 1,000 per day), Gemini CLI offers lightweight, extensible AI access and is released under the Apache 2.0 license. It also shares core technology with Gemini Code Assist, giving developers a consistent experience across the CLI and the IDE.
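For scripts that call Gemini in a loop, client-side throttling can keep a batch job from running into the quoted caps. The sketch below is a hypothetical helper, not part of Gemini CLI, that enforces both a per-minute and a per-day sliding window; the default limits mirror the free-tier figures quoted above, and the injectable clock is an assumption added so the logic can be tested without waiting.

```python
import time
from collections import deque
from typing import Callable


class DualWindowLimiter:
    """Hypothetical client-side limiter with a per-minute and a per-day sliding window.

    Defaults mirror the quoted free-tier limits (60 requests/min, 1,000 requests/day).
    """

    def __init__(self, per_minute: int = 60, per_day: int = 1000,
                 clock: Callable[[], float] = time.monotonic) -> None:
        # Each window: (length in seconds, max requests, timestamps of accepted requests).
        self.windows = [(60.0, per_minute, deque()), (86400.0, per_day, deque())]
        self.clock = clock

    def try_acquire(self) -> bool:
        """Record and allow a request if both windows have room; otherwise return False."""
        now = self.clock()
        for span, limit, stamps in self.windows:
            # Drop timestamps that have slid out of this window.
            while stamps and now - stamps[0] >= span:
                stamps.popleft()
            if len(stamps) >= limit:
                return False
        # Only record the request once every window has accepted it.
        for _, _, stamps in self.windows:
            stamps.append(now)
        return True
```

At a sustained 60 requests per minute, the 1,000-request daily cap is exhausted in about 17 minutes, which is why the limiter checks both windows before recording a request.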

ChatGPT Expands Connectors with New Search Capabilities

ChatGPT Pro users can now use chat search connectors for Dropbox, Box, Google Drive, Microsoft OneDrive, and SharePoint, finding files with queries like “Show me Q2 goals in Drive” or “Find last week’s roadmap in Box.” Results appear inline with links to the source files, so users can move straight into their workflow. This beta feature is not currently available to users in the EEA, Switzerland, or the UK. Connectors give ChatGPT access to users’ own data while maintaining security protocols.

11.ai Combines Voice + MCP for Action-Taking AI Assistant

ElevenLabs has launched 11.ai, a voice-first AI assistant that goes beyond answering questions to take real actions through Model Context Protocol (MCP) integration. Unlike traditional voice assistants, it can research customers, manage Linear tasks, catch up on Slack messages, and connect to tools such as Perplexity and Notion. It is built on ElevenLabs’ ultra-low-latency platform and offers more than 5,000 voices. The assistant is currently available as a free alpha while ElevenLabs collects feedback.
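MCP messages are JSON-RPC 2.0 objects, and an assistant asks a connected server to act by sending a `tools/call` request. The sketch below builds such a request and reads the text content from a result. The tool name `create_issue` and its arguments are hypothetical, the transport (stdio or HTTP) and the initialization handshake are omitted, and the announcement does not describe how 11.ai wires MCP internally.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request ids.
_ids = count(1)


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    })


def extract_text(response_json: str) -> str:
    """Join the text items of a tools/call result; raise on JSON-RPC or tool errors."""
    msg = json.loads(response_json)
    if "error" in msg:
        raise RuntimeError(msg["error"].get("message", "JSON-RPC error"))
    result = msg["result"]
    text = "\n".join(item["text"] for item in result.get("content", [])
                     if item.get("type") == "text")
    if result.get("isError"):
        raise RuntimeError(text or "tool reported an error")
    return text


# Hypothetical action: ask a task-tracker MCP server to open an issue.
request = make_tool_call("create_issue", {"title": "Fix login bug"})
```

Keeping tool-level failures (`isError`) separate from protocol errors (`error`) lets an assistant read a failed action back to the user instead of treating it as a broken connection.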

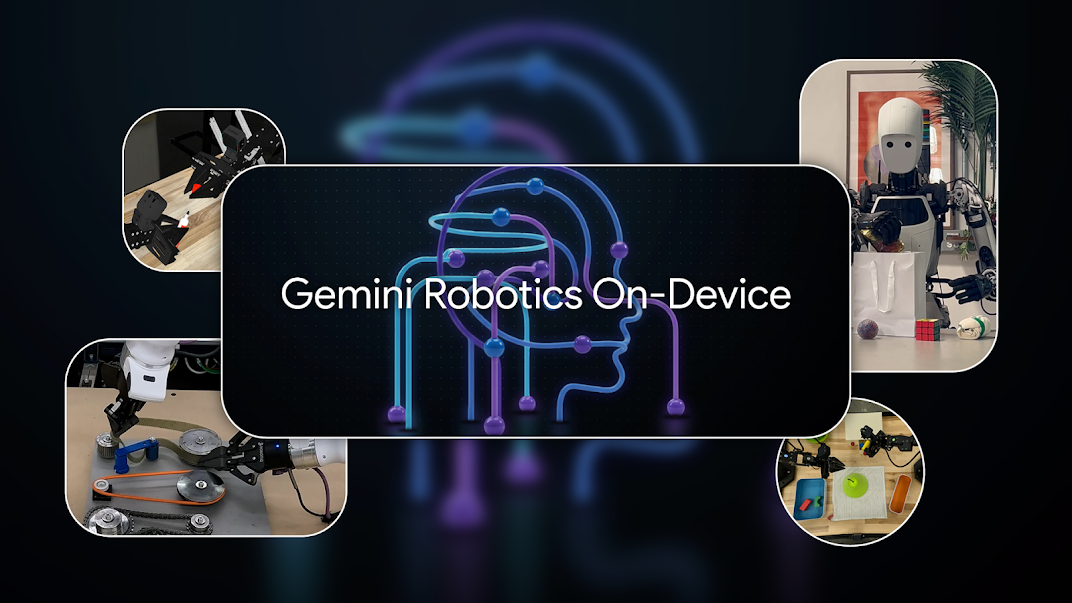

Google Unveils On-Device AI for Autonomous Robots

Google DeepMind has launched Gemini Robotics On-Device, a vision-language-action (VLA) model that runs locally on robots without an internet connection. It handles dexterous tasks such as unzipping bags and folding clothes, adapts to new tasks with just 50 to 100 demonstrations, and, according to Google, outperforms other on-device models. The model works across different robot types, including bi-arm Franka robots and Apollo humanoids, and offers low-latency performance for real-world applications with intermittent connectivity.

FLUX.1 Kontext [dev] Sets a New Standard for Open Image Editing

Black Forest Labs has released FLUX.1 Kontext [dev], which it describes as the first open-weight image editing model to match proprietary performance. The 12B-parameter developer version runs on consumer hardware while maintaining professional quality, supporting iterative editing, character preservation, and precise local and global edits. It is free for research and non-commercial use, with day-0 support in ComfyUI and Hugging Face.

Kimi-Researcher Agent Achieves 26.9% on HLE via Reinforcement Learning

Moonshot AI’s Kimi-Researcher agent achieved a state-of-the-art 26.9% Pass@1 on Humanity’s Last Exam (HLE) using end-to-end reinforcement learning, up from an 8.6% baseline. The autonomous agent averages 23 reasoning steps and explores more than 200 URLs per task, showing emergent abilities such as resolving conflicting sources and rigorous fact-checking. The result supports end-to-end agentic RL as a path to more capable AI, with implications for autonomous research assistants and complex problem-solving systems.
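Pass@1 is the probability that a single attempt solves a task, averaged over the benchmark. The snippet below uses the unbiased pass@k estimator introduced with the HumanEval benchmark (Chen et al., 2021), which reduces to the fraction of correct samples when k = 1. Moonshot’s exact sampling and grading setup for HLE is not described here, so treat this as a generic illustration with illustrative function names.

```python
from math import comb
from typing import Sequence, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one task, given n sampled attempts of which c are correct."""
    if n - c < k:
        # Every size-k subset of attempts contains at least one correct answer.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_pass_at_k(results: Sequence[Tuple[int, int]], k: int = 1) -> float:
    """Average per-task pass@k over (n, c) pairs."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Four tasks, one attempt each, one solved -> pass@1 of 0.25.
score = benchmark_pass_at_k([(1, 1), (1, 0), (1, 0), (1, 0)])
```

For scale, moving from 8.6% to 26.9% is a gain of 18.3 percentage points, roughly 3.1 times the baseline.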