AI Native Weekly Newsletter: 30 May 2025

Contents

- Claude Launches Voice Mode with Enhanced Safety Features
- Google’s SignGemma Translates Sign Language to Text
- DeepSeek-R1-0528 Achieves 87.5% on AIME 2025
- KLING AI 2.1: Faster Video Generation at Lower Credit Costs
- Tencent’s HunyuanVideo-Avatar: Multi-Character AI Animation
- Anthropic Open-Sources AI Circuit-Tracing Tools

Claude Launches Voice Mode with Enhanced Safety Features

Claude’s new voice mode supports complete spoken conversations in the iOS and Android apps and is available on both free and paid plans. Key features include five voice options, hands-free operation, and saved transcripts. Paid users also get Google Workspace integration, so Claude can access their documents during a conversation. Enterprise admins can disable voice mode across their organization. Anthropic says voice interactions are covered by built-in safety measures, including content filtering and risk-mitigation protocols.

Google’s SignGemma Translates Sign Language to Text

Google has announced SignGemma, which it describes as its most capable model for translating sign language into spoken-language text. The open model is slated to join the Gemma family later this year, giving developers a new foundation for building inclusive technology. Google positions SignGemma as an accessibility tool that could help deaf and hard-of-hearing people communicate more easily with the wider community. The company is collecting feedback and inviting interested developers and users to sign up for early testing.

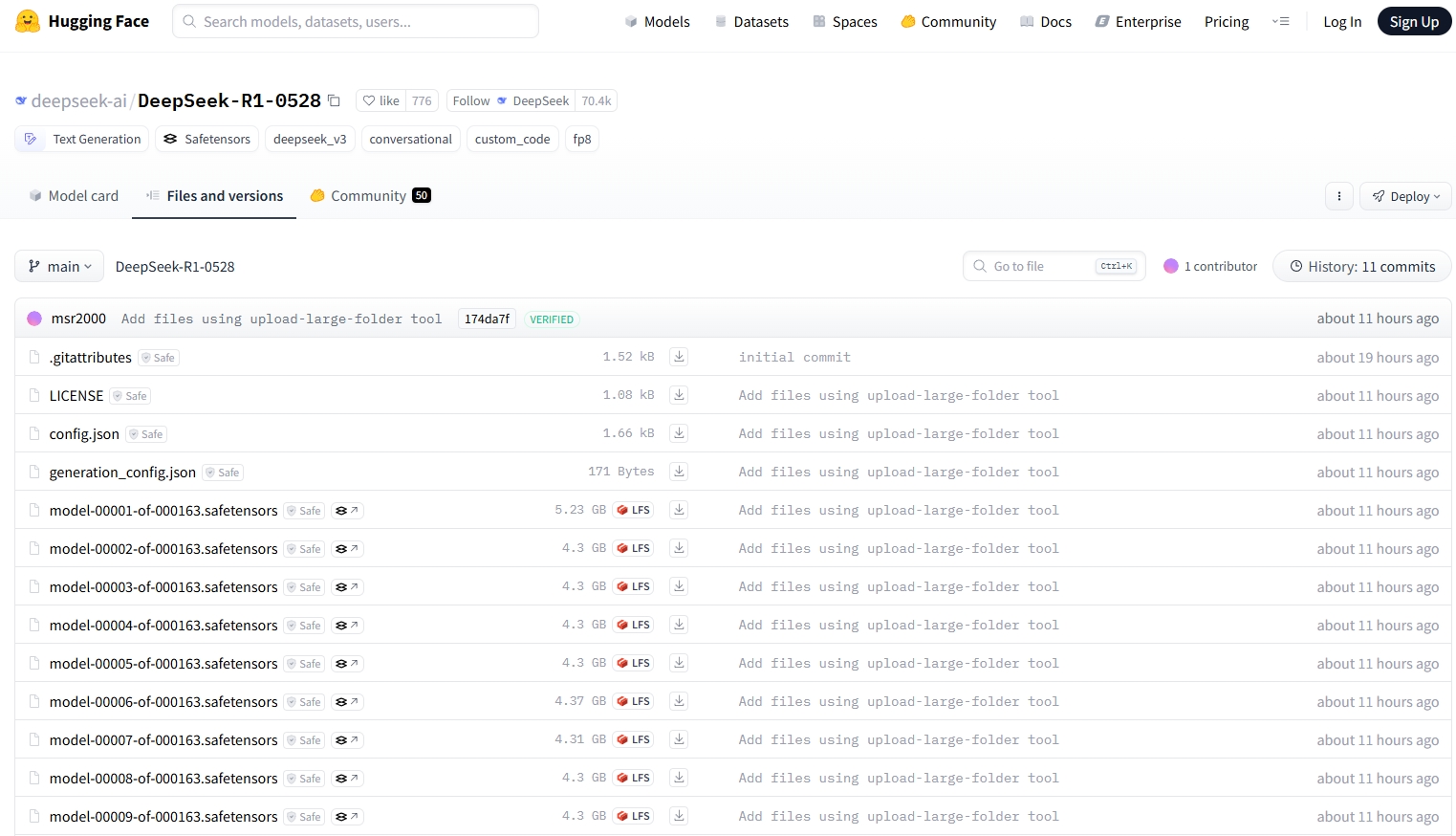

DeepSeek-R1-0528 Achieves 87.5% on AIME 2025

DeepSeek’s upgraded R1-0528 model shows large gains across benchmarks. Its accuracy on AIME 2025 rose from 70% to 87.5%, which DeepSeek attributes to deeper reasoning: the model now spends an average of 23K tokens per question, up from 12K. DeepSeek reports performance approaching OpenAI o3 and Gemini 2.5 Pro, along with a lower hallucination rate and improved function calling. A distilled 8B model, DeepSeek-R1-0528-Qwen3-8B, reaches state-of-the-art results among open-source models of its size and matches Qwen3-235B-thinking on mathematical reasoning tasks.

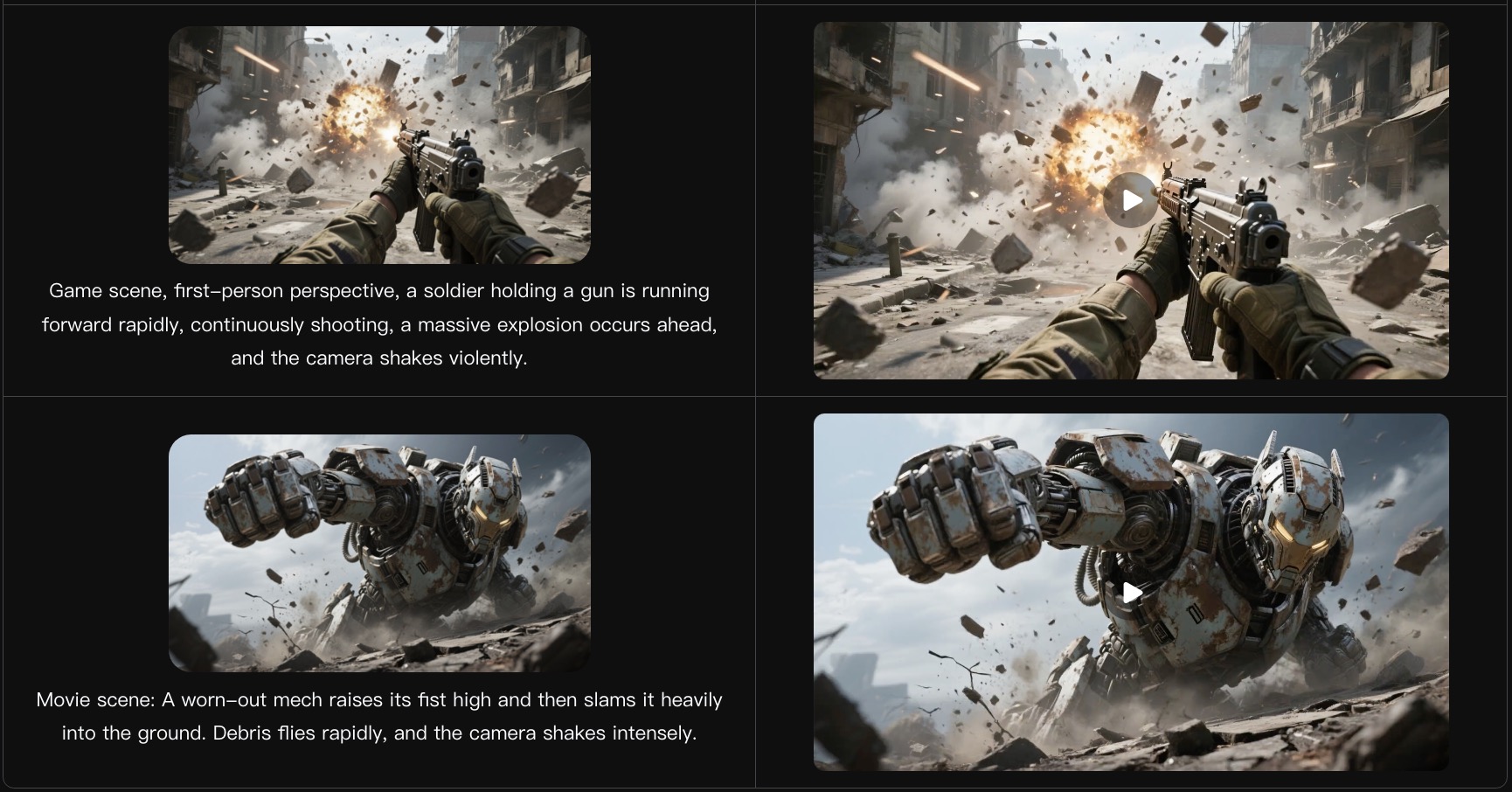

KLING AI 2.1: Faster Video Generation at Lower Credit Costs

KLING AI has launched version 2.1 of its video generation models. The standard KLING 2.1 model comes in two modes: Standard (720p) at 20 credits per 5-second generation, and Professional (1080p) at 35 credits per generation. The flagship KLING 2.1 Master model offers stronger motion dynamics and better prompt adherence, with full 1080p output. For now, the non-Master models support only image-to-video generation; text-to-video is planned for a future release.

Tencent’s HunyuanVideo-Avatar: Multi-Character AI Animation

Tencent has released HunyuanVideo-Avatar, an audio-driven model that generates dynamic videos with controllable emotion and multi-character dialogue. Its key components are a character image injection module, an Audio Emotion Module, and a Face-Aware Audio Adapter. The model handles styles ranging from photorealistic to cartoon, and its inference setup includes GPU memory optimizations: it needs at least 24 GB of GPU memory, with 96 GB recommended. Suggested applications include e-commerce, online streaming, and social media video production. The model is open-source, with inference code and weights publicly available.

Anthropic Open-Sources AI Circuit-Tracing Tools

Anthropic has open-sourced tools that generate attribution graphs, which partially reveal the internal steps a language model takes to arrive at a given output. The project was developed by participants in the Anthropic Fellows program together with Decode Research. Using the library and its interactive Neuronpedia frontend, researchers have already studied behaviors such as multi-step reasoning and multilingual representations in Gemma-2-2b and Llama-3.2-1b. The tools let researchers trace circuits, explore and annotate graphs interactively, and test hypotheses by modifying feature values and observing how the model's output changes, with the aim of advancing interpretability research.